Leaderboard

Popular Content

Showing content with the highest reputation on 03/09/13 in all areas

-

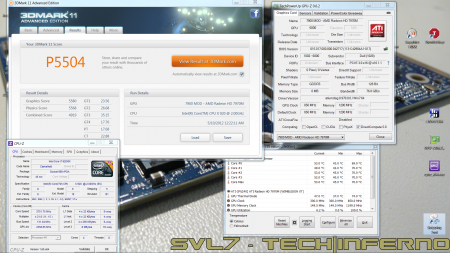

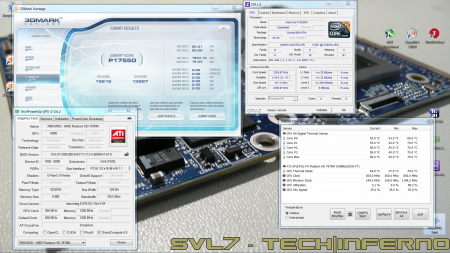

Got my Dell 7970m (ES) today... and so far it rocks! Native fan control, runs cooler than the 6900m series, and it simply kicks ass. It's pretty much plug-and-play with no issues so far, though I will need to continue testing (and benching). So far I've only done a Vantage and a 3DM11 run, with GPU and CPU at stock and Tess on. Check it out, hehe:

Confirmed for the Dell 7970m (part nr. 747M2) in the M15x:
- Fan control is working properly
- Sound over DisplayPort / HDMI is working
- GPU clocks and performs as expected

Possible issues:
- The card runs slightly warmer than the officially supported cards due to its higher power draw. This also leads to less overclocking headroom.
- The card works fine even if you have an i7 920XM in your system, but if you overclock the CPU too hard, the GPU voltage drops, resulting in a drastic performance drop. (This is not directly caused by a power limit of the PSU. The CPU can draw as much power as it can get, but when the CPU draws more power than usual, the GPU voltage supply somehow no longer gets enough power, even though there's headroom power-wise. It's probably caused by the circuit design of the mobo.)

Things to do before changing the GPU:
- Download working drivers. At the moment you can either get the M17x R4 drivers from Dell, or the modified but more recent (12.5) ones from "benchmark3d".
- Get some thermal paste, e.g. Arctic MX-4, Prolimatech PK-1, or whatever you prefer. You'll also need something to clean the GPU die and heatsink; isopropyl alcohol or similar will do. I highly recommend using an ESD mat and wristband whenever working on the hardware.
- Get familiar with the upgrading procedure, or rather with disassembling the system. Refer to the M15x service manual if you need help, or to this pictured guide which explains how to replace the GPU in the M15x.

The upgrading procedure:
1. Uninstall your GPU drivers.
2. Do a power drain: turn off your M15x, remove the power cord and the battery, then press and hold the power button (Alienhead) for about 10-20 sec.
3. Remove your GPU. Here's a little guide with pics in case you need some help.
4. Clean your heatsink and, if necessary, replace the thermal pads with new ones.
5. Make sure the 7970m has the correct backplate on it.
6. Insert your GPU and make sure that it sits properly.
7. Apply the thermal paste and attach the heatsink.

More to come.

-

NOTE: The US$180 BPlus TH05 (incl. Thunderbolt cable) native Thunderbolt adapter used in this implementation was recalled in Jan 2013, presumably due to threats by Intel/Apple, per the TH05 recall notice. As a result, refer to either of these solutions that can be implemented today: http://forum.techinferno.com/diy-e-g...html#post63754 or 2013 11" Macbook Air + Win7 + Sonnet Echo ExpressCard + PE4L + Internal LCD [US$250].

(3-4-2013) EXCLUSIVE!! An i5-3210M 2.5GHz 13" Macbook Pro (2012) + GTX660Ti and HD7870 was performance tested on most bandwidth levels available to 2011-2013 Thunderbolt systems: x2 2.0, x1.2Opt and x1 2.0. The only one that wasn't tested is a native 10Gbps link (electrical=x4 2.0, TB-limited to 10Gbps downstream), which gives ~12.5% more bandwidth than x2 2.0. This writeup is released early so the benchmark results can be studied and conclusions drawn about expresscard vs Thunderbolt, AMD vs NVidia and Optimus vs Virtu. Users contemplating a Thunderbolt eGPU implementation may want to hold off till 2014, when 20Gbps Thunderbolt will be released. Expect eGPUs to really take off then.
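The ~12.5% figure above and the TH05 section's later claim that the TH05 delivers 89% of the bandwidth of competing 10Gbps Sonnet/Magma/OWC products are the same ratio seen from opposite ends. A minimal Python sketch of that arithmetic, assuming only the relative figures quoted in this write-up (no absolute Gbps values are modelled, and the constant and function names are illustrative):

```python
# Relative bandwidth arithmetic, normalised to the TH05's x2 2.0 link.
# Assumption: the only input is the write-up's "~12.5% more than x2 2.0" figure
# for a native 10Gbps Thunderbolt link; no absolute throughput is modelled.

X2_GEN2 = 1.0                 # TH05 (Port Ridge) link at x2 2.0, used as the baseline
TB_10GBPS = X2_GEN2 * 1.125   # native 10Gbps Thunderbolt link, ~12.5% faster


def gain_over(faster: float, slower: float) -> float:
    """Percentage by which the faster link exceeds the slower one."""
    return (faster / slower - 1.0) * 100.0


def share_of(slower: float, faster: float) -> float:
    """Fraction of the faster link's bandwidth that the slower link delivers."""
    return slower / faster


if __name__ == "__main__":
    print(f"10Gbps TB over x2 2.0: +{gain_over(TB_10GBPS, X2_GEN2):.1f}%")
    print(f"TH05 share of a 10Gbps enclosure: {share_of(X2_GEN2, TB_10GBPS):.0%}")
```

Going the other way, 1/1.125 is about 0.889, which matches the 89% quoted for the TH05.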
NVidia continues to be the better brand for best eGPU performance and driver features.

Implementation: i5-3210M 2.5 13" Macbook Pro + NVidia GTX660Ti + HD7870 (TH05 @ x2 2.0)

[Photos: US$465 eGPU kit: PSU + GTX660Ti + TH05 (EOL); 13" MBP cabling using TH05; 13" MBP: DirtII on external LCD; 13" MBP: Heaven on external LCD; mask lane2 for faster DX9]

Notebook
- AU$1200 13" Macbook Pro i5-3210M 2.5, HD4000, 8GB DDR3, 500GB
- 10Gbps Thunderbolt port (Cactus Ridge DSL3510L)
- Windows 7/64 Pro + NVidia 310.90 + AMD 13.10 + US$35 LucidLogix Virtu MVP 2.0

DIY eGPU parts
- US$180 BPlus x2 2.0 TH05: EOL per http://forum.techinferno.com/diy-e-g...html#post36363
- US$280AR MSI GTX660Ti (2GB, 1020/1502) and US$240 Gigabyte HD7870 (2GB, 1100/1200)
- US$5 salvaged 12V/18A ATX PSU

Benchmarks: GTX660Ti vs HD7870, Optimus vs LucidLogix Virtu internal LCD mode @ x1 2.0 and x2 2.0 [highest GTX660Ti external LCD 3dmark: 06/vant.gpu/11.gpu=18569/24363/7810, RE5.dx9=156.1, dmcv4.dx10_s4=204.1]

DX9: 3dmk06, RE5, FFXIV, Mafia2. DX10: 3dmk Vantage GPU, dmcv4. DX11: 3dmk11, Unigine Heaven, Dirt2.

| LCD config | RAM GB | GPU | Link speed | 3dmk06$ | RE5 var | RE5 fixed | FFXIV 1080p | FFXIV 720p | Mafia2 1080p | Mafia2 768p | 3dmk Vantage GPU | dmcv4 scene4 | 3dmk11 720p | Unigine Heaven | Dirt2# 1080p | Dirt2# 768p | Ports & Speed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| internal | 8.0 | HD4000 | - | 6200 | 41.4 | 30.4 | - | 1326 | - | 21.3 | - | 37.8 | 570 | 298 | - | 16.0/21.9 | - |
| external | 8.0 | GTX660Ti | x2.2 | 15813 | 156.1 | 85.8 | 4180 | 4215 | 59.6 | 59.7 | 24363 | 204.1 | 7810 | 2126 | 60.6/93.5 | 72.4/90.6 | QM77 CUDA-Z |
| | | HD7870 | x2.2 | 18558 | 149.6 | 83.2 | 4114 | 4220 | 55.0 | 58.7 | 21808 | 167.1 | 6863 | 1763 | 43.6/62.0 | 51.9/76.2 | QM77 PCIe-S |
| | | GTX660Ti | x1.2Opt | 18569 | 155.5 | 85.4 | 4253 | 4188 | 49.8 | 59.7 | 22629 | 171.9 | 7500 | 1938 | 53.4/76.6 | 58.3/85.2 | QM77 CUDA-Z |
| | | HD7870 | x1 2.0 | 18417 | 142.7 | 76.9 | 4113 | 4172 | 54.6 | 57.4 | 21508 | 168.0 | 6722 | 1689 | 40.3/57.8 | 48.9/69.5 | QM77 PCIe-S |
| internal Optimus | 8.0 | GTX660Ti | x2.2 | 14300 | 118.3 | 78.4 | - | 3874 | - | 60.2 | - | 158.1 | 7432 | 1871 | - | 61.6/86.2 | QM77 CUDA-Z |
| | | GTX660Ti | x1.2Opt | 16801 | 99.5 | 75.4 | - | 3774 | - | 60.1 | - | 93.6 | 6779 | 1437 | - | 50.3/67.9 | QM77 CUDA-Z |
| internal LucidLogix Virtu^$35 | 8.0 | GTX660Ti | x2.2 | 12376 | 102.7 | 77.5 | - | 3853 | - | 59.5 | - | 96.4 | 6502 | 1420 | - | 56.3/68.6 | QM77 CUDA-Z |
| | | HD7870 | x2.2 | 13394 | 61.4 | 48.1 | - | 3414 | - | 52.8 | - | 98.9 | 5274 | 1329 | - | 35.1/47.3 | QM77 PCIe-S |
| | | GTX660Ti | x1.2Opt | 14238 | 69.0 | 58.3 | - | 3517 | - | 52.4 | - | 61.1 | 5563 | 1056 | - | 33.9/45.6 | QM77 CUDA-Z |
| | | HD7870 | x1 2.0 | 11615 | 48.0 | 38.7 | - | 2779 | - | 43.4 | - | 65.0 | 4726 | 1026 | - | 34.8/42.2 | QM77 PCIe-S |
| internal LucidLogix Virtu^$56 | 8.0 | GTX660Ti | x2.2 | 16798 | 144.9 | 83.8 | - | 3914 | - | 88.4 | - | 201.9 | 8035 | 2000 | - | 58.0/75.6 | QM77 CUDA-Z |
| | | HD7870 | x2.2 | 14735 | 95.4 | 52.4 | - | 3406 | - | 53.2 | - | 184.0 | 7768 | 1901 | - | 34.8/61.3 | QM77 PCIe-S |
| | | GTX660Ti | x1.2Opt | 18025 | 117.9 | 62.9 | - | 3565 | - | 53.2 | - | 78.5 | 6929 | 1527 | - | 36.3/45.7 | QM77 CUDA-Z |
| | | HD7870 | x1 2.0 | 11704 | 48.2 | 39.6 | - | 2895 | - | 43.7 | - | 108.5 | 6931 | 1505 | - | 36.2/46.0 | QM77 PCIe-S |

- Virtu^$35 = 30-day trial Virtu with Hyperperformance and Hypersync disabled. Equivalent to the US$35 product. The best one to compare against internal Optimus results.
- Virtu^$56 = 30-day trial Virtu with Hyperperformance and Hypersync enabled. Equivalent to the US$56 product. Increases the chance of double-buffer artifacting.
- $ = 1280x1024 for external LCD and 1280x768/1280x800 for internal LCD
- ! = two back-to-back runs, using the result from the faster second run
- # = min/average, London multi-car track with all HIGH except post-process=MED. Command used: "DiRT2.exe -benchmark example_benchmark.xml"

Brief about Thunderbolt technology, upcoming Haswell and 20Gbps Thunderbolt

Worth waiting for 20Gbps Thunderbolt coming in 2014. We'll see many native Thunderbolt Haswell notebooks being released in June 2013. Maybe Intel will surprise us with pci-e 3.0 Thunderbolt (16Gbps)?

2011-2013 Thunderbolt features 2x10Gbps channels, one of which is exclusively reserved for the pci-e traffic used by eGPUs and the other for DisplayPort. Thunderbolt eGPU enclosures such as OWC Helios and Sonnet Echo Express advertise x4 2.0 electrical connectivity.
They will see the eGPU register itself at x4 2.0 but only transmit traffic down the Thunderbolt link at 10Gbps when measured using CUDA-Z (NVidia) or PCIeSpeedTest (AMD). In practical terms, that works out to be x2 2.0 + 12.5%. The same 10Gbps link restriction applies to the Sony Z2/SVZ's PMD, which uses a Lightpeak controller.

Apple has been the biggest adopter of Thunderbolt, as all 2011 or later Macbooks have TB ports. PC notebook vendors have been slow to follow. This is likely to change later in 2013 when Intel's next generation Haswell architecture is released with native Thunderbolt support. Expect to see many ultrabooks and notebooks featuring mini-DP/Thunderbolt ports. However, those will still likely only have 10Gbps Thunderbolt channels. Users considering a Thunderbolt eGPU might want to hold out till 2014. That's when 20Gbps Thunderbolt, capable of x4 2.0 link connectivity, will be released. All components will need to be upgraded to handle this higher link speed: the notebook, the eGPU enclosure/adapter and (likely) a new TB cable.

Performance Analysis: AMD vs NVidia, Virtu vs Optimus, x1 2.0 vs x2 2.0

NVidia smokes AMD for internal LCD performance and mostly beats AMD for external LCD performance. NVidia delivers more FPS-per-buck. In the test set, the extra bandwidth Thunderbolt offers over expresscard is mostly felt when using the Optimus/Virtu internal LCD mode.

A GTX660Ti is 15% more powerful than a HD7870 on a x16 2.0 bus per techpowerup. So why compare a GTX660Ti to a HD7870? The reason is that the 13" MBP has an active iGPU, so it can benefit from internal LCD mode via either NVidia Optimus or LucidLogix Virtu. The latter is an additional US$35 item that pushes the total price of the HD7870 package to the same level as a GTX660Ti. Internal LCD mode gives the eGPU implementation portability cred. In the analysis below I'm not including the Virtu^$56 results where Hypersync and Hyperperformance are enabled.
There Virtu acts as a middleman between the iGPU and eGPU framebuffer, applying smarts to try to avoid copying redundant frames across in order to boost FPS. Those results do not reflect raw FPS; one reviewer claimed it was cheating, which I agree with. I've also excluded 3dmark06 from every comparison other than the NVidia Optimus vs Virtu one, since (1) x1.2Opt outperforms x2 2.0 there and (2) my version of 3dmark06 doesn't allow setting a 768P resolution for internal LCD mode, so internal vs external would run at different resolutions, which isn't a fair comparison. Now if the 3dmark06 results are very important to you, simply put tape on the NVidia card to run it at x1.2Opt (pci-e compression) and watch the result improve. The same tweak is worth experimenting with on other DX9 titles to see if they too benefit.

How much faster is the GTX660Ti than the HD7870?
* external LCD: +9.0%, max=33.7% [x2.2: +10.5%, max=33.7%; x1.2: +7.5%, max=24.5%]
* internal LCD: +27.3%, max=48.1% [x2.2: +31.4%, max=48.1%; x1.2: +23.1%, max=34.7%] [Optimus vs Lucid^$35]
* internal LCD: +17.2%, max=40.2% [x2.2: +19.3%, max=40.2%; x1.2: +15.1%, max=33.6%] [Lucid^$35]

Now if the system didn't have an iGPU, or the user was primarily interested in external LCD performance, then we can see that:
- AMD performs better at raw x1 2.0 and x2 2.0 DX9 levels (not x1.2Opt). E.g. 3dmark06 and RE5 give less peaky results with higher averages.
- external LCD performance would see little difference between, say, a HD7950 and a GTX660Ti.

However, internal LCD performance sees NVidia+Optimus comprehensively outperform AMD+Virtu. NVidia even performs better than AMD when both use the Virtu software.

What performance benefit does x2 2.0 give over x1 2.0?
* external LCD: +4.8%, max=18.1% [GTX660Ti: +6.3%, max=18.1%; HD7870: +3.2%, max=8.8%]
* internal LCD Optimus: +14.6%, max=40.8%
* internal LCD Virtu^$35: +21.5%, max=36.6% [GTX660Ti: +23.6%, max=36.6%; HD7870: +19.5%, max=34.2%]

Users with IVB/SB expresscard/mPCIe eGPU implementations would likely want to know how much better performance a Thunderbolt eGPU would provide. External LCD sees only a +4.8% average (max 18.1%) performance improvement over x1 2.0, which means the sample benchmarks are not taxing the pci-e bus. The extra bandwidth shows its significance in internal LCD mode, where both Optimus and Virtu benefit significantly from it. We also see that going from x1 2.0 to x2 2.0 in external mode has more of an impact on NVidia cards than on AMD, meaning NVidia is more bandwidth-constricted at x1 2.0 levels. This would be more visible if we compared raw x1 2.0 (no pci-e compression) against x2 2.0. Unfortunately I have no way to switch off the iGPU to do such a comparative test on the Macbook. However, you can refer to http://forum.techinferno.com/impleme...html#post37197 to see x1 2.0 versus pci-e compressed x1 2.0 (x1.2Opt) performance.

The one exception is NVidia's 3dmark06 result, which decreases going from x1.2Opt to x2 2.0. NVidia must have enabled the pci-e compression in their driver (x1.2Opt) to deal with poor DX9 performance under low x1 2.0 bandwidth conditions. It does a stellar job of bumping up performance. The problem is that pci-e compression doesn't activate on a x2 2.0 link, so there NVidia's DX9 performance can lag behind AMD. We see this in 3dmark06 and RE5 (average), though at milder levels than at raw x1 2.0. DX9 is still important as many console ports are DX9. AMD could be a better choice if DX9 performance is critical for your requirements.

What is the performance loss when using Optimus or Virtu^$35 internal LCD mode?
* 14.5% for Optimus when using the GTX660Ti [x2.2=10.4%, x1.2Opt=18.6%]
* 36.9% for Virtu^$35 when using the HD7870 [x2.2=31.6%, x1.2=42.3%]

Optimus' advantage over Virtu^$35 on the GTX660Ti:
* +16.5% [x2.2=14.0%, x1.2=19.0%]

Not surprisingly, NVidia Optimus is the superior rendering technology by a significant margin. NVidia builds the video cards, so we'd expect them to have superior drivers to extract the best performance from them. If LucidLogix wish to be a competitor to Optimus, then they need to provide the same or better performance with Hypersync/Hyperperformance disabled, since that reflects the true performance of the underlying eGPU-to-iGPU copying engine. Furthermore, LucidLogix Virtu isn't production ready yet for mobile platforms: the installer will only allow installation on a desktop system, though I was able to get around that. LucidLogix haven't replied to my emails asking for a fix so that eGPU users could become their customers.

NVidia is still the king for eGPU use

For systems with an iGPU (the enabling technology required for Optimus/Virtu internal LCD mode and x1.2Opt), NVidia comprehensively outperforms AMD on the overall feature set: internal and external LCD performance. Optimus is a significantly faster internal LCD rendering technology than LucidLogix Virtu. Plus we get some other goodies with NVidia: CUDA-accelerated processing and lower pci-e config space requirements, so on a handful of machines NVidia cards can avoid error 12 where AMD cards cannot [unless using a DSDT override].

Are there any exceptions where AMD may give better performance? Yes, AMD is still relevant where:

(1) the candidate system has no iGPU, so only external LCD performance is important. AMD can at least go toe-to-toe with NVidia and in some cases, like DX9, beat it. REF: http://forum.techinferno.com/impleme...html#post37197

(2) the candidate system only has raw x1 2.0 capability (e.g. SB/IVB expresscard systems), where NVidia's pci-e compression doesn't engage.
AMD will outperform NVidia in DX9 apps in that situation. REF: http://forum.techinferno.com/impleme...html#post37197

That said, NVidia still has scope to improve performance. I suspect the NVidia DX9 implementation does far more VRAM copies than the AMD one. NVidia must have identified this as a problem area and so enabled pci-e compression in their driver (x1.2Opt) on low-bandwidth x1 links, doing a stellar job of improving performance. The problem is that it doesn't activate on a x2 2.0 link, so there NVidia's DX9 performance still lags behind AMD, though at milder levels. DX9 is still important as many console ports are DX9. The 3dmark06 x1.2Opt vs x2.2 results would be good ones to present to NVidia, asking why their cards give better results running a single lane than running 2 lanes. They might solve the issue by giving us pci-e compression on x2 and x4 links.

Why the BPlus TH05 was the best Thunderbolt eGPU device to date

It provided high performance, no chassis limits, ATX external input power and a user settable PERST# delay, all at a great price.

The TH05 product uses a Port Ridge chip, a cut-down Thunderbolt controller capable of only connecting at x2 2.0 and with no daisy-chaining of Thunderbolt devices. It delivers 89% of the bandwidth provided by competing Sonnet/Magma/OWC products that range in price from $340-$1000. That is a minor handicap when you consider the following superior design features:
- no chassis, so no limits on card width/length.
- a floppy molex power input to provide slot power.
- a user settable PERST# delay switch. This is very important for Macbook BIOS implementations, and NO competing manufacturer is providing it. More about that later.

The TH05 was the perfect product for Thunderbolt eGPU experimentation. Those who secured one at US$180 (which included the $40 Thunderbolt cable) prior to the recall got a sensational deal.
The only thing it needed was a perspex-type enclosure, like the one offered for the EXP GDC product here, to protect the electronics against accidental damage.

TH05 recall means this is a bittersweet review

The TH05 recall leaves only less convenient Thunderbolt-to-pcie enclosures that cost twice as much as the TH05. Whoever drove the recall (Intel/Apple?) didn't do us any favors.

Unfortunately the TH05 recall means users can't replicate the configuration I presented here for the low price. Competing solutions cost twice as much and still need to be hacked for eGPU use (removal of the enclosure, patching in ATX power). So what is left? You can get either:
* a US$91 PE4L 2.1b + US$170 Sonnet Echo Expresscard Pro adapter to give you x1 2.0 performance levels, or
* a US$320 Sonnet Echo Express SE or US$320 OWC Helios. Both would need a x8/x16 riser to provide a PERST# equivalent, which also doubles up by allowing a double-width card to be used outside of the chassis with ATX power. The T|I user MystPhysx will be starting a thread with more details about how to do this.

Other than 2011+ Macbook users, I wouldn't recommend that PC notebook users specifically purchase a Thunderbolt-equipped notebook from the meagre selection available, plus a Thunderbolt enclosure, at this point in time. The total cost just doesn't justify the performance results. There are plentiful expresscard-equipped business notebooks available that can be mated with a US$91 PE4L-EC060A 2.1b to provide eGPU connectivity.

Appendix 1: Software Setup

I concur with the findings of users Shelltoe and oripash: there are UEFI and BIOS methods of installing Win7/8 on the Macbook, and the choice affects subsequent use/configuration of the eGPU.

1. UEFI MODE [recommended for Win8]

The first (UEFI MODE) requires a little more skill to get partitioned correctly initially, but the eGPU functionality is plug-and-play thereafter. It's the recommended mode if you want to use Win8. I didn't try loading Win7 in UEFI mode.
If you install Win8 using oripash's guide http://forum.techinferno.com/diy-e-g...html#post33280 and Teknotronix' http://forum.techinferno.com/diy-e-g...html#post31839, then you just need to set TH05 SW1=1 (PERST# from PortRidge) and SW2=2-3 (x2..x16). Boot into Win8, where the eGPU will work out of the box. There will be no error 12. It's a plug-and-play configuration. Unlike Teknotronix, I found no need to use a surrogate system to install the UEFI version of Win8. I could boot the MBP, hit the ALT key, select either the Win8 Pro MSDN Installation DVD or a USB stick copy of it, and perform the installation. The only important point is that I had to select the "EFI" DVD or USB stick.

2. BIOS MODE [requires Setup 1.x pre-boot software]

The second (BIOS MODE) is the default Bootcamp 4.0 method, so it's likely users will find themselves in this less desirable mode. It can be confirmed by the Partition style showing as "Master Boot Record" in the disk properties. I successfully loaded Win7 and Win8 and had full eGPU usage using this method.

A Bootcamped MBP runs an MBR type partition system. It requires a special sequence to get the eGPU detected. I found Win8 would *always* get an error 12 against the eGPU. If I didn't get the timing right, I could end up with no eGPU on the PCI BUS, or, if I used the TH05 setting from UEFI mode above (SW1=1), the Macbook would power itself off when trying to boot Win8. The 100% successful method to get the eGPU on the PCI BUS in this mode is: set SW1=3 (6.9s) and SW2=2-3 (x2..x16) on the TH05, power on the eGPU+TH05, then power on the Macbook. Hit ALT during boot to get a boot selection. Watch the RED PERST# LED on the TH05. When it's no longer red, the eGPU is on the PCI BUS, so you can select your required OS. If the delay is too long for your system, it's also possible to flick SW1 to SW1=2 (500ms) while at the ALT screen or Setup 1.1x screen to hasten the PERST# LED going off.
The delay turns out to be more like 30s than 6.9s.

The most convenient fix for the error 12 that will be seen in Windows 7/8 is as follows.

Install Setup 1.30 onto a USB stick. Configure its \config\pci.bat to contain a replica of the same configuration UEFI boot uses for the eGPU, captured and translated below:

```bat
@echo off
echo Performing PCI allocation for 2012 MBP (BIOS) matching the UEFI settings . . .
:: The X16 root port
@echo -s 0:1.0 1c.w=6030 20.l=AE90A090 24.l=CDF1AEA1 > setpci.arg
:: Underlying Bridges in order from high to low
@echo -s 4:0.0 1c.w=5131 20.l=AB00A090 24.l=C9F1B801 >> setpci.arg
@echo -s 5:4.0 1c.w=4131 20.l=A700A200 24.l=C5F1B801 >> setpci.arg
@echo -s 8:0.0 04.w=7 1c.w=3131 20.l=A300A200 24.l=C1F1B801 28.l=0 30.w=0 3c.b=10 >> setpci.arg
@echo -s 9:0.0 04.w=7 1c.w=3131 20.l=A300A200 24.l=C1F1B801 28.l=0 30.w=0 3c.b=10 >> setpci.arg
:: The NVidia GTX660
@echo -s 0a:0.0 04.w=400 0C.b=20 24.w=3F81 10.l=A2000000 14.l=B8000000 1C.l=C0000000 3C.b=10 50.b=1 88.w=140 >> setpci.arg
setpci @setpci.arg
set pci_written=yes
```

Configure the \config\startup.bat to do the pci-e fixups and then chainload to Win8:

```bat
:: Speed up end-to-end runtime of startup.bat using caching
call speedup lbacache
:: wait for eGPU to be on the PCI BUS
call vidwait 60
if NOT '%eGPU%'=='' goto found
call wait_e 5 "eGPU not found!!"
goto OS
:found
:: initialize NVidia eGPU
call vidinit -d %eGPU%
:: Perform the pci-e fixups
call pci
:OS
:: Chainload to the MBR
call chainload mbr
```

Confirm this fixes error 12 against the eGPU as it did for me. Once confirmed to work, streamline this into the more convenient and faster booting disk image install of Setup 1.1x. It's more convenient as you'll no longer need to hit ALT to boot the USB stick; instead, you'll have a DIY eGPU Setup 1.x Win8 boot item. Proceed to copy your \config\pci.bat and \config\startup.bat from your USB stick to the Setup 1.1x disk image V:\config directory as mounted within Win8.
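The hex values in pci.bat are raw configuration-space writes. On the bridges (PCI type 1 headers), 1c.w packs the I/O base and limit bytes, 20.l the memory base and limit words, and 24.l the prefetchable base and limit words, each register holding only the upper address bits of its window. Below is a minimal Python sketch, assuming that standard PCI-to-PCI bridge encoding and ignoring the upper-32-bit prefetchable registers (pci.bat writes 28.l=0 on the 8:0.0 and 9:0.0 bridges). It decodes the 9:0.0 bridge, which appears to sit directly above the GTX660 at 0a:0.0, and checks that each GPU BAR address written by pci.bat falls inside a matching window. The helper names are hypothetical, the BAR-to-window pairing (BAR0 memory, BAR1/BAR3 prefetchable, BAR5 I/O) is an assumption based on typical NVidia BAR types, and since BAR sizes aren't recorded in pci.bat only BAR start addresses are checked.

```python
# Decode PCI-to-PCI bridge windows from the setpci values in pci.bat and check
# that the GPU's BARs fall inside them. Helper names are hypothetical.
# Register layout (PCI type 1 header):
#   0x1C / 0x1D  I/O base / limit            bits 7:4  -> address bits 15:12
#   0x20 / 0x22  memory base / limit         bits 15:4 -> address bits 31:20
#   0x24 / 0x26  prefetchable base / limit   bits 15:4 -> address bits 31:20


def io_window(word_1c: int) -> tuple[int, int]:
    """Split a 16-bit write at 0x1C (e.g. 1c.w=3131) into I/O base and limit."""
    base_reg = word_1c & 0xFF            # byte at 0x1C (I/O base)
    limit_reg = (word_1c >> 8) & 0xFF    # byte at 0x1D (I/O limit)
    base = (base_reg & 0xF0) << 8
    limit = ((limit_reg & 0xF0) << 8) | 0xFFF
    return base, limit


def mem_window(dword: int) -> tuple[int, int]:
    """Split a 32-bit write at 0x20 or 0x24 into base and limit (low 32 bits only)."""
    base_reg = dword & 0xFFFF            # low word: base register
    limit_reg = (dword >> 16) & 0xFFFF   # high word: limit register
    base = (base_reg & 0xFFF0) << 16     # low 4 bits are type flags, not address
    limit = ((limit_reg & 0xFFF0) << 16) | 0xFFFFF
    return base, limit


def bar_address(value: int, is_io: bool = False) -> int:
    """Strip the BAR flag bits (low 2 bits for I/O BARs, low 4 for memory BARs)."""
    return value & ~0x3 if is_io else value & ~0xF


def inside(addr: int, window: tuple[int, int]) -> bool:
    """True if addr lies within the inclusive [base, limit] window."""
    base, limit = window
    return base <= addr <= limit


# Bridge 9:0.0 writes: 1c.w=3131 20.l=A300A200 24.l=C1F1B801
bridge = {
    "io": io_window(0x3131),
    "mem": mem_window(0xA300A200),
    "pref": mem_window(0xC1F1B801),
}

# GPU 0a:0.0 BAR writes from pci.bat, paired with the window each is assumed to use
gpu_bars = {
    "BAR0 (10.l)": (bar_address(0xA2000000), "mem"),
    "BAR1 (14.l)": (bar_address(0xB8000000), "pref"),
    "BAR3 (1C.l)": (bar_address(0xC0000000), "pref"),
    "BAR5 (24.w, I/O)": (bar_address(0x3F81, is_io=True), "io"),
}

if __name__ == "__main__":
    for name, (addr, kind) in gpu_bars.items():
        base, limit = bridge[kind]
        status = "ok" if inside(addr, bridge[kind]) else "OUTSIDE"
        print(f"{name} {addr:#010x} in {kind} {base:#010x}-{limit:#010x}: {status}")
```

Running it prints one line per BAR; an "OUTSIDE" status would suggest a mistyped hex value. The same helpers can be pointed at the other bridge lines (for example the root port's 20.l=AE90A090 decodes to 0xA0900000-0xAE9FFFFF) to confirm each child window nests inside its parent's.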
The reason you can't just use the disk image install for everything is that a Macbook doesn't do the disk mapping correctly, so the disk image can't be used within the Setup 1.1x pre-boot environment to configure the system. Instead, the USB stick is used for the initial configuration and, when done, the pertinent configuration files are copied across to the disk image for read-only access.

Which one? Windows 7 or Windows 8?

Win8 has some nice features (faster boot and animated icons when copying) but loses out on Aero window eye candy like translucent windows and, of course, the much missed Start Button. I had fun using Win8 just to see what's new and different. After a week of playing with it I decided it wasn't for me. Why? Win7 looks better and is a production ready OS. Win8 still had bugs and incompatibilities that disrupt use. Worst for eGPU purposes was that Futuremark benchmarks gave lower results, meaning any benchmarking I did wasn't directly comparable to Win7 results. That was enough to sway me back to Win7 and I haven't looked back.

References used
- DIY eGPU experiences [version 2.0] http://forum.techinferno.com/diy-e-g...html#post41056
- http://forum.techinferno.com/impleme...html#post37197

USB kbd+mouse, why Macbooks give poor Windows battery life

-

Benchmark scores updated.

Oh yes, they do, every game runs smoothly at 6040x1200, hahaha. Yes, the price is insane.

Good work with improving/expanding the desktop part of the T|I forums.

The fans are good, or better said, the combination of vapor chamber and radial fan is a good choice. The disadvantage of radial fans is well known... noise increases significantly with increasing RPM.

-

Just ordered this off Sony's site for myself using a Sony CC ($100 credit towards purchase):

Model Number: US-SVS151290X-LBOM, VAIO S Series 15 Custom Laptop, quantity 1, $827.69

Customization Details:
- Kaspersky® Internet Security (30-day trial)
- 4GB (4GB fixed onboard + 1 open slot) DDR3-1333Mhz
- CD/DVD player / burner
- Internal lithium polymer battery (4400mAh)
- No engraving
- Black
- 500GB (7200rpm) hard drive
- 3rd gen Intel® Core™ i5-3210M processor (2.50GHz / 3.10GHz with Turbo Boost)
- 15.5" LED backlit Full HD IPS display (1920 x 1080)
- NVIDIA® GeForce® GT 640M LE (1GB) hybrid graphics with Intel® Wireless Display technology
- Windows 8 64-bit
- Microsoft® Office 2010 trial version
- No Fresh Start
- Imagination Studio™ VAIO® Edition software

So the total cost was $888 after tax, but with the $100 credit I'm gonna get, it's $788 total. Pretty sweet deal.

-

Isn't it the 680M's successor? I see turbine-type fans, smaller than the fans on other GPUs... I wonder how good they are. Congrats.

-

To do list:
1. Keep looking at picture of stacked titans.
2. Summon hatred.
3. Nurture burning coal of jealousy.
4. Fan the flames of revolution.
5. Lead horde to Switzerland.
6. Claim Titans in bloody coup.
7. Now THAT is a desktop.

Can't wait to see more benches, builds and ideas. Hope they treat you well.

-

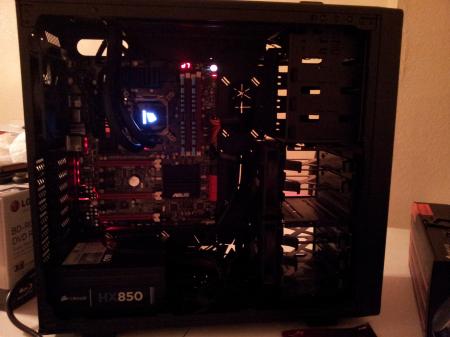

@ConkersGrillforce1337 DAMN YOU CONKERS!!!!!!!!!!!!!!!!!!! That's a sweet setup. I like the dual PSUs; I may opt for a bigger case in the future and do the same if I ever go extreme (custom WC + SLI). It's always good to have a dedicated supply for the graphics, I used to do the same back in the day. Man, I'd love to have a Titan, but one costs half as much as my system lol! I think those two Titans you bought cost almost as much as my entire rig + display hahaha! I'm going to be creating some more desktop subforums to expand the scope of T|I's audience, since you, me, @svl7 and @Jimbo all have desktops now.
