Posts: 3684

Days Won: 121


Everything posted by Brian

-

If we (the community) put the histrionics aside and look at the big picture, this may not be the end of high-end notebook gaming like we think. I think it's evolving away from MXM and towards what AW has done with external high-speed e-GPU solutions for gamers who want bleeding-edge speed. So you get a slim laptop with perhaps a soldered-on 980M-class card that doesn't OC, but you can attach a desktop GM200/R9 390X to it and OC it to your heart's desire (well, PSU permitting). This way you have a mobile gaming notebook and the power of a desktop on tap as well. I kinda like this and figured it would happen. I also wish CPUs weren't going the soldered route, but what can ya do, it's not a perfect world.

Take a step back and look at where gaming notebooks were headed: it was a bit obscene until Maxwell arrived. We had Clevo releasing dual 240W (or was it 300W?) PSUs just to cope with SLI, and AW was pushing the limits of their SLI systems and the PSUs. That's why we had hardcore enthusiasts like @Mr. Fox and @StamatisX building custom dual PSU solutions. But not everyone is as hardcore as they are, nor willing to do that kind of work just to be on the bleeding edge, and it probably wasn't financially feasible or sensible for companies like AW to keep pursuing a design philosophy that always requires bulk, cost and extra measures to control TDP. The logical solution is the one AW took and I applaud part of it, but at the same time I think soldering the CPU was a huge mistake.

Nonetheless, after thinking about it, I think e-GPU solutions, if given a chance and a bit more creativity, can lead gaming notebooks into a whole new era of awesome performance. OEMs won't have to contend with designing bulky laptops that have to dissipate 100W+ TDP and can instead make sexier and higher quality notebooks that have the e-GPU option available for high performance gaming. Hell, give me a really nice Razer/MBP type notebook w/just a decent soldered GPU (e.g. 970M) with the ability to hook it to a universal e-GPU connection (not proprietary like AW's) and I just may purchase it.

Personally, I've written off gaming notebooks for nearly 2 years (hence the desktop in my sig) but this could get me to look at them again. I probably wouldn't use the e-GPU part for myself, but I wouldn't mind getting my gf into PC gaming, and what better way than a sexy new laptop that can also double as a high performance gaming monster via an e-GPU that isn't confined by notebook thermals? Win/win.

-

Could very well be part of the reason but the answer I think is probably more complex than just a single point. Most likely a bunch of reasons factored into their decision ranging from OEMs not liking users holding on to systems longer (hence the sudden move away from MXM), bumpgate, cost cutting on the 980M board (though I can't imagine much savings by shaving off a single VRM), the TDP issue @Prema mentioned etc. Plus NVIDIA also knows they have the mobile market cornered so they can kinda do what they want now so why not just force people to pay more for faster refreshes? Helps relieve the R&D strains by allowing them to milk a design a lot longer than it normally would be allowed. Ultimately, whatever their reason may be, it sucks for current Maxwell owners. Best way is to spread the word about this change and tell people to skip Maxwell mobile products and see what AMD's R9 300 offers (and damn do I hope it's something good). But if AMD fails to deliver, well then I recommend this to make it hurt a little less: I just finished upgrading my system but I may put my money where my mouth is in Jan 2016 by going AMD again if R9 is good - I'd really like it if AMD built it on 20 nm.

-

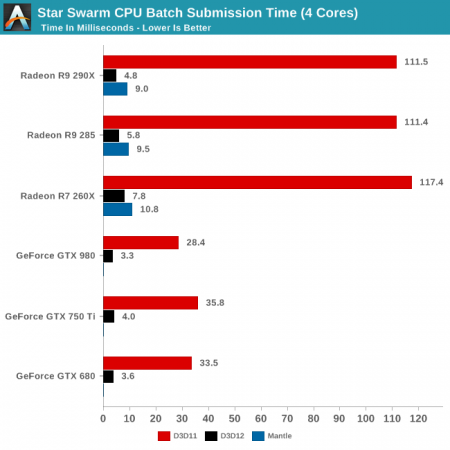

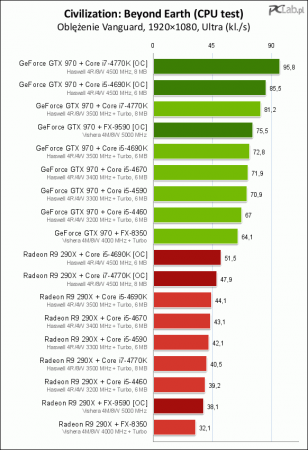

HD 7970M was OK for single cards but was a disaster in Crossfire. A lot of us purchased this card for use in Crossfire and did not have working drivers for over a month while the blame game shifted between AMD and OEM vendors. See here for reference: A Consumer Nightmare – Alienware M18x-R2 + AMD 7970M Crossfire

DP 1.2a did not have the specs for this UNTIL NVIDIA released G-Sync and AMD went running to VESA to implement this as a counter to G-Sync. In fact, Adaptive Sync/FreeSync is still not on the market while G-Sync is a proven technology. It requires an aftermarket board but that's because it pre-dates DP 1.2a, and the additional memory on there holds frames in a buffer for when fps drops below 30. See here for why this is a good thing: Mobile G-Sync Confirmed and Tested with Leaked Alpha Driver | PC Perspective

The final scalers in desktop monitors should be able to account for that but we still don't know for sure. What we do know for sure is that the G-Sync module does, and it also has undisclosed features that NVIDIA plans to reveal. If you want to give credit where it's due, then NVIDIA deserves a lot of credit for finally solving a very old and frustrating problem of jitter + tearing.

Even if AMD says 95C is within spec for their GPU core, that doesn't mean that other components on the board (like VRMs) won't degrade faster from the additional heat. Furthermore, it limits overclocking potential (290/290X can't OC for shit) and it also tosses more heat into your case and/or home. Ever play a game during a hot summer's day in the desert of Arizona? Yeah well, you wouldn't want a couple of 95C cards pumping out heat during those summer months.

Mantle was a way for AMD to cover up for the poor CPU overhead of their DX 11 drivers. Don't believe me? Take a look at these D3D 11 batch times: Or take a look at this: AMD has been notoriously bad with D3D CPU overhead and they still haven't fixed any of it; instead they use Mantle as a band-aid. It was never meant as an open source solution and that's why they claim it's still in "beta". But as you mentioned, DX 12 has made it irrelevant.

Also, NVIDIA are the ones that brought SLI back to gaming. Prior to that, 3dfx were the ones that had it before folding and having their patents purchased by NVIDIA. However, NVIDIA's implementation is completely different from the one 3dfx used; the only thing they have in common is the name. When SLI first came out, ATi's response was of course to follow the leader with their own AFR implementation of Crossfire.

What about poor frametimes in Crossfire? That plagued AMD from its inception until last year, when the FCAT controversy finally forced them to address the lingering problem. In fact, I mentioned that Crossfire had problems with stutter in our review: AMD 7970M Xfire vs. nVidia GTX 680M SLI Review

This was long before PCPer and other websites had any idea that there was a stutter problem with Crossfire. In fact, the only other website to pick up on this was Hard|OCP in their reviews. Unfortunately, this still seems to be the case in games like Battlefield Hardline where AMD doesn't have the benefit of the Mantle crutch:

From Hard|OCP: Source: HARDOCP - Conclusion - Battlefield Hardline Video Card Performance Preview

So those of us that buy NVIDIA hardware do it because we know their hardware and software are just more refined than what AMD offers. AMD has improved along the way but many times it's a case of too little too late, as with Crossfire (their XDMA implementation is great). However, now with NVIDIA's arrogance growing by leaps and bounds, AMD has the perfect chance to clinch some customers that had traditionally written them off. Now is the time for them to step up with R300 and offer a comprehensive desktop and mobile solution that is on par with NVIDIA. If they can deliver, they will regain a lot of their lost market share.

-

Really, of all the points I made in that post, that's what you took from it?? But sure, those of us that stick with NVIDIA hardware may be part of the problem, but that doesn't absolve AMD for not being competitive. IF AMD had been competitive like you assert, they would have done the following:

1. Had mobile parts that had the same perf/watt as NVIDIA - they did not for the last three years!

2. Created niche features like G-Sync first. Yes, they followed with FreeSync but that's still not on the market yet and we don't know how well it works. On the other hand, G-Sync is here and I use it every day.

3. Created cool, power efficient GPUs. Fact is, 290/290X reference versions were a fucking joke. If AMD wanted to really steal the limelight from NVIDIA, they should have put more thought into the reference cooler for the 290/290X upon release so that more of us would have purchased it. When I saw that they tried to market 95C as some sort of normal operating temperature, I wrote them off immediately. Then AIBs not delivering better cooling solutions for more than 1.5 months didn't help matters, did it?

4. Cards like the GTX Titan came out more than 6 months before AMD created the 290/290X to respond. SIX MONTHS! In the GPU world, that is an entire product cycle, so of course some of us bought GTX Titans; we weren't going to wait around for AMD to catch up.

So while they may have employees to feed, it's not our responsibility to keep them fed - they should have done a better job on every front. AMD as a company has had some of the worst leadership in the industry, so why reward failure? Take for example them selling their mobile patents to Qualcomm, who is now making a killing with the Adreno (anagram of Radeon, bet Qualcomm laughed their asses off at that one). If AMD had foresight, they would have gone into the mobile space and developed Adreno on their own and been MUCH larger than NVIDIA could ever dream of being. Secondly, instead of rewarding their hard working engineers, they gave golden parachutes to their joke CEOs. Why reward a company like that? Honestly, fuck them.

NVIDIA does a lot of crooked things but they also have business acumen that has enabled them to survive a shrinking PC business. They employ clever marketing and invent new proprietary technology to gain an edge (e.g. PhysX, G-Sync, GameWorks), all the while AMD plays catch up. When AMD/ATi merged, they had the upper hand vs NVIDIA but they pissed it away; I don't feel sorry for them. Their last chance of redemption will be this upcoming R300 release, and if they screw that up then it may very well be their last. For the first time ever I'm considering going AMD again based on principle, but only if they offer something compelling; money doesn't grow on trees for me or any other consumer.

-

Mr. Fox's Portable Transformer Über-Station for Laptops

Brian replied to a topic in General Notebook Discussions

Nice job Brenner, I like it! The AC in the last pics is a nice touch, just screams, "hey I own a laptop and I OC my shit!" lol.

-

Survey is on T|I's fb page now too.

-

It's obviously a money grab from NVIDIA but I suspect their OEM partners like Dell also played a big role in this decision. Dell has been actively neutering their products for the last decade, and the only thing that has stopped them has been the very resourceful community, from programs like ThrottleStop from @unclewebb to BIOS mods made by @svl7 and @Prema. If we didn't have those sorts of developments out there, then most mobile enthusiasts would have taken it up the ass long ago.

So now with AMD being a near complete failure in the desktop and mobile world and NVIDIA's AIB share having jumped to about 70-75%, it seems they know that the inevitable day has arrived for a GPU monopoly. Jen Hsun must be over the moon because NVIDIA just posted a healthy quarter and as usual AMD is in a very weak position. Financially AMD cannot keep up with NVIDIA any longer and that means they will become less and less competitive with every generation; they are simply spread too thin having to develop a CPU and GPU with razor thin margins. I guess part of the blame is on the community (guys like me) who only buy NVIDIA, but most of the blame is on AMD for not being competitive. This is just the market taking its natural course.

Some have tried arguing that notebooks aren't designed for overclocking and perhaps this is something that was long overdue because it doesn't affect the majority of the market. If this were true, we wouldn't have companies like Lenovo releasing dual GPU machines or Clevo and MSI building thick, well ventilated gaming systems - everyone would have taken the Apple approach by now.

Unfortunately, I fear the worst nightmare of enthusiasts like @Mr. Fox is coming true faster than he thought. First Alienware thumbed their noses at their core enthusiast fanbase and now NVIDIA is following suit. I won't be shocked if we start seeing different levels of NVIDIA hardware for mobile after this if AMD does indeed fail to be competitive.

I'll re-post what I wrote over on [H]:

Hmm you may be on to something here....

980M Stock
980M OC Edition: +$200 nets you 100 MHz
980M OMG Edition: +$400 nets you 200 MHz

Oh Jen Hsun you sneaky bastard!

I don't buy the "bug" b.s. either, but hey, this is what happens when the only game in town for mobile graphics is NVIDIA. AMD has been asleep at the wheel and I'm afraid they will never catch up again. BUT the silver lining is that you can still get modded mobile vbios from sites like Tech|Inferno to OC these things, so all is not lost. GPU vbios flashing should still be viable, so you guys will have an avenue should the driver lockout not be easy enough to circumvent.

Some are talking about this little experiment from NVIDIA extending to the desktop later on once AMD is done, and that could be a possibility. Jen Hsun has aspired to be like Apple/Intel for a long, long time; he's made it known in his speeches if you've followed him. If AMD folds, then expect to see locked and unlocked GPUs from NVIDIA (e.g. stock 980 for $550 and 980K for $750).

I'm ALMOST tempted to sell my G-Sync display and 980s and go with FreeSync + R300 Xfire. We'll see, I just might.

-

Agreed, when I go for a GPU I usually want the best one on the market although the next Titan will be the exception to that rule. That said, whether NVIDIA intentionally did this or really did have a miscommunication w/their technical marketing team still puts them in a negative light to many 970 owners and other potential customers. I do think they should have offered an upgrade path to the 980 for 970 owners where they return the card for a coupon from the retailer they purchased it from and then can use that coupon strictly for a 980 upgrade. That way those who really want all 4 GB ram + ROPs can pay a little extra and do it and those that don't care could stick w/their 970s. As a fan of NVIDIA it did give me slight pause but it won't impact my decision to purchase their products because I still feel they offer the best package overall. AMD to me is a second tier video card manufacturer and is good for budget users but isn't on NVIDIA's level of refinement.

-

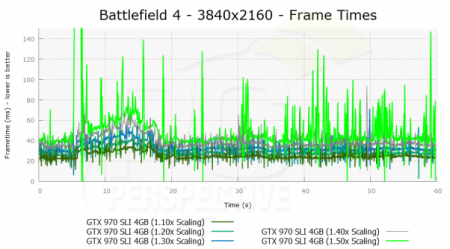

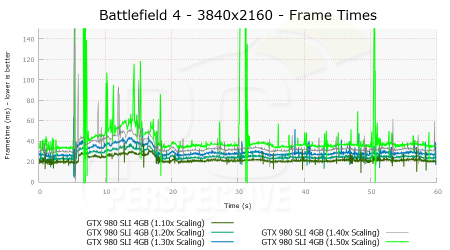

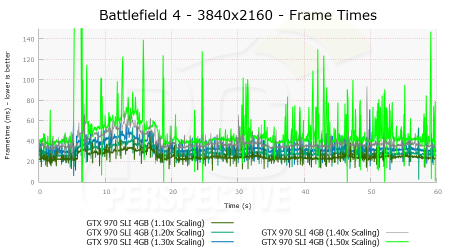

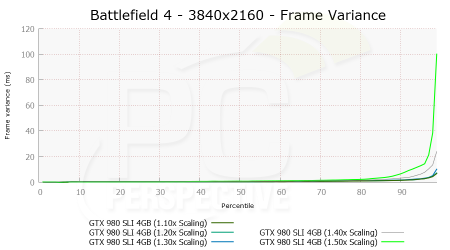

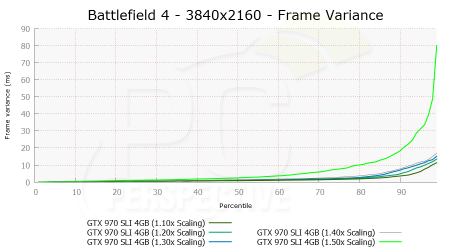

So now that we know the GTX 970 has 3.5 GB of full speed memory plus a separate 0.5 GB segment that runs at 1/7th the speed, and fewer ROPs than originally thought, does that impact your decision to buy the card or keep it?

PCPer did some frametime analysis and here are a few of their results:

KitGuru has an article where they quote Jon Peddie Research saying only 5% of users have requested refunds. Considering all the outrage we saw across the internet and the news of this being plastered on every major computer enthusiast site, it may demonstrate that the average user just doesn't care because the performance hasn't changed. While one can set ridiculous settings like 4K DSR + AA to demonstrate the effect of segmented memory, in reality I don't see people ever facing that in real world use, even in SLI.

What are your thoughts? Is this a serious issue, or one where NVIDIA screwed up but it doesn't change your decisions about future purchases with them?
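To put the 3.5 GB + 0.5 GB split in rough numbers, here's a toy model in Python. It only uses the split and the 1/7th speed ratio from above. The 196 GB/s figure for the fast segment is an assumption for illustration (it isn't from this post), and the model just streams through both segments one after the other, which is a worst case and not how a real driver schedules memory.

```python
def streaming_bandwidth(fast_gb, slow_gb, fast_bw_gbs, slow_ratio):
    """Average GB/s when reading the fast segment, then the slow one, once each."""
    # The slow segment runs at a fraction of the fast segment's speed.
    slow_bw_gbs = fast_bw_gbs * slow_ratio
    seconds = fast_gb / fast_bw_gbs + slow_gb / slow_bw_gbs
    return (fast_gb + slow_gb) / seconds


FAST_BW_GBS = 196.0  # ASSUMPTION for illustration only, not a figure from the post

# Touching all 4 GB versus staying inside the fast 3.5 GB segment.
full_card = streaming_bandwidth(3.5, 0.5, FAST_BW_GBS, 1 / 7)
fast_only = streaming_bandwidth(3.5, 0.0, FAST_BW_GBS, 1 / 7)

print(f"touching all 4 GB:     {full_card:.0f} GB/s average")
print(f"staying within 3.5 GB: {fast_only:.0f} GB/s average")
```

Under those assumptions the slow 0.5 GB takes as long to read as the fast 3.5 GB, so the average drops to about 112 GB/s once the last segment is in play. That only matters if a game actually spills past 3.5 GB, which is the point being argued above.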

-

@Mr. Fox like the computer, man. Shame you didn't get it from Mythlogic instead. Not a fan of Eurocom.

-

If you've got a budget PC, and I'm guessing you do based on the locked 30 fps, just get one of those imported Korean 27" IPS monitors. They have glossy panels, OC to 100 Hz+ and have a low price.

-

Well I ended up getting an RMA refund for the X99-A from NewEgg. Had second thoughts this morning and decided to go for the X99 Pro because it has that extra VRM cooling pipe + comes w/the M.2 board but I still think that white shroud is very fugly and will see if I can remove it. Lucky for me NewEgg allowed me to return it unopened for a full refund - I hear they can be real asses about it. Ended up ordering the Pro from Amazon even though I had to pay $25 in taxes cuz well..Amazon is fucking awesome. @LAW I got a Samsung 840 EVO 500 GB. Probably will move up to a 1 TB 850 Pro eventually.

-

OP is no longer responding so I'm going to assume he found something. Closing thread.

-

Well the shroud is fugly. Don't need wireless AC since I have a physical line running to my router (no wireless latency). Good to know about the H100i, guess my google skillz are weak. Fry's doesn't have the CAS 15.

-

Hey dudes,

So I'm thinking of building a new X99 system today (going to head to Fry's to grab the parts) but before I do, I wanted to run the build by everyone here and get some feedback.

I'm looking at Corsair RAM, specifically CORSAIR Vengeance LPX 16GB (4 x 4GB) 288-Pin DDR4 SDRAM DDR4 2666 (PC4-21300) Desktop Memory Model CMK16GX4M4A2666C15 - Newegg.com, and noticed there's one that costs $20 more but is CAS 16 (CORSAIR Vengeance LPX 16GB (4 x 4GB) 288-Pin DDR4 SDRAM DDR4 2666 (PC4-21300) Desktop Memory Model CMK16GX4M4A2666C16 - Newegg.com). Is there any reason why the slower timings would cost $20 more? Shouldn't it be the other way around? Unfortunately Fry's doesn't carry the CAS 15 so I'd have to get the CAS 16 stuff or shop at NewEgg (which I don't want to do since I hate their customer service).

Here's what I have in mind for my build:

Motherboard: Asus X99-A (I was considering the MSI X99S Krait but the PCI-E layout sucks for SLI). If anyone can recommend something better I'm open to it.
RAM: See above.
GPU: Already have 2 x 980 SC SLI
PSU: Already have AX1200i
Case: Got a Corsair C70 but not happy with it. Really wish I could get my hands on the 800D but nobody is selling it anymore.
CPU: Intel 5820K - don't see any point in the 5930K or 5960X since I don't need the extra lanes. The extra 2 cores would be nice but not for $1000 a pop.
Cooler: H100i (it's the older one, will it still work on this board?)

Currently this is what I have:

Mobo: Asus Maximus V Formula
CPU: [email protected] GHz
GPU: See above
RAM: Vengeance 2133 MHz XMP
Case: See above

Thanks.

EDIT: BUILD IS COMPLETE! Pretty happy with the results, here are the end specs:

Motherboard: ASUS Rampage V Extreme
CPU: Intel i7 5820K
CPU Cooler: Corsair H100i with MX4
RAM: 16 GB (4 x 4) Corsair Vengeance LPX 2666 MHz
GPU: 2 x EVGA GeForce GTX 980 SC
Case: NZXT H440 Black & Red
PSU: Corsair AX 1200i
SSD: Samsung 840 500 GB + 1 TB backup drive
Fans: 3 x Corsair SP 120 for the front, 2 x AF 120 on the H100i and the regular NZXT 120mm in the back.
Lighting: RGB LED strips + controller from Fry's Electronics
Total build time: About 2 days.
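For what it's worth, the CAS 15 vs CAS 16 gap at DDR4-2666 can be put in nanoseconds. This is a rough sketch using the usual first-word latency arithmetic (CAS cycles times the length of one clock cycle); the function name is made up for illustration and it ignores every other timing on the kit.

```python
def cas_latency_ns(cas_cycles, data_rate_mts):
    """First-word latency in nanoseconds for a CAS rating and a DDR data rate (MT/s)."""
    clock_mhz = data_rate_mts / 2      # DDR moves data twice per clock cycle
    cycle_ns = 1000.0 / clock_mhz      # length of one clock cycle in nanoseconds
    return cas_cycles * cycle_ns


# Compare the two kits from the post, both rated DDR4-2666.
for cas in (15, 16):
    print(f"DDR4-2666 CAS {cas}: {cas_latency_ns(cas, 2666):.2f} ns")
```

That works out to roughly 11.25 ns for CAS 15 and 12.0 ns for CAS 16, so the CAS 16 kit really is the slightly slower one on paper, by well under a nanosecond. It doesn't explain the $20 difference, which is more likely down to stock or pricing than to the timings.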

-

This game looks pretty sweet. It's in closed beta right now and has some next-gen looking graphics. Take a look at these videos:

Anyway, if you want to know which video card you need to play this game at smooth framerates, a website called Gamers Nexus did some benchmarks: Read more at Evolve Graphics Benchmark

So who's going to be picking this up? Seems to be something I'll grab along with Dying Light and GTA V. Doubt it'll have a lot of replay value but it should be fun for shits and giggles when I'm bored. Anyway, look at those graphs. NVIDIA seems to be dominating as usual.

-

Picked up a couple of EVGA GTX 980 SCs with ACX 2.0 coolers, and compared to the Titans they are replacing, the temperatures are WAY better (Maxwell ftw), as is the operating frequency. It's pretty awesome how far NVIDIA has taken 28nm, and they aren't done yet as the big Maxwell (GM200) will probably arrive in 2 months in the form of Titan 2 and then probably GTX 1080i in June or so (I'll probably grab that).

Anyhow, I added a side fan to the computer door to help exhaust heat since these are open air coolers and therefore dump heat into the case. Temps and OC are fantastic as I mentioned, with my OC so far hitting 1520 MHz on stock voltage and max temps being 75C and 63C respectively. These cards idle at 40C and 34C each so I'm really happy about that. I'll probably do a run of 3DMark in a few minutes just to see how they do, but I'm not a hardcore benchmarker. I prefer to test the results of my overclock in games I actually play and then crank their settings up and see how much an OC benefits me.

Here's my setup:

Benchmarks:

3DMark GTX Titan SC SLI vs 980 SC SLI (980s are on STOCK voltage): Result

Stock vbios. +130 core (1571 MHz core/1853 MHz memory), +12 mV voltage, 120%. Temps never got above 69C on GPU 1, second card barely hit 60C.

My 3DMark run: 19155

-

Since our last thread got deleted, I'll start anew:

CURRENT OWNERS:

| Owner | Where Purchased | Cost | Production Date |
| --- | --- | --- | --- |
| 5150 Joker (Brian) | Amazon.com | $732 + tax | October 2014 |

The World's First WQHD G-SYNC™ Gaming Monitor

Forged for Gaming Perfection

The ROG SWIFT PG278Q represents the pinnacle of gaming displays, seamlessly combining the latest technologies and design touches that gamers demand.

This 27-inch WQHD 2560 × 1440 gaming monitor packs in nearly every feature a gamer could ask for. An incredible 144Hz refresh rate and a rapid response time of 1ms eliminate lag and motion blur, while an exclusive Turbo key allows you to select refresh rates of 60Hz, 120Hz or 144Hz with just one press. The ROG SWIFT contains integrated NVIDIA® G-SYNC™ technology to synchronize the display's refresh rate to the GPU. This technology eliminates screen tearing, while minimizing display stutter and input lag to give you the smoothest, fastest and most breathtaking gaming visuals imaginable. Connectivity is simple, yet robust, with a single DisplayPort 1.2 port to enable NVIDIA® G-SYNC™ and dual USB 3.0 ports for convenience.

ASUS-exclusive GamePlus Technology enhances your gaming experience further by providing you with crosshair overlays for FPS games and multiple in-game timers for RTS/MOBA games.

The ROG Swift has a super-narrow 6mm bezel that begs this monitor to be used in multi-display gaming set-ups. With VESA wall mount capability and an ergonomic stand designed with full tilt, swivel, pivot and height adjustments, the ROG SWIFT is ready for gamers of all shapes and sizes to game comfortably for hours on end.

GREAT GRAPHICS FOR THAT IN-GAME ADVANTAGE

The 27-inch WQHD Display that Offers Greater Detail and More Onscreen Real Estate

The ROG SWIFT PG278Q represents the next generation in gaming displays. This WQHD 2560 x 1440 monitor delivers four times the resolution of 720p, with 109 pixels per inch or 109 RGB matrices per inch. This gives you the benefits of greater image detail and up to 77% more onscreen desktop space than standard Full HD (1920 x 1080) displays. These minor details add up to give you a major in-game advantage.

Conventional 27-inch 1920 x 1080 monitor: Shows less detail and spacing with less room for work bars on the side. Images are relatively blurrier.

ROG SWIFT PG278Q 27-inch 2560 x 1440 monitor: See more detail and spacing with more room for working bars on the side. Images are much more crisp and detailed.

ENJOY A SMOOTH, STUTTER-FREE GAMING EXPERIENCE

Incredible 144Hz Refresh Rate

Unlock the highest frame rates available, decimate lag and say goodbye to motion blur to gain the upper hand in first person shooters, racers, real-time strategy and sports titles. With twice the frame rate of standard LCDs, the ROG SWIFT PG278Q Gaming Monitor encourages you to never blink again.

Fast Frame Rates: The ROG SWIFT PG278Q Gaming Monitor delivers refresh rates of up to 144Hz to deliver the smoothest possible gameplay, even in graphically-intensive fast-paced action sequences.

Minimal Motion Blur: The ROG SWIFT PG278Q Gaming Monitor minimizes motion blur to display more natural movement, making it ideal for first person shooters or real-time strategy games.

Incredible 1ms Rapid Response Time

Response time and ultra-smooth gameplay are the hallmarks of a true gaming monitor. With a 1ms rapid response time – the fastest available – the ROG SWIFT PG278Q Gaming Monitor eliminates smearing, tearing and motion blur, allowing you to quickly react to your game.

Gain a Competitive Edge with NVIDIA® G-SYNC™ Technology

NVIDIA® G-SYNC™ display technology delivers the smoothest, fastest and most breathtaking gaming imaginable. G-SYNC™ synchronizes the ROG SWIFT's refresh rate to the GPU in your GeForce GTX-powered PC, eliminating screen tearing and minimizing display stutter and input lag. Scenes appear instantly, while objects look sharper and more vibrant. Gameplay is fluid and responsive too, ensuring a serious competitive edge.

The ASUS Display R&D team works closely with NVIDIA to ensure that everything runs smoothly on ASUS G-SYNC™ monitors. The ROG SWIFT PG278Q gaming monitor with built-in G-SYNC™ delivers super smooth and stunning visuals.

Note: G-SYNC features require an NVIDIA® GeForce GTX650Ti BOOST GPU or higher. For more detailed information, please refer to G-SYNC Technology Overview | GeForce | GeForce

NVIDIA® Ultra Low Motion Blur Technology

Conventional 60Hz monitors show extensive motion blur which detracts from your gaming experience. The ROG SWIFT PG278Q Gaming Monitor with NVIDIA® Ultra Low Motion Blur technology* reduces the effects of motion blur by delivering crisp edges in fast-paced gaming environments for perceivable differences in natural movement.

*Users can turn on the Ultra Low Motion Blur function from the OSD setting. This works with titles that do not support the NVIDIA® G-SYNC™ technology. Ultra Low Motion Blur technology works only at 85Hz, 100Hz and 120Hz.

NVIDIA® 3D Vision™ Ready

The ROG SWIFT PG278Q is compatible with the NVIDIA® 3D Vision™ 1 and 2 kits* to support up to three WQHD displays for an immersive multi-display 3D gaming experience. ROG SWIFT provides the finest 3D gaming and content viewing experience thanks to the ever-growing library of over 700 3D Vision™ ready game titles, as well as 3D Blu-ray movies and YouTube 3D Playback support. See everything in a new dimension with the ROG SWIFT.

*Requires NVIDIA® 3D Vision™ kit, sold separately. Go to www.nvidia.com and www.3DVisionLive.com for further details.

GAMER-CENTRIC FEATURES

ASUS-exclusive 5-Way OSD Navigation Key

The intuitive 5-way navigation key allows you to select OSD settings with just a flick. Switching menu settings has never been so easy.

ASUS-exclusive Refresh Rate Turbo Key

The ROG SWIFT features a dedicated hotkey to toggle refresh rate on-the-fly without needing to access the graphic driver control panel. Quickly select from 60, 120, or 144Hz refresh rates and go back to gaming.

Keeping It Cool with a Smart Air Vent Design

The ROG SWIFT PG278Q Gaming Monitor is designed for marathon gaming sessions. Its smart air vent design aids heat dissipation to keep things cool for extended periods of time.

Awesome LED Light-in-Motion Effects

The ROG SWIFT PG278Q Gaming Monitor comes with a mysterious and intricately-patterned base that can illuminate with an LED Light-in-Motion feature.

IMPROVED IN-GAME ACCURACY

ASUS-exclusive GamePlus Technology

The ROG SWIFT PG278Q Gaming Monitor features the ASUS GamePlus hotkey with crosshair overlay and timer functions. Select from four different crosshairs to suit your gaming environment, and keep track of spawn and build times with the on-screen timer that can be positioned anywhere along the left-hand edge of the display.

ADVANCED CONNECTIVITY WITH SMART CABLE MANAGEMENT

The ROG SWIFT PG278Q Gaming Monitor includes DisplayPort 1.2 for native WQHD output and dual USB 3.0 ports for convenient pass through connectivity. A cable management design feature found on the back of the monitor allows you to organize and hide your cables to keep your gaming area tidy.

Ports: Power, USB 3.0 Upstream, DisplayPort 1.2, 2 x USB 3.0 Downstream

SAY HELLO TO EXTRAORDINARY COMFORT

The ROG SWIFT PG278Q Gaming Monitor is specially designed for long marathon gaming sessions. Its slim profile and super-narrow 6mm bezel make it perfect for almost seamless multi-display setups. The ROG SWIFT also has an ergonomically-designed stand with tilt, swivel, pivot and height adjustment so you can always find that ideal viewing position. It can also be VESA wall-mounted.

Stand adjustments: Swivel (+60° ~ -60°), Height adjustment (0~120mm), Tilt (+20° ~ -5°), Pivot (90° clockwise)
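The resolution claims in the ASUS copy (four times 720p, 109 pixels per inch, up to 77% more desktop space than Full HD) are easy to sanity check. Here's a quick sketch in Python; the helper names are made up for illustration, and it assumes the 27-inch figure is the exact diagonal of the viewable area, which may not be precisely true.

```python
import math


def pixels(width, height):
    """Total pixel count of a panel."""
    return width * height


def pixels_per_inch(width, height, diagonal_in):
    """Pixel density along the diagonal, assuming square pixels."""
    return math.hypot(width, height) / diagonal_in


wqhd = pixels(2560, 1440)
vs_720p = wqhd / pixels(1280, 720)              # how many 720p frames fit in WQHD
extra_vs_1080p = wqhd / pixels(1920, 1080) - 1  # extra desktop area over Full HD
ppi = pixels_per_inch(2560, 1440, 27)           # ASSUMES an exact 27" diagonal

print(f"WQHD vs 720p: {vs_720p:.0f}x the pixels")
print(f"WQHD vs 1080p: {extra_vs_1080p:.1%} more pixels")
print(f"Density at 27 inches: {ppi:.1f} PPI")
```

The numbers hold up: exactly 4x the pixels of 720p, a bit under 78% more than 1080p (so "up to 77%" is rounded down), and just under 109 PPI.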

-

So H1Z1 is out and I think they finally managed to iron out their login issues. If you don't know what H1Z1 is then I'll explain. It's a persistent open world survival game that copies the concept DayZ created and expands on it with Sony's take. It's based on the PlanetSide 2 engine so people are hopeful it runs much smoother than the ARMA engine does (which it should). Anyhow, for those that have it, we should find a common server and meet up. I have a TeamSpeak server I run that we can all join as well if needed (ts3.kgbgaming.com).

-

Doesn't seem like 1230 ram would kill a DDR chip, not unless you were applying some insane voltage to them. Regardless, sucks but I guess now you can grab a 970/980m.