-

Posts

3684 -

Days Won

121

Everything posted by Brian

-

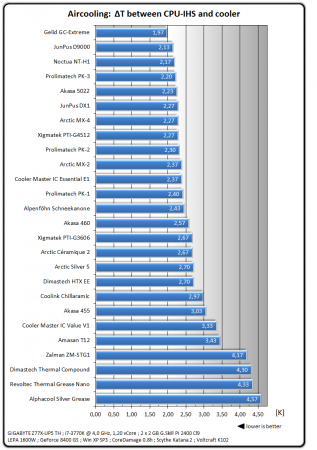

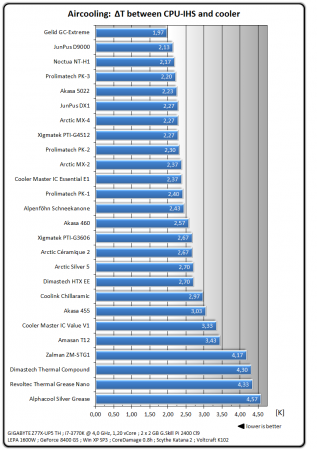

So I was talking to another T|I member and he mentioned his preference for GC-Extreme in his Clevo system because it's thicker than other pastes like MX-4. Now from personal experience, I've never had issues using a paste like MX-4 in a mobile system like an Alienware, and I can't imagine there's much variation between performance notebook heatsinks. Plus someone did a really thorough test on different pastes over at the hwbot forum and presented these results: So as you can see, there's <1C difference between a more expensive paste like GC-Extreme and others like MX-4. My last desktop build with a 3770K processor and 2 GTX Titans used MX-4 for over a year, and it didn't dry out and the thermal capacity remained consistent. So based on personal experience, which do you use and why? And please mention what method you use to apply your paste, as that can have a significant impact on results.

-

I've never used MX-4 on a Clevo IHS but it's hard to imagine it'd be too different from an Alienware. What application method do you use? Anyhow, this is going off topic so I think a separate thread to discuss thermal pastes is in order (though we have an older one based on IC testing somewhere). For anyone else interested in the thermal paste discussion: http://forum.techinferno.com/general-notebook-discussions/9145-whats-your-preferred-brand-thermal-paste-your-notebook-why.html#post124381

-

Not sure I follow, what do you mean it "does not have adequate pressure and pumps out in days"? As in dried out? Because I've used it on video cards (both desktop and notebook) + CPUs and kept it on there for 1+ year at a time without it drying out or losing thermal capacity. Anyway, here's a comparison of some pastes: GC Extreme does a bit better but overall a delta T of <1C isn't very meaningful. I'd probably go with whatever is cheaper, safer and easier to apply.

-

Just keep in mind IC Diamond scratches the surface of the die unless you're extremely careful - and even then it probably still will. Just use something like MX-4, it works nearly as well and is super easy to apply and gives great temps.

-

If any T|I players want to get together for a game, let me know or just leave your Steam name here.

-

@J95 does this quite a bit so he may be able to help you out.

-

If that's the case are you able to file a claim for warranty service? Try to get the motherboard replaced based on the fumes making you sick. If it's out of warranty then try baking it or using a heat gun. Just be careful not to melt the solder.

-

Because that's the model the guy who started the suit purchased, and he was denied a refund by Newegg and Gigabyte.

- 20 replies

- Tagged with: class action, gtx 970 (and 1 more)

-

No it specifically names NVIDIA as a defendant.

-

Well the inevitable has happened as a result of the GTX 970's 4 GB controversy. Someone has filed a class action lawsuit against NVIDIA and Gigabyte over deceptive marketing practices with respect to the GTX 970, alleging that they (NVIDIA) purposely marketed the card as having 4 GB of RAM, 2048 KB of L2 and 64 ROPs when in reality it has 3.5 GB of usable RAM plus 0.5 GB of "spillover", 1792 KB of L2 and 56 ROPs. It also states over 8100 people have filed a grievance with the FTC: The lawsuit also attaches a bunch of images from Newegg that purportedly show off advertising of 4 GB GDDR5 and other relevant technical details that they claim were misleading. Surprisingly, the suit doesn't allege that the card isn't a true 256-bit card, at least I couldn't find it. Here's the link to the lawsuit: Nvidia lawsuit over GTX 970
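For a quick sense of scale, here is a minimal sketch that compares the advertised figures quoted above against the alleged actual ones. The numbers are taken straight from the post; the dictionary layout and the `actual_share` helper are made up for illustration and don't come from the lawsuit itself.

```python
from fractions import Fraction

# (advertised, alleged actual) pairs as quoted in the post above.
SPECS = {
    "full-speed VRAM (GB)": (Fraction(4), Fraction(7, 2)),
    "L2 cache (KB)": (Fraction(2048), Fraction(1792)),
    "ROPs": (Fraction(64), Fraction(56)),
}


def actual_share(advertised, actual):
    """Return the alleged actual figure as a fraction of the advertised one."""
    return actual / advertised


ratios = {name: actual_share(adv, act) for name, (adv, act) in SPECS.items()}

for name, ratio in ratios.items():
    print(f"{name}: {float(ratio):.1%} of the advertised figure")
```

By these numbers, all three figures work out to the same 7/8 share of what was advertised.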

-

Turn off SLI and see if it appears.

-

I'm sure we'll see something very soon from the likes of Futuremark.

-

They probably will at some point since they plan to build a game around that demo/engine. But releasing it right now would probably be premature since older architectures like Fermi and GCN 1.0 don't work currently with DX 12.

-

To see the benefits of DX12 (a low-level API) you need games that are built around it. See the Star Swarm benchmark that AnandTech ran: AnandTech | The DirectX 12 Performance Preview: AMD, NVIDIA, & Star Swarm

-

Did you notice a drop in fps after enabling it? That's one way to know it's on.

-

Depends on whether it's a high player server or not. If it has a lot of players, you run into them quite often.

-

AW version is a gimped soldered one with lower clocks and performance. It gets beat by the gtx 780m, rest just smash it.

-

I really can't figure out why NVIDIA does this. I wonder if this is a general limitation they impose because they have to deal with so many different configurations of laptops (e.g. ones with and without Optimus), or are there issues with these features on laptops that we don't know about? BTW, not all is green on the desktop side of things, at least if you own an SLI + G-Sync setup. I don't have access to MFAA or DSR and although NVIDIA has promised to add it for G-Sync/SLI users, I see no signs of it yet. Maybe I should try to find some registry hack as well.

-

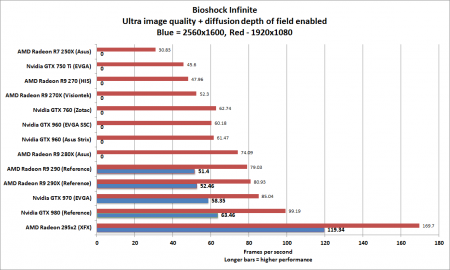

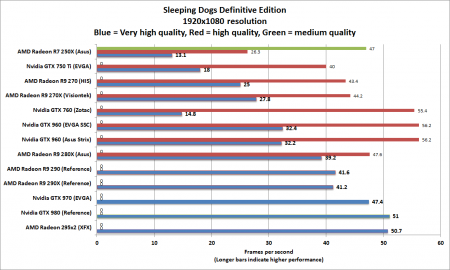

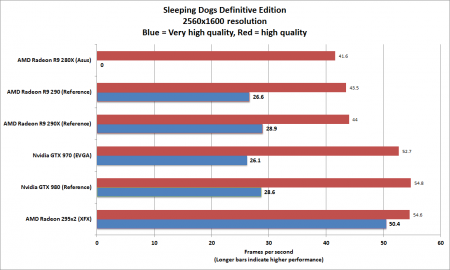

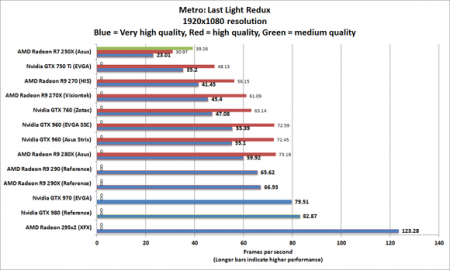

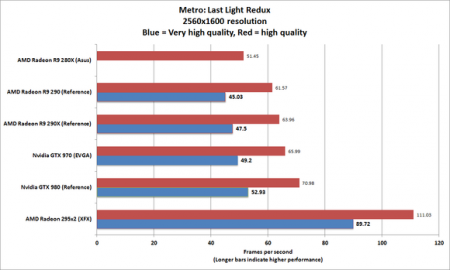

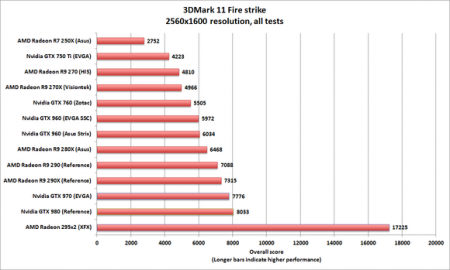

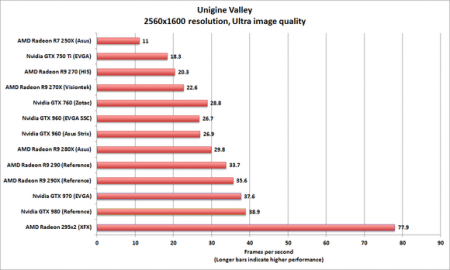

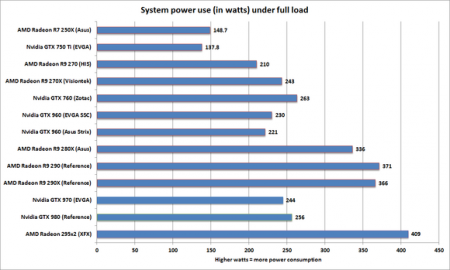

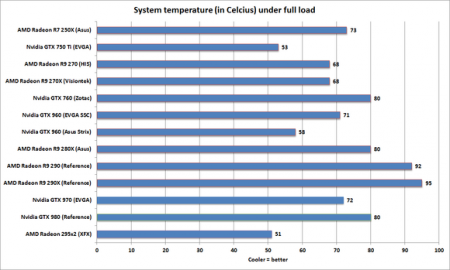

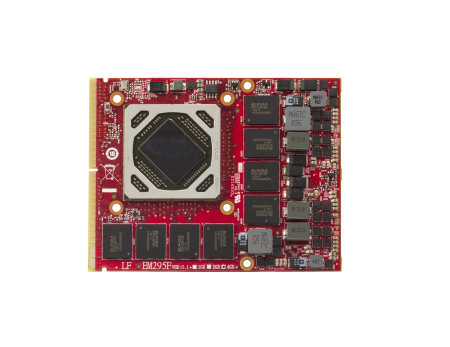

Surprisingly they did a pretty thorough article at almost every price segment comparing AMD vs NVIDIA and I thought their reasoning was pretty fair and objective. They did leave out mention of AMD's inability to get Crossfire profiles out in time and other lingering issues but aside from that, I thought it was well written. Of course it lacks frametimes but that's something most readers of PC World wouldn't understand anyway. Take a look: Graphics card comparison: The best graphics cards for any budget

Here's some graphs from their review: Look at AMD's power consumption..yikes! Anyhow, here's their conclusion:

So which graphics card should you buy?

Many charts and many thousands of words later, we’re finally ready to answer the question: Which graphics card within my budget gives me the best bang for my buck?

$100: If you’re looking to spend $100 or less, the AMD Radeon R7 250X is your best choice. It’s no barn-burner, but it will let you play modern games at 1080p on low to medium detail settings.

Under $200: The Radeon R9 270X is a solid choice, especially if you can find one on sale around $150. You'll need to dial down some detail and anti-aliasing settings in especially demanding games, however. But it’s worth giving the Nvidia GeForce GTX 750 Ti honorable mention here, because it doesn’t need any supplementary power connections whatsoever. That, plus its humble 300W power supply requirement, means the GTX 750 Ti could add a big graphics punch to a low-end system with integrated graphics for just $120.

$200: The GTX 960 is clearly the best pick of the cards we’ve tested, delivering very playable frame rates with high or ultra settings at 1080p resolution. Its silence, coolness, and power efficiency are top-notch, too. But note that while we haven’t been able to test its Radeon counterpart (the R9 285) directly, other sites report that AMD’s card offers similar performance, albeit in more power-hungry fashion.

$250: The Radeon R9 290 can’t be beat here. This high-end card was selling for $400 less than six months ago. Insane!

$300 - $500: Nvidia’s GeForce GTX 970 is a beast of a card at $330, despite the recent firestorm over its memory allocation design and incorrect initial specs. The card bests AMD’s flagship R9 290X in our trio of games at both 1920x1080 and 2560x1600 resolution, has plenty of overclocking overhead if you want to push it further, sips power, and runs far cooler than AMD’s graphics cards. That said, if you plan to game on multiple monitors or on a 4K monitor, the Radeon R9 290X’s memory configuration makes it better for pushing insane amounts of pixels. And if you’re gaming on a single non-4K monitor, opting for a $300 Radeon R9 290X over a $330-and-up GTX 970 could save you some real dough with minimal performance impact, assuming you can find one of those $300 deals, that is.

$500 and up: There’s no question: The $550 GeForce GTX 980 is clearly the most potent single-GPU graphics card on the market today. Its insane power efficiency is just icing on the cake.

The dual-GPU champion: Finally, the $700 Radeon R9 295X2 is just in a league of its own, as it should be, with a pair of graphics processors crammed into a single card. If you can afford the sticker price and the sky-high power usage, this behemoth utterly demolishes any single-GPU graphics card you can buy. And with prices hovering around $695, it now sells for less than half of its original $1550 sticker price. Note, however, that you could buy a pair of Nvidia GTX 970s and run them in SLI for roughly the same price and performance. But you’d lose the Radeon R9 295X2’s single-card form factor, kick-ass integrated water cooling, and (most notably for the high resolutions you’re likely gaming at, if you’re considering a card like this) AMD’s memory configuration, which as I said earlier, is better built for pushing anti-aliasing settings while gaming at ultra-high resolutions when compared to the GTX 970’s odd memory design.

-

And all that only yields 30 fps stable? Very odd, I'd have expected much more.

-

What settings do you use and which version of Dying Light?

-

30 FPS with an OC'd 780M sounds very low. You should easily be hitting 50+ fps even at 1080p, so try adjusting some of the settings you use.

-

I dunno man, I think the intent is pretty clear that it's about desktops but maybe some would also think it extends to notebooks. But the way they talk about system modifications (watercooling, optimizing case air flow) isn't something people typically associate with notebooks. I really don't see any litigation arising from this at all.