
Posts posted by johnksss

At the end of the day, it does not matter to me. It was only to say it works. You are more than welcome to go take it up with him, though, instead of speculating over here with us. Asking the source is clearly the better option, right?

-

Well, that's why I was enlightening you guys... :)

-


Of course. That's a given. That happens everywhere. People go overboard and just don't want to let things go until they get their two cents in, but they weren't talking about textures and 8k/4k and ms this and that. They were telling him he's a liar. He wasn't first, and he was not giving credit to the guy who was first and who showed him how to do it in the first place. It's the same type of shenanigans that keep vbios files from getting made or bios files from going to certain vendors. So that's why he posted a comment in the video frames about him talking to venturi and how they settled it, but that was not what he was telling the world for the first minute of his video. First this and that. Go tell the world. I was first and blah blah blah.

That's like someone coming and saying they did the first teardown video of a P870DMG. I'm going to be right there calling them a liar point blank, when I know Mr. Fox was the first person to even have the machine. (Well wait, were you first with one? lol) And guess what? So will everyone else around here and over at nbr.

And that's what happened on his channel.

You know it's all for one and one for all.

-


2 minutes ago, Mr. Fox said:

Hmm. I don't know who Digital Foundry is, or what "FCAT it" means. Nor do I really care at this point. I still thought it looked good enough. I'd be more interested in seeing his benchmark scores than some silly GTA V video with all of the display settings jacked up. I kind of doubt that anything is going to really run worth a damn with such ludicrous display settings.

I thought Thirty IR's post from 4 days ago was pretty awesome. I don't let trolls post comments on my YouTube channel either. I moderate all comments, and if I don't like what people have to say I delete their comments without approving them and then ban them from my channel.

They weren't trolling. He said he was first, and he wasn't. LOL, and they clowned him on it. That's what you don't see, I'm afraid.

I posted a link to the guy that was actually first, but if I find my own pictures I'm going to be bucking for first.

-


34 minutes ago, octiceps said:

4-way "works", but it's a terrible experience just watching the video, and I'm not even playing the game. FPS and GPU usage drops all over the place, hitching, massive microstutter whenever the camera is turned, frame time spikes into the hundreds of ms range, etc. 99th percentile frame rate during that run was probably under 20 FPS. This is actually perfect evidence of why SLI is for number-chasers, not for a smooth gaming experience. I'd love to see Digital Foundry get their hands on a Titan XP 4-way and FCAT it so they can tear that guy to shreds.

You take it with a grain of salt.

You, just like me, don't know if that's the game or the video.

And for a real test he would either need to do what Linus did or get online and actually try to kill someone with a sniper rifle, or have a gun battle, which we do not see at all.

And he should have done one at 4k so we could have a better understanding of just how well or not well it works. Some of my videos look choppy, but the game was running perfectly fine....So you can't really just base it off of that.

As to tearing him to shreds, they already did that, but he deleted all the posts about it. And the funny thing about it is....The way they got sli to run. I did that with my first gen titan x's, by using all 8 tabs. So that was interesting. I don't know about that pcie stuff in the bios though.

-


Let's get something straight about the post.

1: We all know it's in reality 4k or so he says.(considering that osd is pretty damn big for 4k or even fake 8k) I thought that was a given. And in his dialog about it? I already told you about his post clean ups and retractions and blah blah who cares.

This is at 8k from what he speaks.

I never said it was 8k. We are jumping the gun on what was said...

All I said was he got 4 way working. What should have been the topic is 4 way working, not 5 reasons about everything other than the 4 way working.

When I ran Battlefield 4 at 200% it was using 8 gigs of video memory, so to me something is amiss or missing, but I did not take the time to go tear apart his video for the answer.

Edit:

In my Fallout 4 video I thought that was 4 gigs of ram, but I had not read it out fully and was missing the decimal point.

Thanks for the clarification on that.

Edit: It says I'm using just under 10 gigs of video memory for 2560x1440P@120hz GTAV

-

3 minutes ago, octiceps said:

There is smooth. And then there is that.

BTW that's not 8K. That's 4K with 4x DSR.

I know that, my friend. It's mentioned in the video as well.

-

23 minutes ago, D2ultima said:

Interesting... though I expect four would've been a stutter fest. I would say 3 might perform better. It's too bad, I'd have liked to have seen that. Maybe a 4.8GHz 5960X with three Titan XPs finally getting GTA V to max out and hit 120fps constantly.

Although he did some post clean up and retracted part of his story...LOL

This is at 8k from what he speaks.

The original first 4 way sli working...

-

I was only pointing out what some have done. And the game in question was GTAV. As to how well it was working, I have no idea. I was only mentioning that it was done.

-

Well, scaling has been made to work with 4 Titan XP's.....

-

Well damn, then there goes that idea.....

And I'm still not selling my second card.

-


In my situation, that would not be a good thing. I don't want anyone around my LN2/Dry Ice/Phase Change or Water Chiller. That could turn out disastrous. And there is way too much expensive stuff sitting around that could potentially end up broken, unfortunately. And the grandkids have their own gaming rig, which I really need to ship off to them next week. lol

And when benching with ln2, you do not have time to be monitoring anyone but what you are doing. lol

-


And this would make for a good argument on why they wanted to put desktop type gpus in notebooks instead of mobile. They could cut out sli/crossfire and just set the power to match that of the desktop counterpart. I don't see them matching the pricing any time soon though.

-


hummm, didn't take the video files...

-


31 minutes ago, Mr. Fox said:

Well, you had me confused because you replied to my post and said, "The gap is massive because of ln2, you will never ever ever catch a desktop that can run ln2."

So, that's why I asked you about it. I thought you were saying those scores that I linked were higher because they were cooling with LN2, which did not make any sense since they were not overclocked that much and should not need LN2 cooling to run at those clock speeds.

Ah, it's because you haven't started benching desktop stuff.

That was a reference to the whole total picture. Right now you have to put in way too much work on the 10 series cards to gain anything. That's why it's easier to get a bunch of on water and air results. But with these on air results you will find cpus under ln2/dice/ss/cascade/chilled water, which will push scores higher.

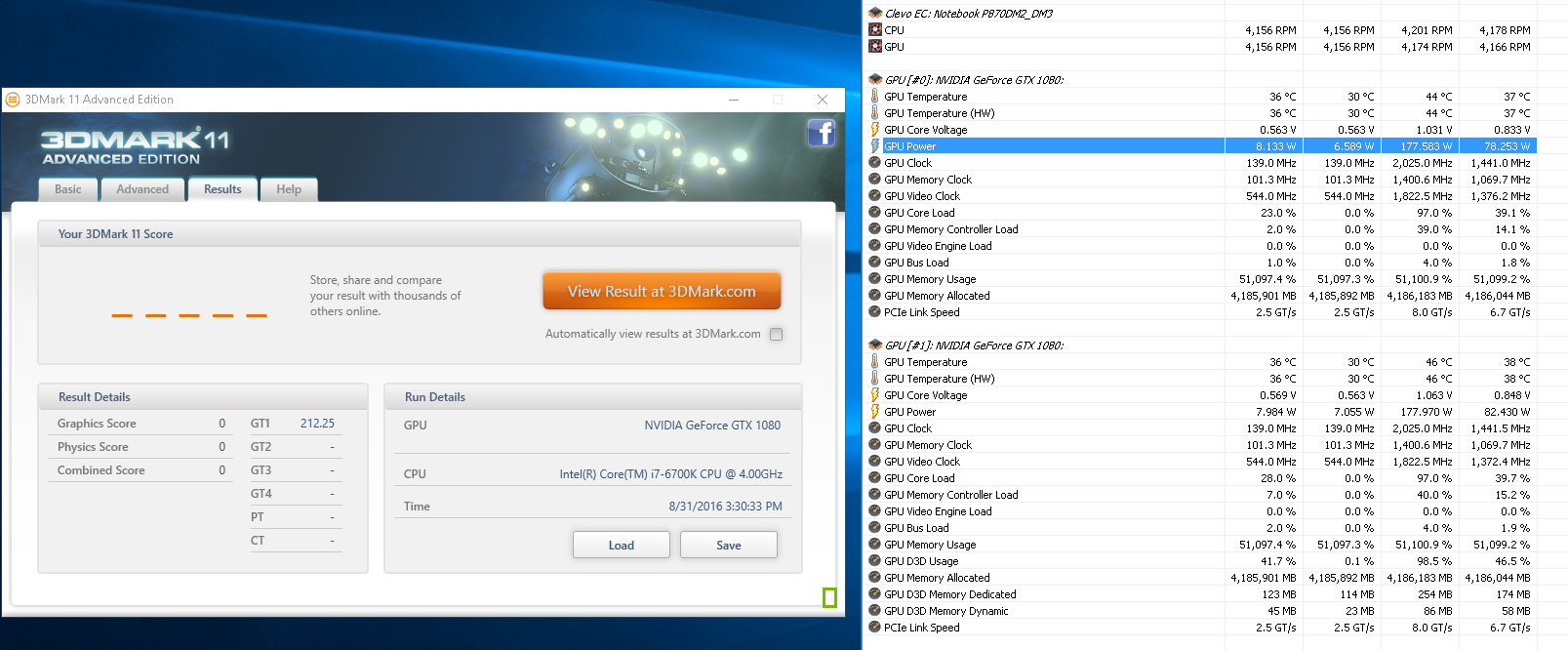

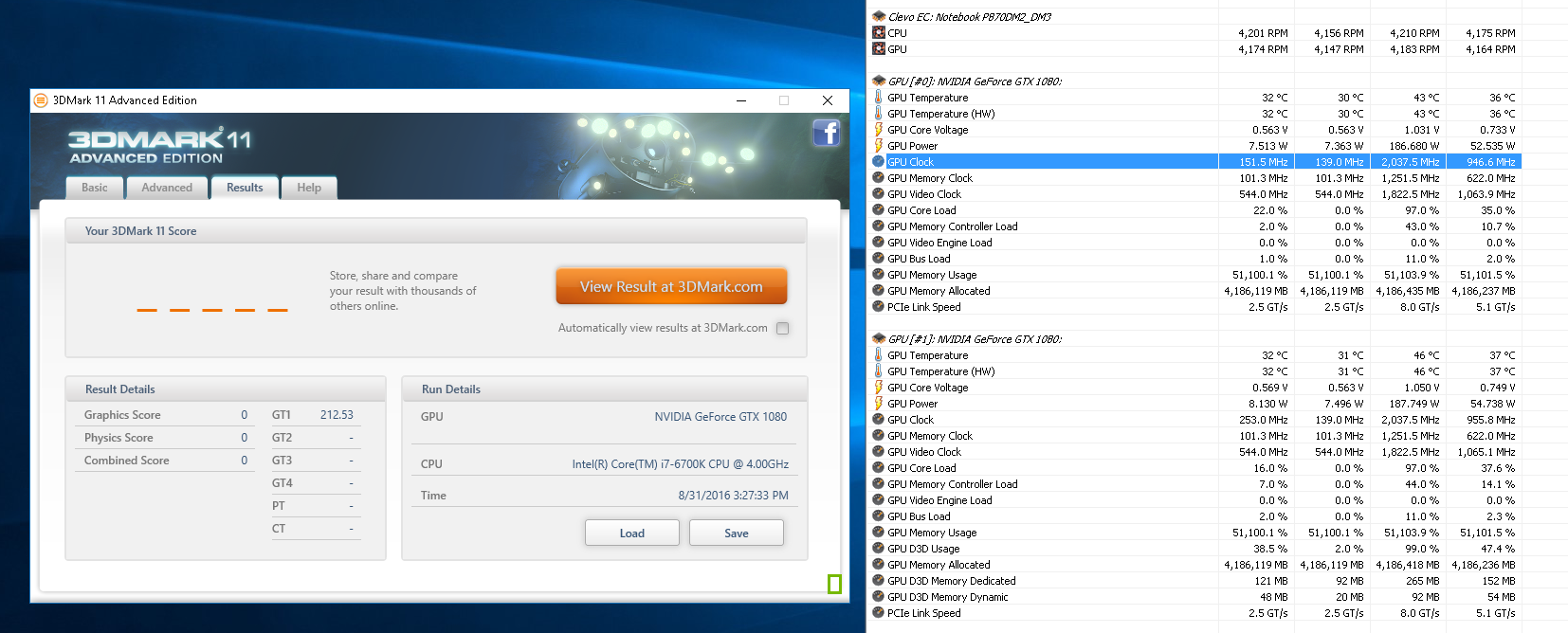

"The gap is massive because of ln2, you will never ever ever catch a desktop that can run ln2."http://hwbot.org/submission/3266743_smoke_3dmark11___performance_2x_geforce_gtx_1080_46488_marks

His cards are on air and I have him beat, but because of the ln2+6950 he has me beat overall. And at the end of the day, it's overall scores that win.

Technically speaking we could run ln2, but that would be very very risky

So, using 2 1080's and a 6700k: a system benching both of these on ln2 is one you will never ever catch, and that is how that statement came about. But anyone basically on air is catchable. We saw this with @Papusan's 6700k score, and with my own scores in firestrike.

Sorry for the confusion.

4 minutes ago, Prema said:

I think what John meant to say is that you have a real chance in the Notebook world to lead the way while the Desktop world is dominated by 'insanity'.

Yes, this is a perfect answer!

And that's why I do both.

-


2 hours ago, Mr. Fox said:

Those examples are using LN2 on the video cards? It looks like there is a pretty massive gap in the graphics scores alone between 1080 mobile and 1080 desktop, while there is hardly any difference in GPU core and memory clocks. I suspect the far more powerful CPUs are contributing to some extent.

And, for the CPUs clocked at only 4.4GHz and 4.6GHz they are using LN2 in those examples in my links? Why would they need to use LN2 at such a low core clock?

They are not on ln2 my friend.

I have been benching against menthol for a while now and he is not an ln2 guy

http://hwbot.org/user/menthol/

And neither is the other score, which is using a 6900k.

And Menthol's 3dmark11 run is higher because of Windows 7, while the other guy's is higher because of flashing a better factory vbios.

Which brings me to....Where did you get LN2 from? Technically I didn't even have to look up Menthol because I already knew, but wanted to post for verification purposes.

My 3dmark11 with desktop 1080's

http://hwbot.org/submission/3289339_johnksss_3dmark11___performance_2x_geforce_gtx_1080_38710_marks

So once this thing with our cards is figured out, I'm pretty sure there will be a bunch more gpu scores falling by the wayside.

2 hours ago, Prema said:

DM3 will also be able to do that 54k 3DM11 GPU score, no worries.

The 1080N SLI has already caught the 1080 SLI (2), and that is without being flashed to a higher profiled card.

http://www.3dmark.com/compare/fs/9846237/fs/9100118/fs/9954310

So with the added goodness, things will look that much better indeed.

-


10 hours ago, Mr. Fox said:

Yeah, I know a lot of people are really sold on G-sync. I really don't understand why. To me it is an absolutely worthless gimmick that I have never realized any benefit from. It seemed worthless to me on the Sky X9 and it was included on the machine I just sold at no extra charge. I would never wait for it to become available as part of my purchasing decision, and I certainly would not entertain the idea of paying extra to have it because it did nothing whatsoever at any point to enhance my experience. NVIDIA is the master of gimmicks.

The more I think about this, and how much pure joy I get from overclocked number-chasing, the more I am inclined to believe option A or B makes far too much sense for me to seriously entertain option C. Plus, option C is the least affordable of the three. Since playing games is a distant second place behind the pleasure I derive from benching, those numbers are very hard to ignore. And, while we are closer than ever to closing the gap between desktop and laptop, that really only applies to the P870DM3. Even so, the gap is still massive and it is VERY, VERY far from being true for any machine less than a P870DM3... which is basically everything. The P870DM3 totally eclipses everything else available anywhere in a notebook.

http://www.3dmark.com/compare/3dm11/11426953/3dm11/11495374/3dm11/11528240

http://www.3dmark.com/compare/fs/9846237/fs/9873275/fs/9954310#

The gap is massive because of ln2, you will never ever ever catch a desktop that can run ln2.

And as long as the voltage is capped at 1.5V, you won't fully catch desktop cpus either...Even if you had ln2

-


27 minutes ago, Mr. Fox said:

If the most powerful current generation laptop on the face of the earth isn't good enough to make the decision to keep it an easy one, then maybe I should just forget about the whole mess.

I was thinking of resale value. Most will want G-sync gpus and a G-sync display. This is none of the above, so that means I would have to wait till they show up and then reorder.

-


@Mr. Fox You already know how I operate. First try to see how high it can go. We are not your normal reviewers, and who wants to wait 2 months to figure out what the machine is supposed to do if we don't really have to, I always say.

I didn't really want to go too in depth with things because I haven't decided on if I'm even going to keep it yet.

That current is going to stop you that's for sure, but going into 5 ghz range you need higher than 1.5V to bench. You might be able to get away with cpu validations sitting on 4C/8T, but trying to bench vantage. Not going to happen. You pass vantage cpu bench, you can bench almost anything at that clock and voltage. Wprime32 requires less voltage, but also vantage is known for killing cpus and or motherboards with no over voltage protections.....

-


2 hours ago, Mr. Fox said:

Yeah, I absolutely agree with that. More is always better. The goal is not to use less power than before... use just as much, but do more with it, or use even more and do more with more. That is where the value of the firmware mods comes in.

All I can say at this point is...good luck with that.

Edit. Had to run out for a bit.

So I will explain this part everyone seems to be missing.

The part we need to change to be able to compete against desktop cpus in our class is the voltage. Without being able to go in the 1.525 and up range, the cpu is locked in place. No amount of bios editing is going to fix that. And when going to 5 ghz and higher, the voltage needed to make runs gets to staggering new heights. And at each voltage curve there becomes an invisible temp wall.

I'll use this link as an example.

See how @Papusan is pretty much the top of all air benched cpu's? Or at least of the recorded ones.

This result is excellent news for all mobile enthusiasts because he is in fact at the top of all air benched 6700k's. Who cares if you can clock 5.3 ghz if you get beat by everyone running 4.8 ghz? If you look down that list you will see even one guy on [email protected] ghz in like 71st place. His run was not optimal...

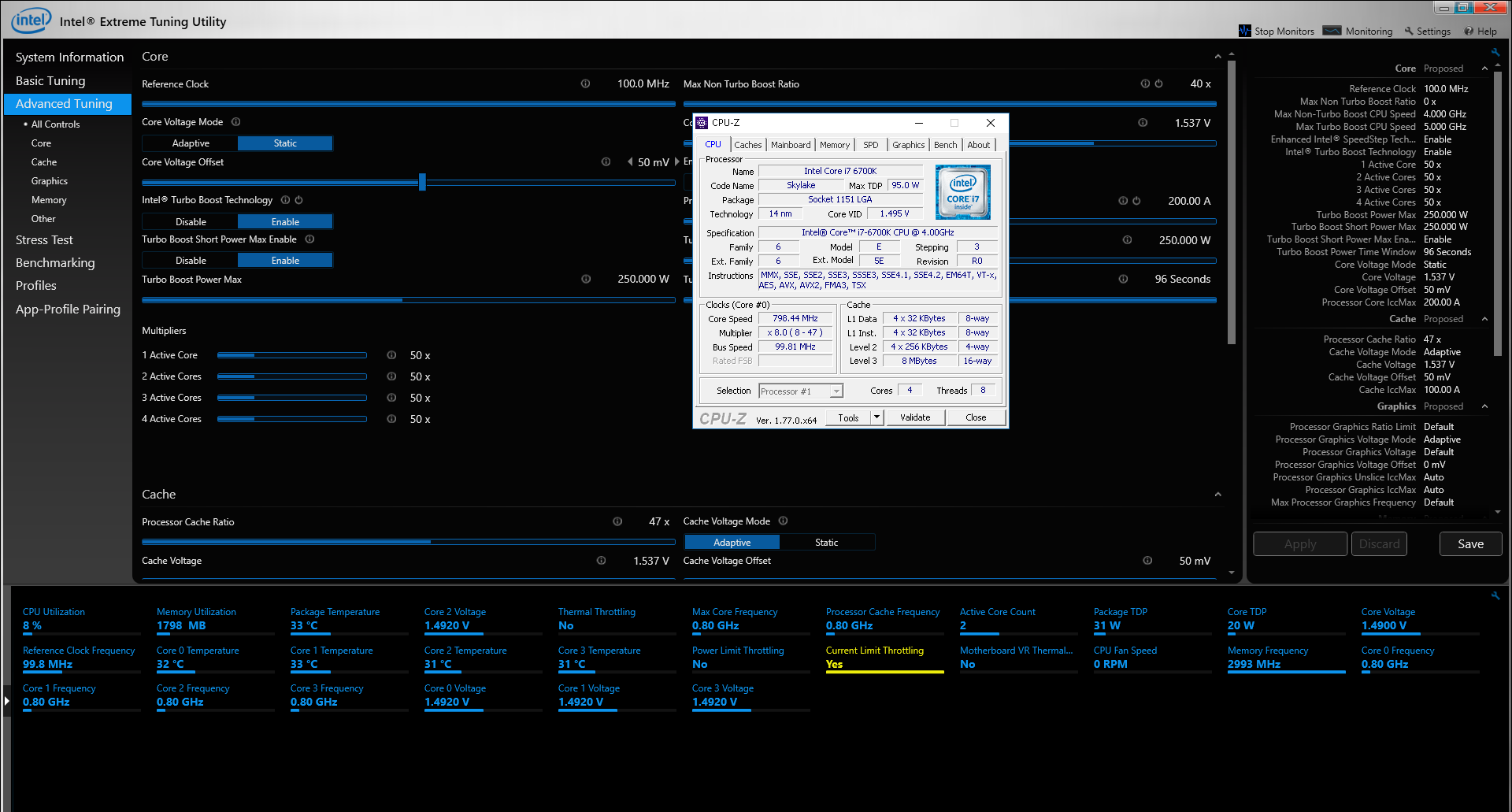

Going by this as an example: 5 ghz needs more than 1.5V to be stable. Even if we unlock the current limit throttling, you still can't get past the most important part: the real core voltage. And that, my friend, is hard coded somewhere on the motherboard, and has been for quite some time in laptops, I'm afraid. Along with the other stuff you can't see in the bios files, or the changes being made that don't stick because...of the stuff you can't see in the unlocked bios file.

Now the 1080/1070/1060.

Pascal is based off of temps, no doubt about it. I was only able to push 2.055 ghz on the air cooler, but once I went to water I was able to push 2.150 ghz stable at the exact same voltage, 1.093V max. (My desktop 1080's with flashed vbios from another card. Exact same clocks.) I haven't really sat down to see how high we are on the 1080N, but I think it's somewhere near [email protected] ghz core.

Here is where we are standing at the moment with regards to gpu and cpu

Tried setting voltage to 1.535V and the highest it will go is 1.504V

Memory overclock

No memory over clock

Hope this all makes a bit more sense.

-


5 minutes ago, Mr. Fox said:

Yes, at one point with some very extreme overclock/overvolting it did go that high. The 180W 980 SLI easily pulled 725W in 3DMark 11 and Fire Strike without going to extremely high overvoltage.

So they seriously came down in wasting watts as a whole. Because my P570WM3 was also pulling past 700 watts, while this P870DM3 is doing far better with less. That means that there is more untapped power to work with later after a righteous unlock, but....these machines would need to be far colder for you to actually capitalize on it though...., but more is better in our book.

-


27 minutes ago, Mr. Fox said:

Does "bonus points" translate into 3DMark points and other performance metrics that match 1080?

If not, you're merely doing your best to squeeze some lemonade from the box of lemons when you really wanted a glass of orange juice.

Personally, I have a hard time with the idea of aspiring to second tier performance as a solution. It's an upgrade, but a disappointment at the same time because it will never be the best option.

Yes, but that's for guys like us....The other 98 percent of the people just want something better than what they have.

And a 1070N is better than a 980N. It uses less watts and gives more performance for less money on an upgrade, provided one can get one to work in a machine other than what it was designed for.

And anyone coming from a single 980M or lower...A 1070N is an upgrade. It is also an upgrade to a single 980N, just not as much.

Other news.

http://www.3dmark.com/compare/fs/9946886/fs/9225544/fs/9686773/fs/9954310/fs/9988160/fs/8291254

I would recommend anyone with sli 980M's to just wait it out, because the only thing beating it is SLI 1080's & 1070's. I would add SLI 980N as an option, but it seems you would need more than 2 psu's to run at full speed/locked down speed. I think Brother Fox had that at over 800W?

I for the life of me haven't been able to get past 500W yet. So far 477W max.

-


28 minutes ago, Mr. Fox said:

Nice scores brother @johnksss.

I wonder why 3DMark is showing the GPU clocks so differently between desktop and notebook 1080? Makes it harder to compare the graphics performance when you don't have an accurate view of their core and memory clock speeds.

http://www.3dmark.com/compare/3dm11/11315534/3dm11/11528240#

Edit: Time Spy shows them correctly. Must be 3DMark systeminfo needs to be updated.

Thanks Brother Fox

1: Different drivers do that about 95 percent of the time. Something to do with how it reports the clocks back. Some tests pick them up correctly and others don't. The actual clocks are 2020/5504

2: You should have grabbed the next result down, it shows the clocks closer to right, even though it's still not right.

3: Time Spy/Cloud Gate/Fire Strike Extreme/Ice Storm all show the correct clocks.

-


PASCAL-MXM & P-SERIES REFRESH

in Clevo

Posted

GTAV. To me that is a weird reading since the max on each card is 8196