Leaderboard

Popular Content

Showing content with the highest reputation on 06/19/17 in all areas

-

Due to a stupid accident on my part, I acquired a 980m with a chunk knocked out of the core. Not wanting to scrap a perfectly good top-end PCB for parts, I decided to replace the core. You can see the gouge in the core to the left of the TFC918.01W writing, near the left edge of the die.

First I had to get the dead core off:

With no sellers on eBay selling GM204 cores, my only option was to buy a full card. With no mobile cards under $500, I had to get a desktop card. And with this much effort involved in the repair, of course I got a 980 instead of a 970. Below is the dead 980 I got off eBay:

You can see that for some reason someone removed a bunch of components between the core and the PCI-E slot. I have no idea why anyone would do this. I tried the card and it gave error 43. The PCB bend seemed too slight to kill the card, so those missing components had to be the cause. GPUs can be dead because someone removed or installed a heatsink wrong and broke a corner of the core off, so buying cards for cores on eBay is a gamble. This core is not even scratched:

Preheating the card prior to high heat to pull the core:

And the core pulled. It survived the pull:

Next is the 980 core on the left, cleaned of solder. On the right is the original 980m core:

Next I need to reball the 980 core, and lastly put it on the card. I am waiting for the BGA stencil to arrive from China. It still has not cleared US customs: https://tools.usps.com/go/TrackConfirmAction?tLabels=LS022957368CN When that shows up, expect the core to be on the card in 1-2 days.

There are some potential issues with this mod besides me physically messing up.

I believe that starting with Maxwell, Nvidia began flashing the core configuration onto the cores, like Intel does with CPUID. I believe this because I found laser cuts on a GK104 for a 680m, but could not find any on two GM204 cores. In addition, Clyde figured out the device IDs on the 680m and K5000m. They are set by resistor values on the PCB. The 980m has the same resistor configuration as the 680m for the lowest nibble of the device ID (0x13D7), but all of the resistors are absent. Filling in these resistors does nothing. Resistors do exist for the 3 and D in the device ID. Flashing a 970m vBIOS on my 980m did not change the device ID or core configuration. If this data is not stored on the PCB through straps or in the vBIOS, then it must be stored on the GPU core.

So I expect the card with the 980 core to report its device ID as 0x13D0: the first 12 bits pulled from the PCB, and the last 4 from the core. 0x13D0 does not exist. I may be able to add it to the .inf, or I may have to change the ID on the board. With the final 0 of the ID hard-set by the core, the only ID I can change it to is 0x13C0, matching that of a desktop 980.

An additional issue is that the core may not fully enable. Clyde put a 680 core on a K5000m and never got it to unlock to 1536 CUDA cores. We never figured out why.

Lastly, there was very tough glue holding the 980m core on. When removing this glue I scraped some of the memory PCB traces. I checked with a multimeter and these traces are still intact, but if they are significantly damaged this could be a problem for memory stability. I think they are OK though, just exposed.

Due to Clyde's lack of success in getting his 680 core to fully unlock, I am concerned I might not get 2048 cores. If I don't, I should at least still have a very good chip. Desktop chips are better binned than mobile chips (most 980s are over 80% ASIC quality, while most 980ms are below 70%). In addition, this 980 is a Galax 980 Hall of Fame, which are supposedly binned from among the 980 chips. A 90%+ ASIC would be great to have. The mid-60s chips we get in the 980m suck tons of power.

I want to give special thanks to Mr. Fox. This card was originally his. He sent me one card to mod and one to repair. I repaired the broken one and broke the working one. The broken one is the one I've been modding.
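The expected device ID can be worked out with simple bit masking. This is a minimal sketch that assumes the hypothesis above holds (upper 12 bits come from the PCB straps, the lowest nibble comes from the core); the function name `compose_device_id` is made up for illustration and is not part of any Nvidia tool.

```python
def compose_device_id(pcb_strap_id: int, core_nibble: int) -> int:
    """Combine the upper 12 bits set on the PCB with the low 4 bits set by the core.

    Assumption from the post: the PCB straps only control bits 4-15,
    and the core supplies bits 0-3.
    """
    return (pcb_strap_id & 0xFFF0) | (core_nibble & 0x000F)


# 980m board (0x13D7) fitted with a desktop 980 core whose low nibble is 0
print(hex(compose_device_id(0x13D7, 0x0)))

# Same core after changing the board straps from 0x13D_ to 0x13C_
print(hex(compose_device_id(0x13C7, 0x0)))
```

Under that assumption, the first call gives the nonexistent 0x13D0 and the second gives 0x13C0, the desktop 980 ID.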

-

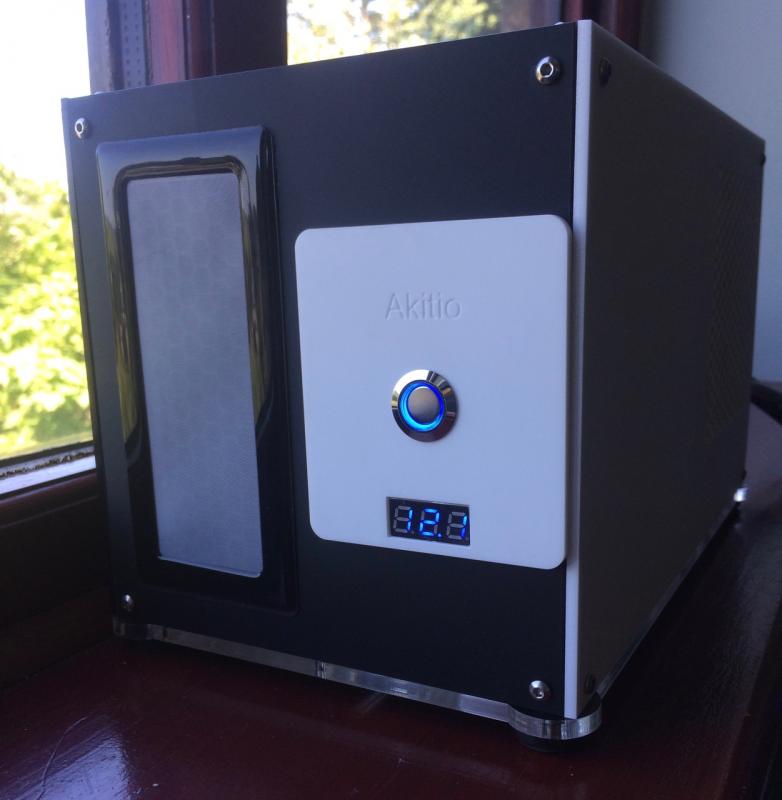

A little while ago I bought an Akitio 2 to play with for my Mac Mini. I did not like the idea of chopping up the original enclosure, and I was also not happy with the idea of a separate power supply and wires running all around the desk. So, as I run my own one-man laser cutting business, I decided to make my own design. I wanted to develop something that met the following criteria:

1. No cutting of the original Akitio Thunder box, but re-using everything that I could from it in such a way that the Akitio could be re-assembled into 'as new' condition if I ever wanted to sell it;
2. On-board power supply to power everything (i.e. one mains lead in);
3. Switch at the front;
4. Voltage indicator at the front to check the stability of the 12 V line;
5. Lots of ventilation for the GPU;
6. Filtered input air;
7. Stylish look (shouldn't scream 'home made');
8. Potential for expanding the design to take the longest GPUs on the market.

I won't bore you with all the development side in this first post (but will add the detail if you'd like it), but I did go through a lot of perspex before I settled on the first proper prototype I am showing in this post. Some sheets were wasted through simply not getting measurements right, others through fundamental design changes to get maximum strength from minimum materials cost.

I'll explain more after the pictures, but here is the enclosure in its current state. I will have to split this post due to the 1MB upload limit per post. In this first post: front view with engraved detail, on/off switch and voltmeter:

-

As you'll have seen from the first post, I went for a honeycomb ventilation pattern. The reason: this hexagonal pattern gives maximum open area for minimum strength loss. The inlets and outlets should be filtered, so I have used DEMCiflex filters on the front and GPU side (and will also be buying them for the roof outlet and the PSU fan inlet).

The mix of matt white and black sort of evolved from the parts I had to hand, and these parts are all 3mm thick. I put white onto black at the switch area both for style points and for extra reinforcement. 3mm is, in my view, not a structural-strength product, and doubling up can compensate for that in stress areas.

And so, we come to the back of the case: I'll upload some more once I am allowed to! Anyway, you get the gist of it so far. The back of the case is also double thickness around the SFX power supply, to give it good strength to withstand plugging and unplugging of the mains lead.

Let me know your thoughts!

-

I am the proud owner of a new Clevo P775DM3 laptop with a 1080 GPU and a 6700K processor. As I am using the system mostly as a workstation (besides some gaming), I am a bit annoyed by the noisy fans, and for several projects I need Linux, so I set up a dual-boot configuration. It took some time to gather information on the hardware behavior, mostly from this forum, NotebookReview, and some others, so I hope it helps some people if I summarize my findings here. In particular, I am still not satisfied with the fan noise, so I am glad for any advice.

First of all: I like the system very much, and Linux also runs pretty smoothly. It is an excellent compromise as a desktop replacement, and the powerful hardware can easily handle a workstation load. I played around with the clocks only briefly, and can operate the CPU at 4.2 or 4.4 GHz with all cores in Prime95 AVX staying somewhat below 90 deg, so the cooling seems to work pretty well. My main concern, however, is to operate it in a silent mode when idle, and from that perspective it totally failed. Obviously this is not relevant for overclocking, but I assume not everyone is constantly running games with headphones on, so noisy fans are a major design flaw in my opinion. I have made some progress so far, but I am not happy with the result yet.

I tried to reverse engineer the fan behavior as far as I could. My conclusions are:

- The settings Quiet / Multimedia / Performance in the ClevoControlCenter have little influence. They mostly apply different default settings. The main difference is between the fan modes (Full, Overclock, Automatic, Custom).
- The Overclock and Automatic modes seem to apply a fan curve that is defined in the embedded controller (EC), which is exactly the way I would like it to be. The difference between Overclock and Automatic is mainly a slower hysteresis (low-pass filter) in the control logic, so Automatic does not spin up fast at load spikes. In addition, the fan settings of the Automatic mode at a given temperature are somewhat lower than in Overclock mode, in particular at low temperatures. At high temperatures, both get very loud.
- The system has two fans: the CPU temperature regulates the left one, the GPU temperature regulates the right one. In both cases (Automatic and Overclock), the system gets really loud starting from around 50 deg.
- The Custom mode lets me define a start and stop temperature for the fan, but essentially it is not working as intended. It does indeed stop the fan completely at low temperatures, but the start and stop temperatures are related (but not identical) to what I set. When I define a fan stop below 60 deg and fan active at 70 deg (centigrade), the fan starts to spin at around 55 deg, cools the hardware down to around 48 deg, then turns off again until the hardware has reached 55 deg again. Because the hardware reaches 55 deg in little time with the fans off, this is not a reasonable mode of operation, in particular because the fan seems to spin up fully for a short time when it kicks in.
- After a reboot or suspend/resume, the fans revert to Overclock mode, even if I set them to Automatic before. I would consider that a bug in the ControlCenter.
- When the system runs on battery, it seems to use yet another fan curve, which is really silent, and it dramatically cuts the clocks to around 2.4 GHz for the CPU. (In particular, it seems to me it does not cut the CPU clocks itself, but lowers the power limit.) Anyway, that fan curve might actually work well (the system is silent even at 50 deg GPU temperature, and the fans spin up at really high temperatures), but I have no idea how to activate it when running on the PSU.

I also observed some throttling that was reported by PREMA before. When the GPU is used, the CPU clocks are throttled. Somehow, this only happens on Windows. On Linux, the CPU will constantly stay at full clocks if both CPU and GPU are under load. I don't know how the throttling is implemented.
Perhaps the Linux pstate kernel driver is interfering with it. There is also some throttling when the GPU gets warmer (starting way before 90 deg). Perhaps that is why the standard fan curve tries to keep the card below 50 deg (see below).

Running idle, my system settles at around 35-40 deg CPU temperature and 50 deg GPU temperature (at 20 deg ambient). I am actually wondering why the GPU gets so hot. Pascal is very efficient, and in idle mode I would not expect it to draw much power. I would be interested in other people's idle temperatures. I'll probably repaste the GPU when I find some time, but currently the warm GPU is actually convenient for tuning the system. Anyway, this 50 deg GPU makes the fan always spin fast (at around 3k RPM), so the system is constantly loud. (For reading the fan RPMs: the hwinfo64 tool can read them for some Clevo models, see https://www.hwinfo.com/forum/Thread-Solved-Monitor-CPU-and-GPU-fan-RPM-on-Clevo-laptops?page=9.)

So the fans are controlled via the EC; there seems to be no direct control of the fans from software. One has to talk to the EC. The common tools (MSI Afterburner, fanspeed, hwinfo, etc.) fail for this Clevo model. If I get it right, the PREMA BIOS also contains an updated EC firmware with an improved (slower at low temp?) fan curve, but that is not available yet, and anyway I will have to wait for a public version without a premium partner.

So I am wondering what to do now. I couldn't find much info on the actual firmware content, or on how to modify the fan curves. The ideal would be the possibility to set the fan curve via software, but I don't know if this is possible at all. There is a ClevoECView application, leaked from Clevo some years ago, that gives some insight, and someone built a Linux tool to manipulate the EC (https://github.com/SkyLandTW/clevo-indicator).
I have extended that tool for my model, such that I can now set hardcoded fan speeds for GPU and CPU and read both fans' RPM and the CPU temperature (https://github.com/davidrohr/clevo-indicator - naturally, I will not take any responsibility if this breaks a system!). What I am still missing is where in the EC memory I could get the GPU fan duty cycle or the GPU temperature. Checking the EC dumps at different fan settings makes me think it is simply not available. What is a bit weird: under Windows it seems to me the EC exposes the GPU fan duty cycle at 0xCF, while under Linux the value is constant. I'd appreciate it if anyone has an idea.

Setting the fans to 15% - 30% duty cycle makes the system quiet to completely silent, increasing the temperatures to 55-65 deg at idle, which I consider fair. I have not yet found out how to activate one of the fan profiles by talking to the EC. In particular, I would be interested in activating the Automatic or the on-battery profile. At least there is a way to go back to the Overclock profile: pressing fn-1 once after fixing the clocks reverts it. I am assuming that fn-1 actually fixes the clocks in the same way I am doing, so the EC assumes I am already in full fan mode and thus goes back to Overclock mode.

So my current setup is that I pin the fans to a slow speed for normal operation, and I revert to Overclock mode for computing / gaming. But I don't really like this setup. It is both dangerous and inconvenient. I was thinking of implementing a background daemon or a kernel driver to maintain the fan curve manually (the utility on GitHub which I started from actually already provides such a mode), but that is not the ideal solution. It would be a problem if the background daemon exits or crashes, and I am also not sure about the stability of how I set the fan speed. I would really prefer a proper fan curve in the EC. That is the silver bullet.
It is an absolute pity that such great hardware is let down by a useless EC firmware.

Finally, some info on running Linux for those who are interested: most of the hardware runs out of the box. The special keys on the keyboard are a bit of a problem, but there is a Linux laptop company called TUXEDO Computers which offers Linux-based Clevo laptops, and they have implemented a kernel module for it which is easy to compile (it can be found in their forum: www.linux-onlineshop.de - it is called tuxedo-wmi). What annoys me a bit is that I can query very little hardware info. lm-sensors only detects the simple Intel coretemp driver for CPU temperatures, and it gets the temperature of the wifi module. I would love any advice on how to query e.g. the CPU voltage, but it seems there is no Linux kernel module yet for the monitoring chip used in the Clevo system (at least there is one unsupported SuperIO chip).
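The on/off behaviour observed in Custom mode earlier in this post (fan starts at around 55 deg, stops at around 48 deg) is a plain two-threshold hysteresis loop. A minimal sketch of that logic, only to illustrate why the fan keeps cycling at idle; this is not the EC's actual code, and the function name and default thresholds are just the observed values plugged into a hypothetical helper:

```python
def fan_should_run(temp_c: float, was_running: bool,
                   start_c: float = 55.0, stop_c: float = 48.0) -> bool:
    """Two-threshold hysteresis: switch on at start_c, off at stop_c.

    Between the two thresholds the previous state is kept.
    The defaults are the temperatures observed in the post, not EC settings.
    """
    if temp_c >= start_c:
        return True
    if temp_c <= stop_c:
        return False
    return was_running


# Replay an idle warm-up / cool-down cycle
running = False
states = []
for temp in [45, 50, 55, 52, 49, 48, 50]:
    running = fan_should_run(temp, running)
    states.append(running)
print(states)
```

With only 7 deg between the two thresholds and a GPU that idles near 50 deg, the loop flips state every time the hardware drifts across the band, which matches the constant start/stop cycle described above.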

-

Some more info on the automatic Linux fan control: there is something strange with the EC about querying GPU data. CPU temperature is always stored in 0x07 and CPU fan duty in 0xCE. 0xCF stores the GPU fan duty cycle, but only after starting the Hotkey utility under Windows, which seems to bring the EC into a different state. Booting straight into Linux, 0xCF seems to store a constant random value. As I could not get this running under Linux, I query the GPU temperature via nvidia-smi and the CPU temperature via the EC. Once in a while, the EC reports a very low CPU temperature (< 15 deg). These values are filtered out.

The fan control application is implemented as a simple user-space process. A kernel module might be better, but would be more effort and I am too lazy. I assign the FIFO scheduler to the application, so in theory it should not be interrupted, and there is a background process that restarts it if it crashes, plus a check that nvidia-smi actually delivers new temperature data. In case of failure, a high fan speed is assigned as a safety measure.

It has been running for 2 weeks now without issues, so I am rather satisfied and think this is stable enough for my purpose, until I come across a way to do this inside the EC. That would have the advantage of working under Windows as well. The application could be ported easily, but I would be afraid of interference with the Hotkey utility - or perhaps I should just toss Hotkey completely.
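The decision logic described above can be sketched in a few lines. This is a simplified illustration, not the code from the clevo-indicator fork: the curve values, the 100% failsafe duty and all names are assumptions, and actually reading from or writing to the EC is left out entirely.

```python
FAILSAFE_DUTY = 100      # percent; assumed value for the "high fan speed" safety fallback
MIN_PLAUSIBLE_TEMP = 15  # deg C; EC readings below this are treated as glitches

# Hypothetical step curve: (temperature threshold in deg C, duty in percent), ascending
CURVE = [(50, 20), (60, 30), (70, 50), (80, 75), (90, 100)]


def duty_for(temp_c):
    """Return the duty of the highest curve step reached (lowest step as the floor)."""
    duty = CURVE[0][1]
    for threshold, step_duty in CURVE:
        if temp_c >= threshold:
            duty = step_duty
    return duty


def next_duties(cpu_temp, gpu_temp, last_good_cpu_temp):
    """Return (cpu_fan_duty, gpu_fan_duty, cpu_temp_used) for one control step.

    gpu_temp is None when nvidia-smi failed or delivered no fresh data.
    """
    if gpu_temp is None:
        # Failure case: assign a high fan speed to both fans as a safety measure
        return FAILSAFE_DUTY, FAILSAFE_DUTY, last_good_cpu_temp
    if cpu_temp < MIN_PLAUSIBLE_TEMP:
        # Filter out the occasional bogus low EC reading
        cpu_temp = last_good_cpu_temp
    return duty_for(cpu_temp), duty_for(gpu_temp), cpu_temp


print(next_duties(10, 55, 40))    # bogus EC reading is replaced by the last good one
print(next_duties(72, None, 40))  # no GPU data, so both fans go to the failsafe duty
print(next_duties(72, 61, 40))    # normal step
```

Keeping the decision in a pure function like this makes it easy to test separately from the EC access, which is the part that can actually break a system.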

-

Second follow-up: I delidded the CPU and repasted CPU and GPU with Thermal Grizzly Conductonaut. Unfortunately, it doesn't help a lot with idle temperatures, but temperatures under load decreased tremendously. The CPU went down from 80 to 60 deg running Prime95 AVX.

-

Some follow-up:

- CPU voltage on Linux can be queried via dmidecode (which reads it from the firmware's SMBIOS/DMI tables rather than live from the CPU), but apparently it only shows the voltage for the highest P-state.
- Alternatively, the CPU-X application https://github.com/X0rg/CPU-X can show the current voltage.
- Concerning the fan speed: I have implemented an automatic fan control as a simple Linux service that fetches GPU temperatures from nvidia-smi (the EC is not reliably reporting GPU fan RPMs and GPU temperature for me). It is a bit worse than having the EC do that autonomously, but anyway, the system is now silent when not fully loaded.
- Next, I'll repaste the GPU (and also the CPU) to hopefully get the GPU temperatures down.
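Fetching the GPU temperature from nvidia-smi can look roughly like the sketch below. It is not the code of the actual service; the function names are made up, and it assumes a single GPU and the standard `--query-gpu=temperature.gpu` query. Returning `None` on any failure lets the caller fall back to a safe fan speed.

```python
import subprocess

NVIDIA_SMI_CMD = [
    "nvidia-smi",
    "--query-gpu=temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_gpu_temp(output):
    """Parse the first line of nvidia-smi output into an int, or None if unusable."""
    lines = output.strip().splitlines()
    if not lines:
        return None
    try:
        return int(lines[0].strip())
    except ValueError:
        return None


def read_gpu_temp(timeout_s=2.0):
    """Run nvidia-smi once; return the temperature in deg C, or None on any failure."""
    try:
        result = subprocess.run(
            NVIDIA_SMI_CMD,
            capture_output=True,
            text=True,
            timeout=timeout_s,
            check=True,
        )
    except (OSError, subprocess.SubprocessError):
        # Binary missing, non-zero exit code, or the call timed out
        return None
    return parse_gpu_temp(result.stdout)
```

The timeout matters here: if nvidia-smi hangs, a control loop without one would stop updating the fans.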