Posts posted by badbadbad

-

Is this going to be a side project, or do you plan on opening it up for general public use? If you choose the latter, maybe you could crowdfund to bring costs down. I would love to back a project like that and can help spread the word for you!

Second, I was wondering if the MXM model could simply use an 8-pin Dell DA-2 240W adapter to power a very compact setup. Other eGPU adapter manufacturers have started doing this, since the AC adapter is readily available and GPUs starting with the 750Ti have been very power efficient. In fact, the uber GTX880 is rumored to require only ~195W (like a GTX680), which would work with an adapter like the EXP GDCv6 without any external PSU. The only time I can justify adding a PSU is for SLI, but you've already mentioned another adapter for that.
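As a rough sanity check on those numbers, here is a minimal Python sketch of a single-adapter power budget. The 240W and ~195W figures come from the post above; the 10% safety margin and the function names are my own assumptions, not anything from Dell, NVIDIA or the adapter makers.

```python
# Rough power-budget check for a single-adapter eGPU setup.
# Assumption (mine, not from any spec sheet): keep a safety margin below
# the adapter's rating for transient spikes and the eGPU board itself.


def fits_adapter(gpu_watts: float, adapter_watts: float, margin: float = 0.10) -> bool:
    """Return True if the GPU's rated draw stays under the adapter rating minus a margin."""
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    usable_watts = adapter_watts * (1 - margin)
    return gpu_watts <= usable_watts


def headroom(gpu_watts: float, adapter_watts: float) -> float:
    """Watts left over after the GPU's rated draw (no margin applied)."""
    return adapter_watts - gpu_watts


# Figures from the post: 240W DA-2 adapter, ~195W rumored GTX880.
print(fits_adapter(195, 240))  # True, since 195 <= 216
print(headroom(195, 240))      # 45
```

Rated TDP is not the same as peak draw, so a real card could still spike past this budget.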

EDIT: Might I also add that I like how you are packaging this to work with OSX primarily, then only mentioning Hackintosh support (as a hint at Windows support, of course). We all know this will work natively on Windows, but hopefully marketing it as an OSX add-on (the rMBP "Graphics Upgrader!") will take Intel's prying eyes off this potentially promising product.

Foreseeable problems like hot-unplugging errors also technically won't be Microsoft's problem, so it might be worth a shot.

-

Yeah, you've got to be careful about which adapters are supported. I once commented on how RAID-0 would not work in a dual mSATA-to-SATA adapter, and I almost went down that path myself. I guess it's option 1 or 3 then.

About the EC slot, did anyone manage to follow up on Kenglish's comment about boosting the PCH?

I do not know if this BCLK overclocking also overclocks the PCH PCI-E for eGPUs. It would be nice if someone could test PCI-E bandwidth in SANDRA with a BCLK overclock to verify if it does or not. If not I can provide an additional mod which definitely will overclock the PCH PCI-E.

I can try to test this out with my stock CPU while I wait for my quad-core to arrive, but I might need some guidance if I have to troubleshoot.
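For reference while testing, here is a minimal Python sketch of the theoretical link bandwidth SANDRA would be measured against. The transfer rates and line codes are the standard PCIe figures. The `clock_scale` knob is a hypothetical parameter that models the case where a BCLK overclock really does carry over to the PCH PCI-E, which is exactly what hasn't been confirmed yet.

```python
# Per-lane PCIe parameters: (transfer rate in GT/s, line-code efficiency).
# Gen1/Gen2 use 8b/10b encoding; Gen3 uses 128b/130b.
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def link_bandwidth_mb_s(gen: int, lanes: int, clock_scale: float = 1.0) -> float:
    """Approximate one-direction payload bandwidth in MB/s.

    clock_scale is hypothetical: 1.0 means stock clocks, 1.05 means the
    PCIe reference clock is assumed to run 5% fast. Protocol overhead
    beyond line coding (packet headers, flow control) is ignored.
    """
    rate_gt_s, efficiency = PCIE_GENS[gen]
    bits_per_s = rate_gt_s * 1e9 * efficiency * lanes * clock_scale
    return bits_per_s / 8 / 1e6


if __name__ == "__main__":
    stock = link_bandwidth_mb_s(gen=2, lanes=1)
    oc = link_bandwidth_mb_s(gen=2, lanes=1, clock_scale=105 / 100)
    print(f"x1 Gen2 stock: {stock:.1f} MB/s")  # 500.0
    print(f"x1 Gen2 +5%:   {oc:.1f} MB/s")     # 525.0
```

If a BCLK overclock shows no bandwidth gain in SANDRA, the PCH link clock is presumably independent of BCLK.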

-

Cool. I love the options. Might be stuck with option 1 for a while until the GTX880 becomes readily available. But if I have enough for another EVO and the GPU isn't within reach yet, I might just go with option 3.

Regarding option 2, if I go with the PM851 (the rebadged 840 Pros, I'm assuming), would I have to use an mSATA-to-SATA adapter? I'm just worried about adding more points of failure, since it'll be in RAID-0.

I can tolerate a little stretch in expenses if the result is more reliable. Is a 2x256GB RAID-0 really the better option, or will 2x500GB be just as reliable?

-

Actually, I was supposed to go that route and get two cheap SSDs to enjoy RAID-0 and save up for an eGPU. But since I won a Samsung SSD in a raffle, I was looking to invest in another 500GB to get a comfortable 1TB of storage.

Would a cheaper 512GB SSD be OK to pair with the Samsung one I have? Or will it be unreliable and have stability issues? I'd be spending as much as you did if I get another 500GB EVO, since it costs about the same as both of your drives.

-

That's great, buddy. But I already have a Samsung 840 EVO 500GB SSD (gift) and am planning to pair it with another one when I have the budget for it. The third mSATA would really just be for storage, so paying a premium for max storage is not really appealing to me.

Can you RAID-0 drives with different capacities?
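To make the question concrete, here is a minimal Python sketch of how a conventional RAID-0 stripe set handles mismatched drives. It assumes every member contributes the same amount of space (the smallest drive's size) and that blocks are striped round-robin. Some controllers may handle the leftover space on the larger drive differently, and the function names here are hypothetical.

```python
from __future__ import annotations


def raid0_usable_gb(sizes_gb: list[int]) -> int:
    """Conventional RAID-0: each member contributes only as much as the smallest drive."""
    if not sizes_gb:
        raise ValueError("need at least one drive")
    return len(sizes_gb) * min(sizes_gb)


def locate_block(logical_block: int, n_disks: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block to (disk index, block offset on that disk).

    Consecutive stripes of `stripe_blocks` blocks go to disk 0, 1, ..., n-1,
    then wrap around to disk 0 one stripe further down.
    """
    stripe_index, within_stripe = divmod(logical_block, stripe_blocks)
    disk = stripe_index % n_disks
    stripe_row = stripe_index // n_disks
    return disk, stripe_row * stripe_blocks + within_stripe


print(raid0_usable_gb([500, 512]))  # 1000: the extra 12GB on the 512GB drive is unused
print(locate_block(300, 2, 128))    # (0, 172)
```

So pairing a 500GB with a 512GB should work, as long as the controller accepts it; you just lose the small difference.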

-

enable an additional SATA port AND provide mSATA cache facility

Thanks, now I get it. Honestly, I have no need for an additional SATA port yet unless mSATA SSDs are priced much closer to HDDs. But I'd surely love to try it out one day.

Will follow up on the WiDi feature when I finally convince myself to upgrade to W8.1.

-

I agree with Tech Inferno Fan on checking out the list first. But in the end, your decision will revolve around what your needs really are.

Your older notebooks indicate you're primarily a gamer. You might want to consider a gaming laptop first so that you don't always need to bring the eGPU hardware and "set up camp" near a socket.

If you can survive with non-gaming specs on the go, then a workstation might be better, since you get similar display and sound quality plus better battery life and more ports. And you can still game heavily at home with an eGPU.

What will you use it for, if I may ask?

-

Will the Gen 3 cards ie. PE4L pci express v2 x16 be backwards compatible with pci express v1 x16?

Not so long ago, I got my T400 notebook running at x1.opt with a GTX460 and a PE4L 2.1b, so I'm guessing that answers most of your "old" laptop questions. There's a thread here that specifically discusses the method for the T400 (a Core 2 Duo, BTW).

forego it altogether and just get an awesome new laptop with sli or something.

This.

Most eGPU users here get the most out of the features already available in our notebooks. But if you're due for an upgrade anyway, why not take that route? It makes the tinkering a lot more worthwhile and can give quite impressive results.

My suggestion before buying a rig, though, is to factor in eGPU capability (EC slots, TB/TB2 ports). Being able to convert a powerful yet battery-saving workstation into a high-end gaming rig truly maximizes the power and versatility of a notebook.

Hope this helps.

-

Oculus Rift CV1. (Yes, it's aimed at desktops, but I'm looking into something portable as well.)

Since it's x1.2opt, I'm honestly hoping VR presence won't be ruined by upscaled settings or watered-down details.

-

Well, I merely suggested the WWAN to max everything out... simply because you can!

But otherwise, mobile hotspots are the way to go.

It'll be tough to say, since the highest multiplier achieved in the 2570p processor table so far is x35, and only for a few minutes.
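For context on what those multipliers mean in clock speed, the core clock is simply BCLK times the multiplier. Ivy Bridge's stock BCLK is 100 MHz. The 103 MHz value below is only an illustrative, assumed BCLK overclock, not a tested-stable figure for the 2570p.

```python
def core_clock_mhz(bclk_mhz: float, multiplier: int) -> float:
    """Core clock = base clock (BCLK) x CPU multiplier."""
    return bclk_mhz * multiplier


# 100 MHz is Ivy Bridge's stock BCLK; 103 MHz is a hypothetical mild overclock.
for bclk in (100.0, 103.0):
    for mult in (27, 34, 35):
        print(f"BCLK {bclk:.0f} MHz x{mult} -> {core_clock_mhz(bclk, mult):.0f} MHz")
```

This is why a BCLK tweak is attractive on locked chips: a few percent on BCLK stacks on top of whatever multiplier the CPU allows.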

I'm building my 2570p to be a decent mobile rig for when VR arrives.

-

Good news! It seems the new Maxwell GTX880 will draw about as much power as a GTX680!

Follow-up question: how exactly would flashing an 8470p BIOS improve our Extremebook? And why is a spare BIOS chip needed on standby?

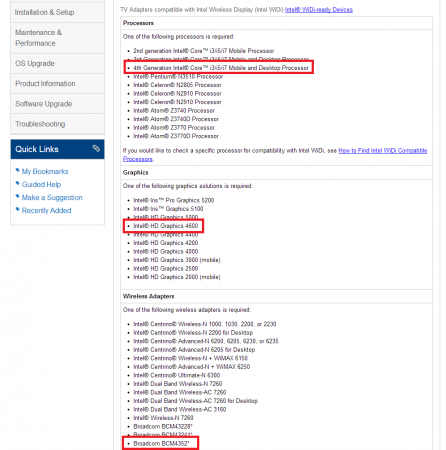

@Tech Inferno Fan, according to the official Intel website, the BCM4352 should be a compatible WiDi adapter even though it is not made by Intel.

Others have posted screenshots complaining of the same problem.

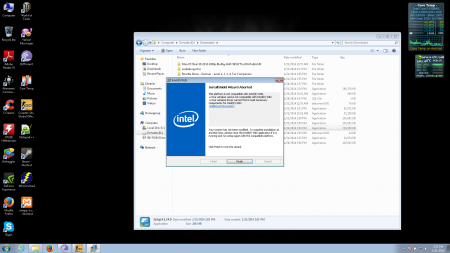

After much digging, I think I've found the answer to the WiDi problem: WiDi apparently needs the Windows 8.1 Broadcom drivers to work.

Can someone with Windows 8.1 and a BCM4352 confirm whether the WiDi drivers install? Or can I simply install the Windows 8.1 WiFi drivers on Windows 7 and install WiDi from there?

UPDATE: I can't get the W8.1 WiFi adapter drivers to install on W7.

-

@Yves Hi man! I'm planning a rig similar to yours, but I might stick with RAID-0 500GB EVOs. I don't need that much space, and I read in a spec chart somewhere that the 500GB has slightly better performance than the 1TB. Could be wrong, though.

Get a 90W (slim) adapter too so you don't scrimp on power. I think @jacobsson is selling his.

Get the BCM4352 just in case you want to Hackintosh with Clover like these guys. I would try it just for the heck of it.

Maybe a Maxwell GTX880 when it arrives. Going by the speculated specs, a draw of only 195W would be ideal for an EXP GDCv6 or PE4C 2.x setup.

Since you want to max out the beast, you might want to consider getting a WWAN card as well. Just because, well... you can.

-

Thanks, Tech Inferno Fan. I knew about the bandwidth limits, so I didn't mind a watered-down, upscaled experience. I was more concerned about latency causing a blurry, nauseating effect, hence the question.

While we don't have 100% eGPU performance yet, this notebook remains, for me, one of the most powerful portable devices available for gaming. Great bang for the buck!

-

Is the 5GT/s x1.2opt setup in our 2570p really viable for VR gaming? I just read this rant (Timothy Lottes: New Laptop + VR = Fail) by an NVIDIA guy about how mobile PCIe bandwidth and dGPU drivers increase latency, rendering his notebook useless for VR.
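One back-of-envelope way to see the latency concern. With Optimus driving the internal screen, each finished frame has to travel back over the PCIe link to the iGPU. This minimal Python sketch rests on several assumptions: uncompressed 32-bit frames, the 1440p resolution mentioned in this thread, an assumed 90 Hz refresh target, and ~500 MB/s for an x1 Gen2 link. The Optimus link compression discussed on this forum would lower the real figure. An HMD plugged straight into the eGPU would avoid this copy-back entirely.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame (4 bytes per pixel assumed)."""
    return width * height * bytes_per_pixel


def transfer_ms(n_bytes: int, link_mb_s: float) -> float:
    """Time to move n_bytes over a link of link_mb_s megabytes per second."""
    return n_bytes / (link_mb_s * 1e6) * 1000.0


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given refresh rate."""
    return 1000.0 / refresh_hz


frame = frame_bytes(2560, 1440)  # 1440p, as assumed in this thread
print(frame)                                                  # 14745600
print(f"{transfer_ms(frame, 500):.1f} ms to copy one frame")  # 29.5
print(f"{frame_budget_ms(90):.1f} ms per frame at 90 Hz")     # 11.1
```

If those assumptions held, copying an uncompressed frame back would take longer than the whole frame budget. That is why the display path matters as much as raw bandwidth.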

Will eGPUs suffer the same fate?

-

There's some options for battery life in the BCM4352 adapter settings->properties->Advanced. wifi driver itself. Eg: power output, minimum power consumption. Can play around with those. There's other important system wide battery life tweaks.

Thanks, Tech Inferno Fan. I did read about the tweaks before posting. What I meant was whether there are other tweaks for the WiFi card besides those listed on the first page (like enabling WiDi or something like that). I'm holding off on most of the power/battery tweaks until I get my RAM and processor.

@pandaleo, good to know that the BGA-to-PGA chips work quite well. At least the concern about poorer performance due to the added thickness of the custom pins has been resolved. The question now is whether the vendors were right in claiming that the BGA-to-PGA chips are more heat efficient. Do the numbers show that?

I personally still feel that how tightly the processor sits against the mobo is the determining factor for performance. The copper shim experiment and now the thicker BGA-to-PGA chips both hint at that.

-

Hello again. I finally got my BCM4352 WiFi adapter up and running. Just wondering: are there any specific battery tweaks for the WiFi adapter besides the Gen1 port?

And as a follow-up, is there any way to spoof our device into running WiDi? I've got everything but the driver working for WiDi. lol

-

Thanks for the feedback, Tech Inferno Fan! I didn't look closely at the thickness of the drives. So 9.5mm it is.

As for the WD Dual Drive, power consumption is rated at 0.9W (idle/standby) / 1.9W (read/write) and hardware is available for about $200+ online.

Don't your SSD (0.4W) and HDD (0.8W) total 1.2W at idle? That might be a cheaper and better solution, while still leaving space for an ODD.
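Putting the quoted idle ratings side by side in a minimal Python sketch. Only the three wattages come from the posts above. The 8-hour idle period is a hypothetical figure for illustration, and read/write power is ignored.

```python
SSD_IDLE_W = 0.4       # SSD idle rating quoted above
HDD_IDLE_W = 0.8       # HDD idle rating quoted above
WD_DUAL_IDLE_W = 0.9   # WD Dual Drive idle/standby rating quoted above


def idle_delta_w() -> float:
    """Extra idle watts of the SSD + HDD pair versus the WD Dual Drive."""
    return (SSD_IDLE_W + HDD_IDLE_W) - WD_DUAL_IDLE_W


def extra_energy_wh(hours: float) -> float:
    """Extra energy drawn over `hours` of idling (hypothetical duration)."""
    return idle_delta_w() * hours


print(f"SSD + HDD idle: {SSD_IDLE_W + HDD_IDLE_W:.1f} W")  # 1.2 W
print(f"Difference vs Dual Drive: {idle_delta_w():.1f} W")  # 0.3 W
print(f"Over 8 idle hours: {extra_energy_wh(8):.1f} Wh")    # 2.4 Wh
```

A 0.3W gap is small next to the rest of the system's idle draw, so price and capacity probably matter more here.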

Oh, how I wish the DEVSLP feature of the new Samsung 850 Pro were supported by Ivy Bridge. I would have considered getting that too.

-

Hi guys,

I just wanted to ask a few things again.

1.) Are our Extremebooks compatible with the newer hybrid drives, like SSHDs or WD's Dual Drive?

It seems that getting larger storage with SSD-like speed for the OS would be cheaper with a Dual Drive than with a pure 1TB SSD.

2.) Does our BIOS restrict the max capacity of HDDs? Or, if larger HDDs become available, can we exceed the 2TB HDDs available today?

I'm also thinking of getting a 2TB WD Green in the caddy, if that is compatible.

What do you guys think?
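On question 2, the well-known 2TB ceiling comes from the MBR partition table, which stores sector addresses as 32-bit numbers. GPT uses 64-bit addresses. This minimal Python sketch just works out those limits. I can't say what the 2570p BIOS itself enforces. What I do know is that a data-only drive in the caddy can use GPT on Windows 7, even when the boot drive stays on MBR.

```python
def max_addressable_bytes(lba_bits: int, sector_bytes: int = 512) -> int:
    """Largest capacity a partition scheme with lba_bits-wide sector addresses can describe."""
    return (2 ** lba_bits) * sector_bytes


MBR_LIMIT = max_addressable_bytes(32)           # MBR: 32-bit LBAs, 512-byte sectors
MBR_4K_LIMIT = max_addressable_bytes(32, 4096)  # MBR with 4 KiB logical sectors
GPT_LIMIT = max_addressable_bytes(64)           # GPT: 64-bit LBAs

print(MBR_LIMIT)                       # 2199023255552 bytes
print(f"{MBR_LIMIT / 1e12:.2f} TB")    # 2.20 TB (decimal), i.e. exactly 2 TiB
print(MBR_4K_LIMIT // 2**40, "TiB")    # 16 TiB
```

In practice, that means a drive above ~2.2TB needs GPT (or 4K logical sectors) to be used in full.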

-

If these PCIe expansion backplanes are compatible with current Thunderbolt-PCIe 3.0 cards, will they be "hunted down" due to licensing problems with Intel?

If not, then we should really get Bplus Technology to consider making this. It would be a very portable solution, and a better one than the current Thunderbolt eGPU setups.

Best of all, we won't be needing a PCIe 4.0 version since Thunderbolt-3@40GT/s will already have enough bandwidth for 100% GPU performance.

-

Prior to going to a 12" form factor way back with the 2510P I was using 14" notebooks. A size I consider as a great balance between portability and usability.

Saying that, an i7-quad upgraded 2570P is still unbeaten in the < 14" size for performance.

I must admit that 14" is also my ideal size for a portable setup that's good for gaming.

But since I am looking forward to a 720p upscaled gaming experience in a CV1 Oculus Rift (1440p), the small yet powerful 2570p seems to be the most portable device for the job. The low 768p screen doesn't matter much because of the HMD. @jacobsson's GTX670 mini eGPU rig makes things even easier to transport. Hoping for even better hardware with Maxwell.

Now anybody lamenting a 2570P using a Ivy Bridge rather than Haswell CPU just needs to see they are missing on a bunch of heat without gaining much if anything : http://forum.techinferno.com/throttlestop-realtemp-discussion/6958-haswell-step-backwards-ivy-bridge-i-have-some-shocking-tdp-results.html#post95181 . Pay careful attention to Mr.Fox's comment in that thread . It's only the Haswell ULT chipset with ULV CPUs (denoted by a U on the end), such as i5-4300U, that run cool and see great battery life. The sacrifice there is performance. They deliver only slightly more than half the performance of a i7-quad upgraded 2570P.

Actually, I am wishing more for the unlocked multipliers on the 2570p 'coz I'm power hungry. (Will try the BCLK tweak when I get my processor.)

I'd suggest just grab a PGA i7-3740QM, i7-3720QM or i7-3630QM for best performance in the order; or reverse for best pricing.

Actually, based on your table, it seems like a 3820QM averages the highest in performance regardless of build quality.

I might be wasting a bit by risking this, but at least I know I didn't hold back in performance (in theory).

Oh.. and I found out today that a 14" Dell E6430 has x2 2.0 eGPU electrical capability via two mPCIe slots. It accepts a Ivy Bridge CPU, has a 900P LCD, eSATAp port. Looks may not be to everyone's taste.

That's great with the PE4C! But two mPCIe slots would probably need a really good case mod, or take longer to set up than an EC slot.

-

@Tech Inferno Fan It's sad that we'll be seeing less of you on this thread now, both you and @jacobsson. You guys really sold us on this unit!

But since nothing is ever permanent, thanks for the great job tweaking and improving our 2570p!

Hope we cross paths again in a future notebook (hopefully a TB3 one).

On a lighter note, I received this message from the seller of the BGA-to-PGA processor.

Dear friend,If you has a high requirement on the CPU.

I suggest you buy a Original PGA CPU.

Original CPU is more stable than BGA TO BGA. (<-Probably meant PGA here)

Thank you

It seems the Throttlestop experiment turned up unfavorable results for the BGA-to-PGA chip's performance.

The seller didn't disclose the results and instead declared that OEM is still a better choice than custom.

What do you guys think? Has anyone else tried a BGA-to-PGA processor?

-

BGA to PGA?, could someone explain a bit more about this? It sounds very interesting!

EDIT: Oh so BGA is designed for integration, while PGA utilize pins for socket?

So, "BGA to PGA" would be a CPU originally designed for integration (possibly lower power consumption?) that have been converted (added to) to be used in sockets?

If my conclusions are right, does it mean that there are two kinds of socketed CPU's then:

1. PGA

2. BGA to PGA adapted CPU's

Correct. They used a machine to add pins, converting a Ball Grid Array (soldered) into a Pin Grid Array (socketed).

The seller claims BGA is better, maybe because soldered chips have closer contact with the board than socketed PGA chips do.

We did see slight improvements when the sandwiched copper shims added a little pressure on the chips.

My only concern is whether the attached pins are a little longer than the ones on OEM PGA chips. Wouldn't performance decrease with the higher resistance?
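To put the pin-length worry in formula terms: for a simple conductor with resistivity $\rho$, length $L$ and cross-sectional area $A$, resistance grows linearly with length. This assumes the added pins are made of the same material and have the same cross-section as OEM pins, which nobody here has measured. It also says nothing about contact resistance at the socket, or about whether a milliohm-scale change would affect performance at all. That is what the Throttlestop tests would have to show.

$$
R = \frac{\rho L}{A}
\qquad\Rightarrow\qquad
\frac{R_{\text{custom}}}{R_{\text{OEM}}} = \frac{L_{\text{custom}}}{L_{\text{OEM}}}
\quad (\rho,\ A \text{ fixed})
$$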

I'm just not too sold on the seller's idea that the BGA-turned-PGA chip is more heat efficient and performs better. I could be proven wrong after Throttlestop testing.

@hatoblue, we are probably looking at the same vendor, but mine's on AliExpress. The vendor, Daisy, was kind enough to answer some of my questions. Hoping she agrees to do the Throttlestop x27 test. Maybe you could PM her to ask for the x27 test as well?

-

I contacted a supplier about a custom BGA-to-PGA 3740QM chip. They seem willing to test a 3740QM with Throttlestop. Should they do x34, or is x27 a better measure?

By the way, thanks Tech Inferno Fan for the info on x2.2 compatibility of the PE4C! I guess I'll be sticking with x1.2opt for a much longer time then.

The GTX 670 minis make a great portable option but I'm really hoping that Maxwell can bring us more powerful, short, 1-slot cards.

-

Out of curiosity, is an x2.2 implementation possible on the 2570p? The PE4C 2.0 adapter seems like a promising piece of technology, and the option of attaching a PM3N to the buggy WLAN port makes me suspect that x2.2 might just work.

Any thoughts guys?

Thunderbolt 2 eGPU [OSX] [Windows 8.1] MXM or PCIe ($350 - $480 + Shipping)

in DIY e-GPU Projects

Agree with your hardware plans and two thumbs up for on-the-fly custom kext patching.

I was working on the premise that when an eGPU adapter for Windows (c/o Intel Thunderbolt technology) is sold to an end user, the headache and blame are shared with the OS maker, not just the adapter maker. It's not supposed to be Microsoft's fault that the OS will BSOD if an unaware user hot-unplugs third-party hardware. I'm not saying this happens all the time, but believe me, it does happen. Based on the opinions of some of my friends at MSI (who have played around with their eGPU prototypes), eGPU adapters can't simply be released without a safety net protecting the OS maker (Microsoft). That is probably why, when an adapter manufacturer advertises direct GPU compatibility for their product (e.g. the TH05), they get a cease-and-desist letter from Intel (even though Intel benefits from the payment for the TB license).

Now for my logic: how about we use another OS to take the hit for the eGPU problem? If Apple doesn't object to "unstable" third-party hardware like eGPUs or Modbooks, then we can produce the Thunderbolt product without Apple telling Intel to pull the hardware (which is what I suspect Microsoft does). We can package it as a pure OSX product so that Microsoft is spared this pressure when consumers add an eGPU to their notebooks. "It is not Windows-compatible," as they might say.

Of course, we know this isn't true, since Apple hardware is most certainly compatible with the hardware in our Windows machines. So stating that this "OSX exclusive" product is compatible with Hackintosh hardware is our hint to consumers that it may work with Windows.

tl;dr

Making an "OSX exclusive" TB eGPU adapter will (hopefully) keep Microsoft from complaining to Intel about the product's end-user problems being blamed on the OS. This lame "OSX-only" excuse will let us sell the adapter without getting a cease-and-desist letter from Intel (as is speculated to be requested by Microsoft).

Hope this clears things up.