mrdatamx


Posts posted by mrdatamx

-


On 12/08/2016 at 5:42 PM, balonik said:

I bought an EXP GDC V8 recently; how can I tell if it's 8.0 or 8.3?

The version is printed on the circuit board of my adapter. It is visible through the plastic enclosure when I look at it from the top, near the PCIe slot.

-

I found some spare time to set up an eGPU on my wife's T400. Here is a brief write-up.

System:

Lenovo T400

Intel Core2Duo P9600

8 GB DDR3

eGPU:

EXP GDC v8.3 ExpressCard

nVidia GTX 970

350w 80+ Bronze PSU

Steps:

- Booted into Windows and downloaded the most recent drivers as well as DDU.

- Executed DDU and set it to reboot in safe mode. Rebooted.

- Removed all previously installed video drivers using DDU and shut down.

- Plugged in eGPU in ExpressCard slot. Unable to boot. Initial boot sequence looping.

- Unplugged eGPU and tried to boot.

- Logged into Windows and set the system to sleep.

- Plugged in the eGPU and woke up the system.

- eGPU was recognised. Installed drivers and rebooted.

- Device manager showed error code 12.

- Since I am not using Setup 1.x, I proceeded to perform the DSDT substitution according to the guide.

- I followed the listed steps. The procedure produced no errors, just some warnings, but the generated file did not work: Windows crashed when trying to load the DSDT tables.

- I had to regenerate the same .aml file a couple of times, trying the "iasl" binaries from both referenced packages. What seemed to fix the issue was executing "iasl dsdt.asl" instead of "iasl -oa dsdt.asl".

- Once Windows was able to boot correctly I verified the new "Large Memory" was activated.

- Suspended the system.

- Plugged in the eGPU and woke the system up.

- Windows woke up, recognised the eGPU and enabled it.

- Installed 3DMark06. Tweaked some driver parameters using the Nvidia Control Panel.

- Tested the setup.

- Done

Using 3DMark06 and an external LCD, this system scored 12861.

Now she is able to game with a decent experience using her everyday laptop.

-

2 minutes ago, quake said:

Anyone know a seller for v8.3e? I have seen a couple of posts and videos that use it, but checking chinese sites I can only find v8.0. Is there even a difference between v8.0 and v8.3?

I got mine from two different stores. Both sell the v8.3; I confirmed that before ordering.

-


On 02/08/2016 at 10:47 AM, jowos said:

So while you played Doom, were you using an external monitor plugged on eGPU? Or on the internal monitor? I would be very curious to know your configuration if it's the latter.


With this setup on Windows I have played Doom both on the external monitor only and on the internal LCD only. I found the texture compression to be severe on the internal LCD, so I prefer the external monitor for Doom.

Now on the performance side.

With the GTX 950, I used the "optimised" settings recommended by the Nvidia Experience application (mostly Medium quality settings). I was delighted to game at 900p with 60+ fps in light-action scenes, dropping to the low 50s in ONLY ONE of the heavy-action parts of the demo at Nightmare difficulty.

Using the GTX 970 described in my first post, I get to enjoy Doom at 1600x1200 on High-Quality settings at 90+ fps. Nvidia Experience recommended Ultra settings, but using them made my implementation unstable: the Nvidia drivers started crashing, so I am back to High-Quality.

-

5 hours ago, ish said:

Thanks a lot for your post. I am also trying to build a similar configuration using rMBP (early 2015) + GTX 1070 + AKitio Thunder2 + Internal display + Ubuntu 16.04. It would be very helpful if you could detail out the complete setup process. Thank you very much for your help.

Actually, the full setup was very simple for this implementation:

-

Every device was off.

-

Plug the "Seasonic 350 watts 80+ bronze" to the "EXP GDC Beast adapter"

-

Physically install one of the cards (Zotac Geforce GTX 750 1GB or EVGA GTX 950 SC+) on the "Beast adapter"

-

Provide power to the GPU (only the 950 needed this). I use a power cable from the Beast to the PCIe power

-

Plug the ExpressCard adapter into the Lenovo T430.

-

Turn on the T430, this it sends a power on signal to the Beast/EGPU, so they turn on too.

-

On the OS selection screen, before booting into Ubuntu 16.04, I manually added two grub flags: pci=nocrs pci=realloc. These were later added permanently to grub

-

Check if the eGPU was recognised. On the terminal, I executed: lspci. My device was listed.

-

After two minutes Ubuntu had enabled the Nouveau drivers. No problem

-

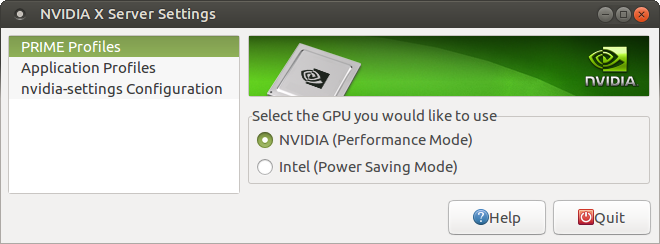

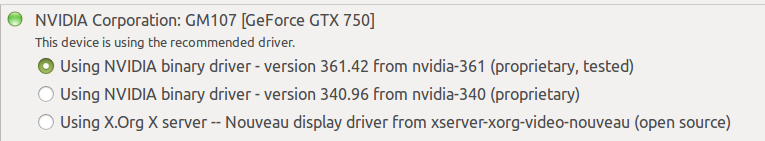

Open the "Additional Drivers" application in the configuration. I selected to use the proprietary and tested drivers (In the first screenshot of my original post). Wait for it to finish installing.

-

Reboot

-

Done

The Nouveau drivers get disabled by the automated setup, and they have not given me any problems.
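For anyone wanting to make the two grub flags permanent, here is a minimal Python sketch of one way to do it. It assumes the stock Ubuntu layout, where the kernel command line lives in the GRUB_CMDLINE_LINUX_DEFAULT line of /etc/default/grub and `sudo update-grub` is run afterwards; the function name `add_kernel_flags` is just an illustration, not the exact method used in this setup. It only transforms text, so it can be tried on a copy of the file first.

```python
import re

# The two flags mentioned in the steps above.
EGPU_FLAGS = ["pci=nocrs", "pci=realloc"]

def add_kernel_flags(grub_text, flags=EGPU_FLAGS):
    """Return grub_text with any missing flags appended to GRUB_CMDLINE_LINUX_DEFAULT."""
    pattern = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)

    def patch(match):
        current = match.group(2).split()
        # Only append flags that are not already present, so the edit is repeatable.
        missing = [flag for flag in flags if flag not in current]
        return match.group(1) + " ".join(current + missing) + match.group(3)

    return pattern.sub(patch, grub_text)

# Example with a typical default line (assumed, yours may differ).
sample = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet splash"\n'
print(add_kernel_flags(sample))
```

Running it twice on the same text leaves the line unchanged the second time, which is handy if the file has already been edited by hand.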

Now, if I am using an external LCD monitor, I need to boot the system with it unplugged. Then, once I have started a session in the OS, I plug in the monitor and enable it.
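The lspci check from the steps above can also be scripted. This is only a sketch: the helper name `find_nvidia_devices` and the sample lines are hypothetical, and the bus addresses and device names on a real machine will differ. On a real system you would feed it the actual output of lspci instead of the sample string.

```python
def find_nvidia_devices(lspci_output):
    """Return the lspci lines that mention an NVIDIA device."""
    return [
        line.strip()
        for line in lspci_output.splitlines()
        if "nvidia" in line.lower()
    ]

# Hypothetical sample output, for illustration only.
sample = """\
00:02.0 VGA compatible controller: Intel Corporation Graphics Controller
05:00.0 VGA compatible controller: NVIDIA Corporation [GeForce GTX 950]
05:00.1 Audio device: NVIDIA Corporation Device
"""

for line in find_nvidia_devices(sample):
    print(line)
```

An empty result after plugging in the adapter would suggest the card was not enumerated at all, which is the case where the grub flags or the suspend-and-plug trick are worth trying.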

-

-

@jowos I play Doom on Windows, and my wife plays The Sims 3 on Windows. On Linux, I was playing OpenArena, Quake 3 and Xonotic. But I'm so into Doom right now that I stopped playing anything else.

-

Thank you for your messages, and sorry pals, I was not paying attention to this forum. This setup has been super stable. In fact, I have even increased the RAM to 16 GB and then upgraded the eGPU to an EVGA GTX 950 SC+ without any problem, keeping the same benefits. This laptop has seen a good amount of gaming (just DOOM and The Sims 3) with PCIe 2.0 enabled and everything rock solid!

Right now I cannot perform any tests because I have sold the GPUs, but I should get an upgrade in two days.

Now to answer your questions.

On 23/07/2016 at 8:16 PM, Draekris said:

Wow, this is a really nice setup! Do you know if you get the pcie compression benefits of Optimus that the Windows drivers have?

Actually, I did not test the compression. I was just amazed I was able to use the eGPU to drive the internal LCD without doing anything special.

On 25/07/2016 at 3:54 AM, jowos said:

Did you do anything like Tech Inferno Fan's diy gpu setup 1.30?

I did not use any extra software. Everything was plug and play.

On 25/07/2016 at 7:36 AM, TheReturningVoid said:

Nice setup! I'm planning to do a setup on an Arch Linux system myself soon. Do you still need bumblebee for this to work? I'd assume it's unnecessary, because the new card has the power to handle everything

Good luck with your setup! By the way, I was an Arch user 4 years ago. Sweet distro. As for Bumblebee: no, I did not need it. The Nvidia drivers handle all the work by themselves. The "Nvidia X server settings" application lets you select the card you want to use. Then just a quick log out and log in, and you are running on the selected card.

One thing to notice here.

- If you select "NVIDIA (Performance Mode)", the laptop panel is driven and accelerated by the eGPU. You can plug in an external panel if you want; both are accelerated.

- If you select "Intel (Power Saving Mode)", the eGPU gets disabled as if no eGPU were installed. Even the drivers look as if they were not installed.

So if you intend to completely dedicate the eGPU for CUDA/OPENCL computing, this is the procedure I follow:

- Select "NVIDIA (Performance Mode)"

- Edit your "xorg.conf" to switch the "active" screen from "Nvidia" to "intel"

- Log out and Log in again.

This is the only way I have found to free the eGPU from being "distracted" by drawing the desktop. Then if you want to do some gaming, just edit your "xorg.conf" again.

-


@Jong31 here are some alternatives you can try which have been helpful for me under different scenarios:

- Try booting your laptop with no external monitor plugged into your eGPU. If it boots correctly, plug in the monitor once you are inside your OS.

- Try plugging in your eGPU after your laptop has booted. How? First boot with no eGPU connected (or with your PSU turned off). Then suspend your computer after you have logged in to your OS. Next, plug in your eGPU (or turn on your PSU). Now wake up your computer.

Good luck.

-

NOTE: This post has been updated to reflect the latest state of this implementation...

Hello mates,

I am delighted to share a bit of my new successful implementation...

I have fought my way through previous eGPU implementations on several Linux distributions, from Ubuntu Mate 14 and 15 to Linux Mate and CentOS 5 and 6, but I only documented one of them.

I had to share this experience, mostly because I am amazed by what the community behind Ubuntu Mate 16.04 has achieved. So bear with me.

System Specs

Lenovo T430

Intel Core i5-3320m at 2.6 Ghz

8 GB DDR3L 12800 (later upgraded to 16 GB DDR3L 12800)

Intel HD 4000

EGPU:

Zotac GeForce GTX 750 1GB (original card)

EVGA GeForce GTX 950 SC+ 2GB (first upgrade)

KFA2 GeForce GTX 970 OC Silent "Infin8 Black Edition" 4GB (second upgrade)

EXP GDC v8.3 Beast Express Card

Seasonic 350 watts 80+ bronze

Display:

Internal LCD 1600x900

Dell UltraSharp 2007FP - 20.1" LCD Monitor

Procedure:

I prepared the hardware as usual: feeding power to the Beast adapter from the PSU, then plugging the adapter into the laptop's ExpressCard slot.

The Ubuntu Mate 16.04 installation I used is only a couple of weeks old and is loaded only with the full stack of Python and web tools I need.

For the integrated graphics, the stock open source drivers are used. For the eGPU, I was ready to perform the usual steps: disable the nouveau drivers, reboot, switch to run level 3, install the CUDA drivers, etc. But...

Following advice I read on an Ubuntu/Nvidia forum, and feeling very sceptical, I installed the most recent proprietary drivers for my card and rebooted. Boom! I was done. Even functionality previously not available in Linux is now available...

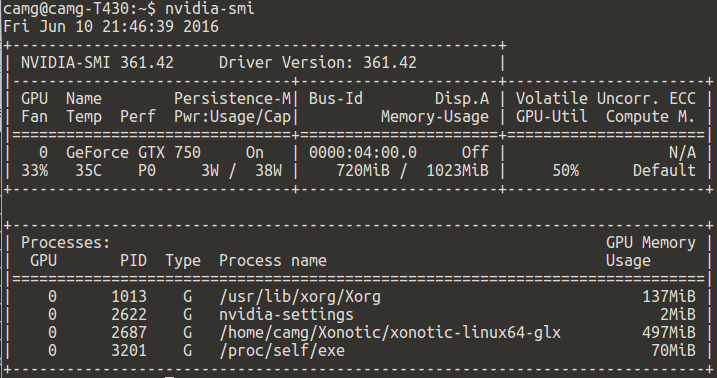

As you can see from the screenshot, the drivers now report which processes are being executed on the GPU. That was previously reserved for high-end GPUs like Teslas.

The screenshot also shows the computation being performed on the GPU while the display is rendered on my laptop's LCD.

It also shows how the proprietary driver can now display the GPU temperature as well as other useful data.
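The per-process report described above can also be read from a script. The following is a rough sketch built on nvidia-smi's CSV query mode (`--query-compute-apps` with the `pid`, `process_name` and `used_memory` fields); the helper names and the sample line are my own illustration, not output captured from this system.

```python
import subprocess

# Ask nvidia-smi for the compute processes currently on the GPU, as CSV.
QUERY = [
    "nvidia-smi",
    "--query-compute-apps=pid,process_name,used_memory",
    "--format=csv,noheader",
]

def parse_compute_apps(csv_text):
    """Turn 'pid, name, memory' CSV lines into a list of dicts."""
    apps = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        pid, rest = line.split(",", 1)      # pid is the first field
        name, memory = rest.rsplit(",", 1)  # memory is the last field
        apps.append({"pid": int(pid), "name": name.strip(), "memory": memory.strip()})
    return apps

def running_compute_apps():
    """Run nvidia-smi and parse its output (needs the proprietary driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)

# Hypothetical sample line, for illustration only.
sample = "4242, python, 310 MiB\n"
print(parse_compute_apps(sample))
```

Calling `running_compute_apps()` while a training job is active should list the Python process, which is a quick way to confirm the work really landed on the eGPU.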

For those of you into CUDA computing, I can report CUDA toolkit 7.5 is now available in the Ubuntu repository and also installs and performs without any issue. I went from zero to training TensorFlow models using the GPU in 30 minutes or so. Amazing!

I could expand this post if anyone needs more info, but it was very easy.

Cheers!

After upgrading the GPU two times, my system is now capable of handling Doom fairly easily. Now some benchmark results.

| RAM | eGPU | PCIe gen | 3DMark 11 | 3DM11 Graphics |
|---|---|---|---|---|
| 8 GB | GTX 750 | 2 | P3996 | 4095 |
| 16 GB | GTX 750 | 2 | P3994 | 4094 |
| 16 GB | GTX 950 | 1 | P5214 | 7076 |
| 16 GB | GTX 950 | 2 | P5249 | 7709 |
| 16 GB | GTX 970 | 1 | P7575 | 11202 |
| 16 GB | GTX 970 | 2 | P8176 | 12946 |
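To put the Gen 1 and Gen 2 rows for the GTX 950 and GTX 970 in relative terms, here is a small Python sketch that computes the percentage gain from the scores listed above. The numbers are taken from the results; the dictionary layout and function names are just for illustration.

```python
# (overall 3DMark 11 score, graphics score) per card and PCIe generation,
# copied from the benchmark results above.
results = {
    "GTX 950": {1: (5214, 7076), 2: (5249, 7709)},
    "GTX 970": {1: (7575, 11202), 2: (8176, 12946)},
}

def percent_gain(old, new):
    """Relative improvement of new over old, in percent."""
    return (new - old) / old * 100

def gen2_gains(results):
    """Return {card: (overall gain %, graphics gain %)} going from Gen 1 to Gen 2."""
    gains = {}
    for card, by_gen in results.items():
        (overall1, graphics1), (overall2, graphics2) = by_gen[1], by_gen[2]
        gains[card] = (
            round(percent_gain(overall1, overall2), 1),
            round(percent_gain(graphics1, graphics2), 1),
        )
    return gains

for card, (overall, graphics) in gen2_gains(results).items():
    print(f"{card}: overall +{overall}%, graphics +{graphics}%")
```

The graphics sub-score gains about 9% on the GTX 950 and about 16% on the GTX 970, so the faster card benefits more from the wider link.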

Now, the difference between Gen 1 and Gen 2 might not seem significant from the results in the table, but in Doom it amounts to around 15 fps on average, and it is even more noticeable during intense fights.

-

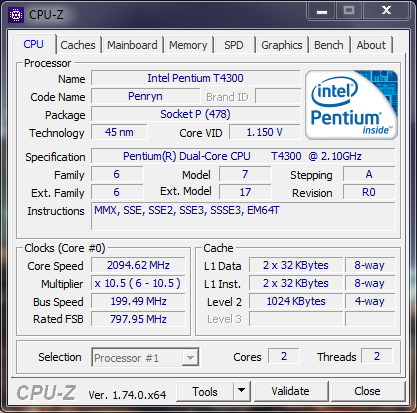

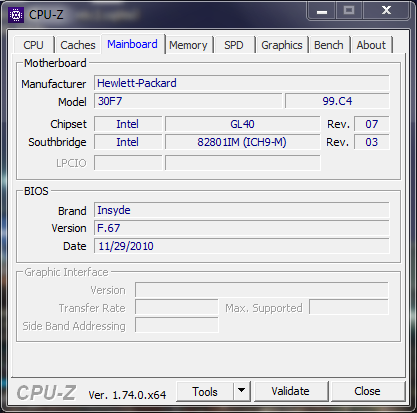

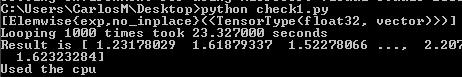

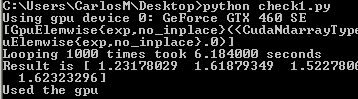

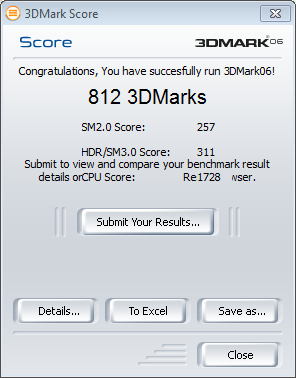

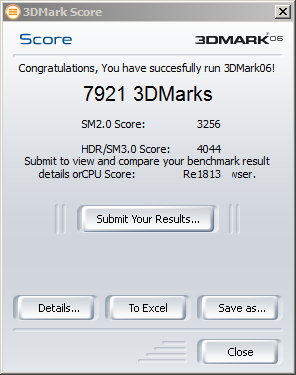

Finally, the Windows part of my project is completed...

Win 7 64 bit

6 GB RAM DDR2

Pentium Dual-Core T4300 @ 2.10Ghz

Intel 4500MHD

Evga GTX 460 SE

EXP GDC Beast v8.3 EC1

Dell DA-2

Python with Theano on GPU (Cuda 5.0)

Benchmarked with 3DMark06, almost 10x the old score!

Now I'll try my luck with CUDA on Linux Mint 17.3.

-

I finally got my EXP GDC BEAST v8.3 and an EVGA GeForce GTX 460 SE from local eBay sellers. The problem is that the PCI-E power cable is taking ages to get here, so I can't properly test my setup. So I need to ask a question of any EXP GDC BEAST owner here...

If you use the EXP GDC without a card, does its blue LED stay on all the time while the red LED never turns on? I am worried my EXP GDC could be broken because mine does just that! I plugged the power and data cables into my EXP GDC to at least test its USB port, and it seems to work, but I read on a forum that the blue LED should be on only briefly during initialisation, after which only the red LED should be on. I am afraid it is not working correctly, but I can't perform further tests until I get the PCI-E power cable.

Could any kind owner of an EXP GDC BEAST please help me out by doing the same test with your item? I would be really thankful.

Thank you guys.

-

Hi guys, I see you are very experienced with this EXP GDC adapter, so I want to share this with you. I got a GTX 580 from eBay. It's still on its way, but basically I would like to power it using a DA-2. Do you think that would be possible, maybe by underclocking it? By the way, I also have the option of using a GTX 460 SE, so I'll try both, keep the one that works for me and sell the other, but I would like to read your opinions on this, please.

-

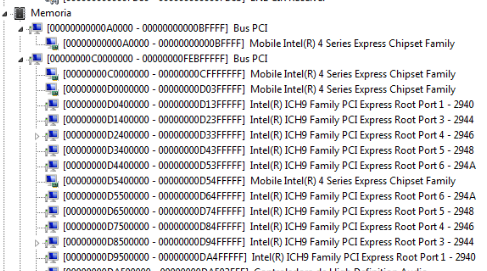

Based on what you guys have posted here, I found my system's TOLUD is 3GB, so it seems I won't need to perform a PCI "All" compaction.

Ideally I would aim for an Optimus x1.Opt implementation in order to use the Internal LCD for display purposes.

Some specs for the chipset I am dealing with:

Intel® 82801IB ICH9, ICH9 Mobile Base

PCI Express* Interface

The ICH9 provides up to 6 PCI Express Root Ports, supporting the PCI Express Base Specification, Revision 1.1. Each Root Port supports 2.5 GB/s bandwidth in each direction (5 GB/s concurrent). PCI Express Root Ports 1-4 can be statically configured as four x1 Ports or ganged together to form one x4 port. Ports 5 and 6 can only be used as two x1 ports. On Mobile platforms, PCI Express Ports 1-4 can also be configured as one x2 port (using ports 1 and 2) with ports 3 and 4 configured as x1 ports.

I booted into Windows 7 to check the first PCI address...
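As a side note on the chipset figures quoted above: the 2.5 figure corresponds to the raw PCIe 1.x signalling rate of 2.5 GT/s per lane, and with the 8b/10b encoding used by PCIe 1.x and 2.x the usable rate of an x1 link (which is what an ExpressCard slot provides) is noticeably lower. Here is a rough Python sketch of the arithmetic; the function name is illustrative and protocol overhead beyond the line encoding is ignored.

```python
def lane_throughput_mb_s(gigatransfers_per_s, lanes=1):
    """Usable one-direction throughput in MB/s for a PCIe 1.x/2.x link.

    PCIe 1.x and 2.x use 8b/10b encoding: every 10 bits on the wire
    carry 8 bits of data. Packet overhead is not modelled here.
    """
    payload_bits_per_s = gigatransfers_per_s * 1e9 * 8 / 10
    return payload_bits_per_s / 8 / 1e6 * lanes

print(lane_throughput_mb_s(2.5))  # PCIe 1.1 x1, as on the ICH9
print(lane_throughput_mb_s(5.0))  # PCIe 2.0 x1, for comparison
```

This gives 250 MB/s per direction for a Gen 1 x1 link and 500 MB/s for Gen 2 x1, which is the bandwidth budget an ExpressCard eGPU has to live within.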

-

Hi guys, I have been following your friendly and helpful community for some time and after reading lots of success stories I decided to jump into this eGPU world! So I am looking for your guidance/comments/tips.

My current laptop is an old HP DV4 1523la with an Intel Pentium Dual-Core T4300 @ 2.10 Ghz processor, 6GB DDR3 800Mhz, integrated Intel GMA 4500M.

I am considering the Express Card version of EXP GDC v8.0 and an Asus GT-740 OC 1GB GDDR5.

I am a Linux user and a very occasional FPS gamer (Nexuiz and Xonotic).

My main interest in this project is to provide this laptop with entry level CUDA capabilities with the least investment possible.

As such, I would not mind just using the card as a processing unit, but if a successful implementation has the added benefit of letting me enjoy my favourite games with high frame rates and visual settings, it would be AWESOME!

So, in your experience, guys, what do you think? Does my project seem promising, or is it most likely to be a complete failure?

Cheers!

EXP GDC Beast/Ares-V7/V6 discussion

in Enclosures and Adapters

Posted

@mimen Although I do not own a DA-2 it seems like you should be safe with a 970. Actually, this card seems to be capped at 200W max. That is the reading I got from the nvidia-smi utility. My 970 seems to run at a constant 149W under full load, I am sure there must be higher power peaks during gaming but the 200W cap suggests you should be safe.
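If you want to take the same readings on your own card, here is a minimal Python sketch using nvidia-smi's CSV query mode with the `power.draw` and `power.limit` fields. The helper names are my own, and the sample line simply reuses the 149 W and 200 W figures mentioned above rather than real captured output.

```python
import subprocess

# Ask nvidia-smi for current draw and the enforced cap, in watts, without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=power.draw,power.limit",
    "--format=csv,noheader,nounits",
]

def parse_power(csv_line):
    """Parse a 'draw, limit' CSV line into two floats (watts)."""
    draw, limit = (float(field) for field in csv_line.strip().split(","))
    return draw, limit

def read_power():
    """Run nvidia-smi and return (draw, limit) for the first GPU listed."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_power(out.splitlines()[0])

# Sample line built from the figures in the post above.
draw, limit = parse_power("149.00, 200.00")
print(f"draw {draw:.0f} W, cap {limit:.0f} W, headroom {limit - draw:.0f} W")
```

Polling `read_power()` during a game session would give a better picture of the peaks than a single reading under a steady compute load.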