sskillz

Everything posted by sskillz

-

Be more specific. What are your system specs? Which GPU? Are you using Setup 1.x? What exactly doesn't work? Does Setup 1.x compact it successfully? Is it a BSOD? Etc.

-

No, it isn't thermal. I ran my PC over an AC unit, and the ~10 second benchmark (32M) started, stayed, and ended with my CPU running at a 27x multiplier while temps stayed under 62. And I'm not alone: another forum user reported the same with the same laptop and CPU. Here's my ThrottleStop bench log: nopaste.info - free nopaste script and service

Send @Tech Inferno Fan a PM and see what can be done. I'm sure he will help.

-

Hey, I've tested Crysis 3 with a 6950 on both my desktop and my laptop. I got a really strange result and I may have found the solution. I have a crappy CPU in my desktop (but I think it's good enough) and I get 30fps at the start of the jungle scene (on the stairs) and almost 60fps while looking at the floor. This is on low settings with very high textures, original version of Crysis.

On the laptop I was getting just 20fps looking at the jungle and 30fps looking at the floor behind me, so I checked GPU and CPU usage and both were below 100%: 75% GPU and, I think, 20% CPU. Then I tried disabling vsync in the game options and got 25fps looking down at the jungle and 45fps looking at the floor behind me. GPU utilization jumped to 100% while CPU utilization stayed at ~20%. I can't really explain why it wasn't using the full GPU power with vsync at <= 30fps if it was synced to 30Hz and not 60Hz, as it seemed to be.

So it is still within the ~83% of desktop performance, at least for this card, and doesn't seem to be affected more than other games, unless you'd like another scene tested (I can't get the map load command to work, so if it's near the start that would be great). Related thread: https://forums.geforce.com/default/topic/537360/crysis-3-locked-30-fps-when-v-sync-is-enabled/
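One possible explanation for the vsync numbers above, offered as an assumption and not something confirmed in this thread: with double-buffered vsync on a 60Hz display, every frame has to wait for a whole number of refresh intervals, so the frame rate snaps down to 60, 30, 20, 15 and so on, and the GPU idles while it waits. A minimal Python sketch of that rule (the function name and the 60Hz default are illustrative, not taken from the game or driver):

```python
import math


def vsync_fps(uncapped_fps, refresh_hz=60.0):
    """Frame rate under double-buffered vsync (assumed model).

    Each frame is held until the next refresh, so it occupies a whole
    number of refresh intervals and the rate becomes refresh_hz / n.
    """
    if uncapped_fps <= 0:
        raise ValueError("uncapped_fps must be positive")
    # Number of refresh intervals one frame needs, rounded up.
    intervals = max(1, math.ceil(refresh_hz / uncapped_fps))
    return refresh_hz / intervals


if __name__ == "__main__":
    # Uncapped laptop readings reported in the post: 25fps and 45fps.
    for fps in (25, 45):
        print(fps, "->", vsync_fps(fps))
```

Under this model the uncapped 25fps and 45fps readings map to 20fps and 30fps, which matches what the laptop showed with vsync on and would also account for GPU usage sitting below 100%.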

-

Are BF3 and Crysis 3 the most recent demanding games where people have noticed a bandwidth limitation beyond ~85% of desktop performance? I think someone on a Polish board keeps a list. (I'm not really interested in games that aren't demanding but are still restricted, like World of Warcraft.)

-

12.5" HP Elitebook 2570P Owner's Lounge

sskillz replied to Tech Inferno Fan's topic in HP Business Class Notebooks

I'll just add to that: when applications use mostly 1, 2, or (if I remember correctly) even 3 cores, the CPU is excellent. It draws <36W, so it runs at its spec speed. But the 2560p is selling for about the same price as the 2570p, so I'd recommend the 2570p over the 2560p for those who don't already have the older model. Where's the Haswell 2580p?

-

12.5" HP Elitebook 2570P Owner's Lounge

sskillz replied to Tech Inferno Fan's topic in HP Business Class Notebooks

As you guys have noticed a TDP limit with the 2570p running quad cores, I've noticed it as well with the Sandy Bridge 2560p, just more severe (it seems to be at about 36W, compared to 41W on the 2570p). The 2760QM should run at 32x on 4C but I only get 27x. Here is the ThrottleStop log of a 32M test, which was run over my AC unit!

| DATE | TIME | MULTI | C0% | CKMOD | CHIPM | BAT_mW | TEMP | VID | POWER |
|---|---|---|---|---|---|---|---|---|---|
| 2014-01-07 | 14:44:51 | 31.48 | 0.5 | 100.0 | 100.0 | 0 | 27 | 1.1959 | 4.0 |
| 2014-01-07 | 14:44:53 | 27.07 | 92.4 | 100.0 | 100.0 | 0 | 51 | 1.1259 | 32.1 |
| 2014-01-07 | 14:44:54 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 53 | 1.1208 | 34.8 |
| 2014-01-07 | 14:44:55 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 55 | 1.1208 | 35.1 |
| 2014-01-07 | 14:44:56 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 57 | 1.1259 | 36.0 |
| 2014-01-07 | 14:44:57 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 58 | 1.1208 | 35.8 |
| 2014-01-07 | 14:44:58 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 59 | 1.1208 | 35.5 |
| 2014-01-07 | 14:44:59 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 59 | 1.1208 | 36.0 |
| 2014-01-07 | 14:45:00 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 60 | 1.1208 | 35.9 |
| 2014-01-07 | 14:45:01 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.6 |
| 2014-01-07 | 14:45:02 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.7 |
| 2014-01-07 | 14:45:02 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 62 | 1.1208 | 36.3 |
| 2014-01-07 | 14:45:04 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.8 |
| 2014-01-07 | 14:45:04 | 27.12 | 38.8 | 100.0 | 100.0 | 0 | 39 | 1.2109 | 20.5 |
| 2014-01-07 | 14:45:05 | 13.63 | 0.2 | 100.0 | 100.0 | 0 | 34 | 1.2109 | 3.8 |

I've also posted it in the 2560p forum: http://forum.techinferno.com/hp-business-class-notebooks/2090-hp-elitebook-2560p-owners-lounge-%5Bversion-2-0%5D-2.html#post79495

-

HP Elitebook 2560P Owner's Lounge [version 2.0]

sskillz replied to Tech Inferno Fan's topic in HP Business Class Notebooks

Hello fellow 2560p users. I've been using different quad cores with this laptop and I've noticed that they are all limited by what seems to be a cap on the CPU's TDP draw. It's probably set in the BIOS (and probably unmodifiable for now, since the BIOS is RSA signed). Users of 2570p laptops have noticed the same, but to a lesser extent.

The quad cores I tested are the 2630QM (QS) and the 2760QM (ES). Most of the time the CPUs don't draw their spec 45W, so they are fine most of the time, such as in single-threaded applications, or even with up to 6 threads, since that will mostly use 3 of the 4 cores. They do try to draw 45W when all the cores are busy, meaning all 4 cores are running and, of course, Turbo is enabled. The CPU then tries to draw 45W and gets limited to about 36W, which limits the frequency. For example, my 2630QM should have run at 2.6GHz on all cores but was limited to just 2.5GHz, if I recall correctly, which is not so bad. But the 2760QM was limited to 2.7GHz instead of 3.2GHz!

If you'd like to test yours, download the latest ThrottleStop and run TS Bench on 8 threads or more. When I run that test, the 2760QM starts at multi 27x (2.7GHz) and then, as it gets hot, throttles down to 2.5GHz. I don't mind the throttling, but I do mind the starting frequency, as I can't change that with stronger cooling methods.

Here's my ThrottleStop log file (check the Log File checkbox) from when I started the 32M test. This was taken with my laptop over an AC unit, so there is no chance of thermal throttling.

| DATE | TIME | MULTI | C0% | CKMOD | CHIPM | BAT_mW | TEMP | VID | POWER |
|---|---|---|---|---|---|---|---|---|---|
| 2014-01-07 | 14:44:49 | 20.49 | 0.3 | 100.0 | 100.0 | 0 | 27 | 1.1959 | 3.8 |
| 2014-01-07 | 14:44:50 | 29.62 | 0.6 | 100.0 | 100.0 | 0 | 27 | 1.1959 | 4.0 |
| 2014-01-07 | 14:44:51 | 31.48 | 0.5 | 100.0 | 100.0 | 0 | 27 | 1.1959 | 4.0 |
| 2014-01-07 | 14:44:53 | 27.07 | 92.4 | 100.0 | 100.0 | 0 | 51 | 1.1259 | 32.1 |
| 2014-01-07 | 14:44:54 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 53 | 1.1208 | 34.8 |
| 2014-01-07 | 14:44:55 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 55 | 1.1208 | 35.1 |
| 2014-01-07 | 14:44:56 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 57 | 1.1259 | 36.0 |
| 2014-01-07 | 14:44:57 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 58 | 1.1208 | 35.8 |
| 2014-01-07 | 14:44:58 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 59 | 1.1208 | 35.5 |
| 2014-01-07 | 14:44:59 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 59 | 1.1208 | 36.0 |
| 2014-01-07 | 14:45:00 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 60 | 1.1208 | 35.9 |
| 2014-01-07 | 14:45:01 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.6 |
| 2014-01-07 | 14:45:02 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.7 |
| 2014-01-07 | 14:45:02 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 62 | 1.1208 | 36.3 |
| 2014-01-07 | 14:45:04 | 27.00 | 100.0 | 100.0 | 100.0 | 0 | 61 | 1.1208 | 35.8 |
| 2014-01-07 | 14:45:04 | 27.12 | 38.8 | 100.0 | 100.0 | 0 | 39 | 1.2109 | 20.5 |
| 2014-01-07 | 14:45:05 | 13.63 | 0.2 | 100.0 | 100.0 | 0 | 34 | 1.2109 | 3.8 |
| 2014-01-07 | 14:45:06 | 32.79 | 0.2 | 100.0 | 100.0 | 0 | 34 | 1.2109 | 3.7 |
| 2014-01-07 | 14:45:07 | 32.71 | 0.2 | 100.0 | 100.0 | 0 | 33 | 1.1959 | 3.8 |
| 2014-01-07 | 14:45:08 | 31.86 | 0.2 | 100.0 | 100.0 | 0 | 33 | 1.1959 | 3.7 |

The rightmost column is the TDP draw (in W); the third from the left is the frequency multiplier. As you can see, under load it starts at 27x (x ~100MHz BCLK) instead of the spec 32x for 4 cores (4C). If you'd like to see what your 45W quad core is supposed to run at, visit this page: http://en.wikipedia.org/wiki/Sandy_Bridge#Mobile_processors

@vnwhite @SimoxTav @Malloot Can you test yours, and possibly produce a similar log?
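If you want to compare logs without reading them row by row, here is a rough Python sketch that summarises the loaded part of a ThrottleStop-style log. It assumes the ten whitespace-separated columns seen in the log from this test (DATE TIME MULTI C0% CKMOD CHIPM BAT_mW TEMP VID POWER); the function names, the 90% C0 threshold for "under load" and the 100MHz BCLK default are my own illustrative choices, and the embedded rows are just a few lines copied from the log above.

```python
from statistics import mean

# A few rows copied from the log above; paste your own log text here.
SAMPLE_LOG = """\
2014-01-07 14:44:51 31.48 0.5 100.0 100.0 0 27 1.1959 4.0
2014-01-07 14:44:53 27.07 92.4 100.0 100.0 0 51 1.1259 32.1
2014-01-07 14:44:54 27.00 100.0 100.0 100.0 0 53 1.1208 34.8
2014-01-07 14:45:02 27.00 100.0 100.0 100.0 0 62 1.1208 36.3
2014-01-07 14:45:05 13.63 0.2 100.0 100.0 0 34 1.2109 3.8
"""


def parse_rows(text):
    """Turn log text into dicts, skipping headers and malformed lines."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 10:
            continue
        try:
            rows.append({
                "time": parts[1],
                "multi": float(parts[2]),
                "c0": float(parts[3]),
                "temp": int(parts[7]),
                "power": float(parts[9]),
            })
        except ValueError:
            continue  # e.g. a header row with column names
    return rows


def load_summary(rows, min_c0=90.0, bclk_mhz=100.0):
    """Summarise the samples where the CPU was busy (C0% >= min_c0)."""
    loaded = [r for r in rows if r["c0"] >= min_c0]
    if not loaded:
        raise ValueError("no samples under load")
    avg_multi = mean(r["multi"] for r in loaded)
    return {
        "samples": len(loaded),
        "avg_multi": avg_multi,
        "avg_ghz": avg_multi * bclk_mhz / 1000.0,
        "max_power_w": max(r["power"] for r in loaded),
        "max_temp_c": max(r["temp"] for r in loaded),
    }


if __name__ == "__main__":
    print(load_summary(parse_rows(SAMPLE_LOG)))
```

A multiplier that sits well below the spec 4C turbo value while the peak temperature stays low and the peak power hovers around the same wattage is the pattern described above, pointing to a power limit and not thermal throttling.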

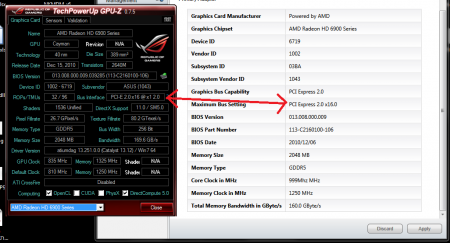

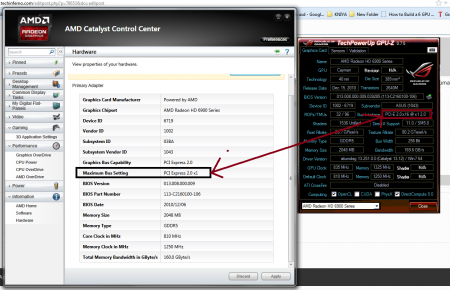

I've managed to modify some parts of Catalyst, as seen here (GPU-Z's reading is the real current speed). This isn't just changing a label, at least I hope not. I tried to trace where Catalyst gets the info in the first place, and got to some AdapterInfo structure. It might not be "deep" enough, and there are multiple places that store this info. I'm not sure which one might fool Catalyst into allowing CrossFire (if it blocks it), if that's even possible. A different way might be to just do what the Enable CrossFire button does... I still don't have a 1x-16x riser to connect a second GPU and test this.

-

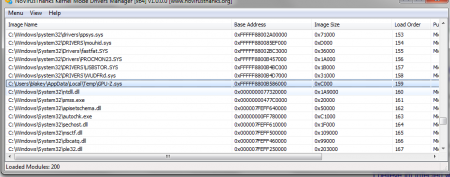

What's the kernel API (Windows', AMD's) used to read it? Maybe it can be hooked/intercepted? Do you think these two are read from that register using the same API?

Edit5: GPU-Z uses a kernel-mode driver to read the register. But neither this program nor Windows is able to see the actual .sys file in that temp folder, so I can't try to disassemble it and see how it works. Do you know specifically how I can access the said registers from Windows, even if it's from kernel mode? Are you sure it's read-only? And lastly, has anyone tried ATI Tray Tools to enable CrossFire?

-

Your result would be more interesting :| About a week, I guess :\ (They shipped it late without notifying me.) My guess is it will work on two PE4Ls if you get the addressing fixed (I'm not sure how Setup 1.x will handle it).

-

Yeah, you said a taped card didn't work on one of the 16x slots, so I just checked whether mine did, and it does. Cutting the end of the port won't help me, as each 1x slot is blocked by something on the board (CMOS battery, caps, etc.).

-

I've got myself two HD6950s that are unlocked to the HD6970 shader count to test CrossFire on my desktop. I don't have two PCI-e slots, so I'm waiting for my 1x-16x riser to arrive (which is taking forever o0). I'll try taping one in the meantime.

UPDATE: OK, taping worked!

16x score: 5176. AMD Radeon HD 6950 video card benchmark result - AMD Phenom II X4 20,Gigabyte Technology Co., Ltd. GA-MA770T-UD3P

1x score: 4505 (87% of the first score), a pretty good percentage, don't you think? AMD Radeon HD 6950 video card benchmark result - AMD Phenom II X4 20,Gigabyte Technology Co., Ltd. GA-MA770T-UD3P

* These are actually two separate but identical cards, but I don't think they would give different scores at 16x, so I didn't test the second one (the taped one) at 16x.

Waiting for my riser to arrive to test CrossFire.
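For anyone who wants to express their own results the same way, the percentage is simply the 1x score divided by the 16x score. A tiny Python sketch (the function name is made up for illustration; the two scores are the ones quoted above):

```python
def relative_performance(score, baseline):
    """Return score as a percentage of baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * score / baseline


if __name__ == "__main__":
    # 1x score against the 16x score from this post.
    print(f"{relative_performance(4505, 5176):.1f}% of the 16x score")
```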

-

So you're saying that with only one card in the system, taped to run at 1x, it runs only on a specific port (it works on one of them)? If so, can you still benchmark that one card so we will also have a desktop x1.2 result? And take a look at this: Taping Connectors, Updating The BIOS - SLI Is Coming: Time To Analyze PCI Express And I quote: And I guess we will need to test with a riser on a 1x port; we know those work from mining...

Edit: Found a Gen 2 taping test: http://www.tomshardware.com/reviews/pci-express-2.0,1915-4.html In this one they didn't mention a BIOS, and they even tested a dual-GPU single card (9800 GX2). Shame it's an old one.

-

Why are you using such an old version of Setup 1.x? There is version 1.3 now. I don't really know that old version, but you would use !Run compact (after choosing the method and ignore group), which automatically writes the PCI.bat file for you.

-

Strange, your 3DMark shows you have zero cards (0x). Powerful card. How about some 3DMark11, Heaven, and game benchmarks? Someone here said he had a bandwidth bottleneck in Crysis 3 at the Welcome to the Jungle scene; you may try that too. What's your CPU/GPU usage in CoD on max settings? And on the lower one?

Does he have a PCI-e Gen 2 or Gen 3 mobo? If it works on desktop 1x links, I don't see why it wouldn't work on a laptop, except for some (minor?) extra latency (desktop PCI-e is on the northbridge vs the laptop's southbridge).

-

So how does one go about faking an x4 link? I'm looking for any deals until my bridges get here (AMD ones are in short supply). I've also tried asking the litecoin folks to do a test, since they are all running multiple AMD GPUs on 1x risers to mine, which is perfect to test on! But they didn't respond; I guess they'd want an LTC to even "waste" their time on it. Here's my thread asking: Can someone test something for me? : litecoinmining

Here's another guy offering a reward: LTC REWARD FOR HELPING ME GET MY 2x R9 290 MINER WORKING! I HAVE SPENT 30+ HOURS ON IT AND IT WILL NOT MINE! I KNOW WHAT IM DOING BUT IT WONT MINE! HELP PLEASE! : litecoinmining

10 people joined Skype to help him (I was one of them, helping him so he could help me..)

-

@Tech Inferno Fan Has anyone revisited SLI/CF yet? Like trying HyperSLI, or modifying the PCI speed reported to the driver so it allows it? I've ordered three 1x-16x PCI-e risers, a CF bridge and an SLI bridge to try on my PC first, but I don't have two graphics cards yet.

-

Hello, here's what Nando4 said:

-

Yes, the slot is cut at the end, so any card will fit it. But since it's small, you usually have to put something under the card to balance it properly, or build something for it. I myself used paper that I folded several times to lift the back of the card. As for the front bezel, I stuck its lower edge in a cardboard box.

You can see how to donate to nando for Setup 1.x in the Setup 1.x thread that I pointed to. I suggest you get all the required parts before getting Setup 1.x; nando sends you a link pretty fast by email. Also consider buying the PE4L from bplus directly: http://www.bplustech.com/Adapter/PE4L-PM060A%20V2.1.html I got it from them by DHL in less than a week (international).

-

Setup 1.x is donationware (you pay a donation to nando to receive the program) that does the following: You might need items 1, 4, 5, 6, and 7 of what Setup 1.x does. More info can be found in the relevant thread: http://forum.techinferno.com/diy-e-gpu-projects/2123-diy-egpu-setup-1-x.html

x1.2Opt means 1x (one lane) of PCI-e Gen 2 with NVidia Optimus PCI compression engaged to save bandwidth and provide better performance (compared to x1.2, especially on the internal screen and in DX9 games). Basically it's what you'll probably want, since engaging Optimus is also what enables you to reroute the eGPU's output to your laptop's internal screen.

-

No, you don't have an ExpressCard slot, and the PE4H 2.4 is Gen1 (2.5Gbps). You'll need a PE4L v2.1b or a PE4H v3.2; both are Gen2 and sadly don't have detachable cables! So the PE4L v2.1b is good enough.

This guy was using a 560 Ti with a laptop almost the same as your Asus: http://forum.techinferno.com/diy-e-gpu-projects/2109-diy-egpu-experiences-%5Bversion-2-0%5D-19.html#post30125 Strange that in his next post he says he has a 660 Ti: http://forum.techinferno.com/diy-e-gpu-projects/2109-diy-egpu-experiences-%5Bversion-2-0%5D-32.html#post31995

Check whether you have access to a free mPCI-e port, or at least that you have access to the Wi-Fi card's one. I'm guessing that if it worked for him, the port isn't whitelisted, but I can't be sure.

And quoting nando: If there is no bios option to disable your 610M, then yes, you will need DIY eGPU Setup 1.x to disable the 610M. Only then will the NVidia driver engage pci-e compression netting you x1.2Opt performance.

I think that's also needed for routing to the internal screen.
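To put numbers on Gen1 vs Gen2, here is a small Python sketch that decodes the x1.2 style link names used in these threads and estimates the raw per-direction bandwidth. It relies on the standard PCI-e figures (2.5 GT/s per lane for Gen1, 5 GT/s for Gen2, both with 8b/10b encoding); the function names are made up for illustration, and the Optimus compression behind the "Opt" suffix is not modelled, only detected.

```python
import re

# Per-lane line rate in MT/s for the generations discussed here.
LINE_RATE_MT_S = {1: 2500, 2: 5000}


def parse_link(name):
    """Split a name like 'x1.2' or 'x1.2Opt' into (lanes, gen, optimus)."""
    match = re.fullmatch(r"x(\d+)\.(\d)(Opt)?", name)
    if match is None:
        raise ValueError(f"unrecognised link name: {name!r}")
    return int(match.group(1)), int(match.group(2)), match.group(3) is not None


def link_bandwidth_mb_s(lanes, gen):
    """Raw one-direction bandwidth in MB/s, before protocol overhead."""
    # 8b/10b encoding: only 8 of every 10 transferred bits carry data.
    payload_mbit_s = LINE_RATE_MT_S[gen] * 8 / 10
    return lanes * payload_mbit_s / 8


if __name__ == "__main__":
    for name in ("x1.1", "x1.2", "x1.2Opt"):
        lanes, gen, optimus = parse_link(name)
        print(name, link_bandwidth_mb_s(lanes, gen), "MB/s", "(Optimus)" if optimus else "")
```

So a Gen1 adapter like the PE4H 2.4 gives roughly half the raw bandwidth of a Gen2 one on the same single lane, which is why the Gen2 adapters are worth getting.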

-

Placed in a spoiler, because there are 20 screenshots, and someone might have not played the game yet... it's my favourite mission in the game, spectacular and mind blowing, even on low settings like in the test. I don't have the GTX660 log at the moment, but I remember the mission was fully playable at >30 fps min and about 35 average, played with high textures and med-high settings, so again, way better. Maybe the FPS was higher because of better CPU Utilisation? The CPU Usage stays at 60% avg on hd6850, and on 660 was more like 80-85%.

My guess is it's the opposite: CPU utilization is higher because of the higher FPS, meaning the CPU can handle those frames easily but the GPU isn't keeping up, so the CPU has to wait...

-

If you can and are willing to return a card after use, why not test a 290X and be done with it?

Has anyone tried SLI/CrossFire yet? Like two PE4Ls, one on ExpressCard and one on mPCIe, with a bridge? As I understand it, the bridge lets the GPUs talk through it instead of using PCI bandwidth. This is different from combining two lanes from different ports into one link. Now, with a DSDT override, I would really want to see the most that an x1 2.0 link (or two in SLI, if possible) can handle, say a 7990 or a 690. Wish I had the money.

-

When I had the 7970 I left it litecoin mining at night. A desktop hardly takes more power, as the GPU takes the most.