Everything posted by D2ultima

-

Okay. I've run the three benches I said I would. I DID see a benefit on single GPU in the physics test, but it was very slight, as the results show. http://www.3dmark.com/compare/fs/10073292/fs/10073334/fs/10073378 As far as I can see, this is what should happen. The slight benefit is logically down to the fact that SLI itself carries some CPU overhead when rendering. The same should apply to Crossfire. It's probably a good thing nobody has figured out a way to use NVPI to disable SLI via NVAPI for the physics test alone, or that would be a way to boost scores without actually turning SLI off and on between the individual tests (which I think would really be impossible). As for why multi-GPU does better in Physics with Maxwell (and Pascal, since Pascal is essentially a die-shrunk, overclocked Maxwell), you've got me stumped. I couldn't begin to guess. It makes no logical sense to me.

-

Yes, I did see. I was wondering when they'd fix it.

-

The fourth one was at 3.9GHz, though. At some point I can run a Firestrike, disable SLI, run another, re-enable SLI, then run a final one, and see whether the Physics scores are generally consistent across them. I'll have to do it at 3.8GHz, however, since 3.9GHz has been wonky on this particular 4800MQ ever since I got it: it randomly shuts off the PC under stress. I figure I could throw more voltage at it and stabilize it, but I'd rather use what I know is stable. This will have to be later, though, as I'm about to play some Killing Floor 2 with a friend.

-

Your own scores show that the 1080N does the same thing. Single GPU from you: http://www.3dmark.com/fs/9988160 at 4788MHz, score 14,790. Dual GPU from you: http://www.3dmark.com/fs/9954310 at 4790MHz, score 15,085.

-

..... http://www.3dmark.com/compare/fs/9954310/fs/10053831# is the score comparison that @Mr. Fox posted with your score versus another user with a 6920HQ at 4GHz. http://www.3dmark.com/fs/10053831 is the run I pulled from that link just now, to look specifically at the Physics score (35.11fps; 11,059 score). http://www.3dmark.com/fs/9595299 is a run from @Spellbound, whom I asked to provide one of her benches with her 6700K at 4GHz so I could check her Physics score (39.79fps; 12,534 score), as I felt the 6920HQ run above was far too slow. I also noted that in that user's other benchmark (the Time Spy comparison in Mr. Fox's post, between your score and the MSI score, was from the same person), his CPU only went up to 3.6GHz (either he removed the overclock, or something else happened). My original post saying "something is wrong" was pointing out that the 6920HQ at 4GHz should have cracked 12K at minimum on the PHYSICS test, not sat at 11K. You bashed me and posted a single GPU benchmark with a 4.7GHz chip in response. That was pointless. Then you told me that single GPU setups get higher Physics scores than SLI setups, which, while it may be true, does not account for 1,500 lost points. And then you posted single GPU benchmarks where the highest CPU speed is 3.6GHz, which is nowhere near the 4GHz supposedly used in the MSI benchmark. What exactly have I "misunderstood" now? Or are you telling me that if I go and disable SLI on my notebook and run a single GPU Firestrike right now, I'll end up with a solid 1.5K increase in score?
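A quick sanity check of the numbers above, sketched in Python (the dictionary names are just illustrative labels). The 6920HQ run trails the 6700K run by 1,475 points, and both the score and the fps come out roughly 11.8% lower, so the gap is consistent across both metrics. This only quantifies the gap; attributing it to throttling is the inference made in the post, and it assumes both Skylake chips should perform about the same at 4GHz.

```python
def percent_deficit(expected: float, observed: float) -> float:
    """How far `observed` falls short of `expected`, as a percentage of `expected`."""
    return (expected - observed) / expected * 100.0


# Fire Strike Physics figures as quoted in the post.
reference = {"cpu": "6700K @ 4GHz", "fps": 39.79, "score": 12_534}
suspect = {"cpu": "6920HQ @ 4GHz (reported)", "fps": 35.11, "score": 11_059}

score_gap = reference["score"] - suspect["score"]
score_pct = percent_deficit(reference["score"], suspect["score"])
fps_pct = percent_deficit(reference["fps"], suspect["fps"])

print(f"Physics score gap: {score_gap} points ({score_pct:.1f}% lower)")
print(f"Physics fps gap: {fps_pct:.1f}% lower")
```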

-

What. Are. You. Talking. About? I am claiming that the person Mr. Fox compared your score to, who benched Firestrike with his 6920HQ at 4GHz, only managed 11K physics, whereas the benchmark I posted managed 12.5K physics, which means the 6920HQ machine was throttling. Why are you showing me one of your benches against the one I linked? There is no reason to do this.

-

Copying and pasting from NBR: I thought something was very odd about this... and I was right. In Firestrike ALONE he runs at "4GHz" (the other benchmarks report a slower clockspeed, unlike John's benches). But his physics score is too low. Here's @Spellbound's physics score from a random bench she did with stock CPU clocks: http://www.3dmark.com/fs/9595299 Note how her physics score was about 12,500? The 6920HQ at 4GHz (I know it can clock up to and hold 4GHz on all 4 cores without issue, as long as the laptop isn't limiting it somehow) only got 11,059 for Physics. That's too large a discrepancy; it was throttling pretty hard. My 3.8GHz 4800MQ can get about 10,400 with crap RAM; Skylake at 3.8GHz or a bit less might hit 11,000. But that wasn't 4GHz.

-

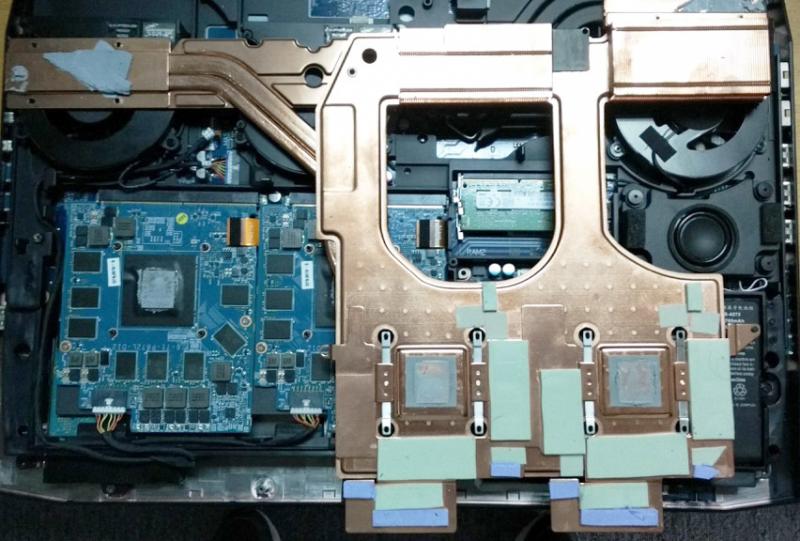

GTA doesn't read vRAM properly. For one thing, it doubles vRAM in SLI: if GTA said you were using "10GB" in SLI, you were actually using around 5GB. It used to tell me I was using 6.6GB when I obviously only have 4GB, while OSD readouts showed around 3200MB. The screenshot above is old, but you can see the readout is bugged.
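The correction described above is simple division, shown here as a Python sketch. The helper `per_gpu_vram_mb` is hypothetical, and it assumes the in-game readout just sums every card's copy. In AFR-style SLI each card keeps its own mirrored copy of the data, so the usable pool is one card's vRAM and dividing by the card count recovers the real figure. The 6.6GB readout works out to 3,300MB, in the same ballpark as the ~3,200MB OSD reading.

```python
def per_gpu_vram_mb(reported_mb: float, gpu_count: int) -> float:
    """Estimate real per-card vRAM use from an in-game readout.

    Assumption: the readout adds up every card's mirrored copy, so
    dividing by the number of cards gives what each card really uses.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return reported_mb / gpu_count


print(per_gpu_vram_mb(10 * 1024, 2))  # "10GB" shown in 2-way SLI
print(per_gpu_vram_mb(6600, 2))       # the 6.6GB readout from the screenshot
```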

-

Digital Foundry is a company that basically analyzes game graphics and compares them to consoles. They're very thorough people, and one of the very few tech-focused groups that actually understand and advocate how much high-speed, low-latency RAM can help a system. Their "Is the i5-2500K still relevant?" piece makes some very good points; they even manage to get a solid 30% extra performance just by putting good 2133MHz RAM in place of 1333MHz... which then scaled with overclocking.

FCAT, put simply, reads the timing data of frames sent from the GPU to the display. As far as I know, it only works with DVI connections, so Polaris and Pascal cards haven't had FCAT testing done on them. Basically, it tests frametimes, which is the time between consecutive frames. Let's say you've got a constant 120fps. You should have exactly 8.33ms between each frame for it to be perfectly smooth. If your framerate is 120 but your frametimes are, say... 2ms, 12ms, 12ms, 18ms, 3ms, 3ms, etc. (I'm just being wild with this), your game will look like it's running at about 20fps, not 120. High FPS with stuttery gameplay is not a pleasant experience, though to my knowledge it has little effect on benchmark scores, since whether your frametimes are consistent or all over the place, the same number of frames is still delivered in one second (if I'm wrong, do correct me). In other words, for the purpose of gaming, consistent frametimes are important.

4-way SLI works, as far as I know (from nVidia's explanations anyway), by using SFR of AFR. Something like this:

Top half of screen
--------------------------------
Bottom half of screen

Cards #1 and #3 run two-way AFR on the top half, and cards #2 and #4 run two-way AFR on the bottom half. SFR is split-frame rendering (there are a ton of other names for it too, but I'll simply stick with that one), where each card renders one portion of the screen.
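A tiny model of that 4-way layout, written in Python purely as an illustration. It assumes a fixed 50/50 split between the two halves and strict alternation within each pair, exactly as described above. The function name `gpu_for` is hypothetical, and nVidia's actual driver scheduling isn't public, so treat this as a sketch of the idea rather than how the driver really assigns work.

```python
def gpu_for(frame_index: int, screen_half: str) -> int:
    """Return which card (1-4) renders a given half of a given frame.

    SFR splits every frame between two pairs of cards; within each pair,
    AFR alternates frames (even frames -> first card, odd -> second).
    """
    if screen_half == "top":
        pair = (1, 3)
    elif screen_half == "bottom":
        pair = (2, 4)
    else:
        raise ValueError("screen_half must be 'top' or 'bottom'")
    return pair[frame_index % 2]


for frame in range(4):
    print(frame, gpu_for(frame, "top"), gpu_for(frame, "bottom"))
```

Printing the first four frames shows cards 1 and 2 sharing the even frames and cards 3 and 4 sharing the odd ones, so every frame depends on two cards finishing their halves on time.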
So 4-way SLI is effectively using the AFR of two GPUs as "GPU 1" and the AFR of the other two as "GPU 2", and running SFR across them. It generally needs a lot of bandwidth and driver optimization to keep frame timings consistent (and it often brings negative scaling in some titles due to the lack of bandwidth), but benchmarks love it. Gaming on it with bad frame timings, on the other hand, is an absolute pain, and many consider the higher FPS counts not worth the stutter. On the opinion side, if someone got 4-way SLI working on Titan X Pascals and is enjoying the life out of it? Well sure, kudos to him. I'm in the boat where things need to be smooth enough to play, but I'm not going to rain on anyone's parade for being happy. I WILL, however, attack people who lie about things, because it gives other people the wrong idea, usually about what to expect from spending cash on certain products, but about other things too. Curious, but what software reads out this 10GB for you?
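To make the frame-timing point concrete, here's a small Python sketch (the helper name `frame_pacing` is just illustrative) using the made-up 2/12/12/18/3/3ms sequence from earlier in this post. That sequence adds up to 50ms for six frames, the same as a perfectly paced 120fps, so both average out to 120fps; only the worst gap and the spread reveal the difference. It measures the numbers only; how bad the stutter feels is a separate, perceptual question.

```python
from statistics import mean, pstdev


def frame_pacing(frametimes_ms):
    """Summarize a run of frametimes (milliseconds between consecutive frames)."""
    avg_ms = mean(frametimes_ms)
    return {
        "avg_fps": 1000.0 / avg_ms,         # what an average FPS counter reports
        "worst_ms": max(frametimes_ms),     # the longest gap between two frames
        "stdev_ms": pstdev(frametimes_ms),  # how uneven the pacing is
    }


smooth = [1000.0 / 120] * 6     # ideal 120fps: ~8.33ms between every frame
uneven = [2, 12, 12, 18, 3, 3]  # the post's made-up sequence, still 50ms for 6 frames

for name, run in (("smooth", smooth), ("uneven", uneven)):
    s = frame_pacing(run)
    print(f"{name}: {s['avg_fps']:.0f} fps average, "
          f"worst gap {s['worst_ms']:.2f} ms, spread {s['stdev_ms']:.2f} ms")
```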

-

That's TB? I saw it do something similar in John's Fallout 4 video, but for some reason I thought it was "4GB" as in "4,xxx,xxxKB". Ah well. I'm still a skeptic.

-

That is not 8K. He's using way too little video RAM for 8K (DSR incurs the same vRAM hit as actually running that resolution, if I remember correctly). 4GB of vRAM is BARELY enough for GTA V "maxed" out at 1080p. I asked Tgipier from NBR (I don't believe he's on T|I) to test going from 1080p to 3440x1440 with the game on absolute maximum, and he crosses 5GB of vRAM just doing that. 4K or 8K would be much higher. He has at LEAST turned off MSAA, possibly more. I mean, good on him for getting 4 Titan XP cards working, certainly. But something's pretty off about the metrics in that video. He should have shown us his options menu if he's making those claims. I hate to be a skeptic about these things, but there are far, far too many people who say things like "I max Witcher 3 with a 970 and I get like 144fps" when they've turned off all the GameWorks options, AA and the like.
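For a rough sense of why 8K should use far more vRAM than 1080p or 3440x1440, here's a Python sketch of the pixel counts involved. 8K has 16x the pixels of 1080p and 4x those of 4K, and any resolution-dependent buffer grows with that; MSAA multiplies it again because it stores several samples per pixel. The 4 bytes per pixel (an RGBA8 buffer) is an assumption, the helper names are hypothetical, and textures and geometry don't scale with resolution, so total vRAM won't rise 16x. This is not a model of how GTA V actually allocates memory.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "3440x1440": (3440, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}
BASE_PIXELS = 1920 * 1080


def pixel_ratio(width: int, height: int) -> float:
    """Pixel count relative to 1080p."""
    return (width * height) / BASE_PIXELS


def color_buffer_mib(width: int, height: int,
                     bytes_per_pixel: int = 4, msaa_samples: int = 1) -> float:
    """Size of ONE colour render target in MiB (assumes RGBA8 by default)."""
    return width * height * bytes_per_pixel * msaa_samples / 2**20


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name:>9}: {pixel_ratio(w, h):5.2f}x the pixels of 1080p, "
          f"one RGBA8 buffer {color_buffer_mib(w, h):6.1f} MiB, "
          f"with 4x MSAA {color_buffer_mib(w, h, msaa_samples=4):6.1f} MiB")
```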

-

Interesting... though I expect four would've been a stutter fest. I'd say three might perform better. It's too bad; I'd have liked to see that. Maybe a 4.8GHz 5960X with three Titan XPs would finally get GTA V maxed out and hitting 120fps constantly.

-

Yes, I know that scaling and utilization are different. But I DO notice better frames with SLI on versus off; otherwise I disable it. Dark Souls 3 is one such game where SLI has negative scaling. But yes, as I said: get the single strongest GPU before even considering SLI. And there is *NO* AMD card on the market I would Crossfire. None. Crossfire *IS* dead. nVidia Profile Inspector is a big part of SLI's advantage. I've been able to get SLI working with positive scaling in multiple games that don't support SLI out of the box, even UE4 ones. Killing Floor 2, three months before it got an official profile. Ark: Survival Evolved, which will never get a profile. Unreal Tournament 4 can have SLI forced, though TAA shouldn't be used. How to Survive 2 worked well with forced SLI and let me max it and keep a constant 120fps where single GPU couldn't. Toxikk was also easily forced. Overwatch I forced before its profile appeared in the driver, and it worked swimmingly. Crossfire can't do any of that. *BUT* I will be clear: SLI is not for somebody who isn't fully willing to put in a lot of elbow grease to get it working. It just is not. 1080s are the best for the mobile platform, so it makes sense to SLI them. If they shoved a single Titan X Pascal into a P870DM3 and got it working, I'd grab that over 1080 SLI in a heartbeat. But as of now, it isn't here. On desktops, as I said, get a Titan X Pascal before SLI-ing. If games are running DX12 like they run DX11 (which they are), then nVidia can add DX12 profile bits. NVPI already has DX12 bits in a section, though since I refuse to touch Windows 10 on this PC, it's a pointless option for me. But it means the driver supports it, and it can be enabled in games. A simple "force AFR1" or "force AFR2" won't cut it, though. However, as things currently stand, there really is no benefit to multi-GPU in DX12/Vulkan titles.
We'll see how that pans out in the next few months, but I think DX12 in its current state does more harm than good for gaming. Vulkan is middle of the road. Its lack of multi-GPU support and its reliance on driver-side game optimization are as bad as DX12's, but it doesn't require Windows 10 and can run on Linux and Mac, and that makes all the difference in the world. And yes, I know nVidia is scaling SLI back. I think, though, it's more that they can't attack the bandwidth problems without losing money (an XDMA-style card design, or consumer NVLink in a non-proprietary format, possibly over PCIe interfaces). Since they can't charge for the proper solution, they axe 3-way and 4-way, use a doubled-up LED bridge, call it "High bandwidth", and call it a day. It's a band-aid on the problem, but one that costs them next to nothing. I'll be watching multi-GPU closely, but I already tell everyone to ignore it. I don't even mention forcing it or anything like that. If somebody already knows about it, they'll get multi-GPU anyway. Anybody asking the question just won't enjoy it. The 1070 SLI laptop models coming out from MSI and such make me laugh. Those are pointless.

-

Yes. GOOOD. Mwahahahahaha. Also, @Brian, thank you for that link to the SLI testing charts. I basically knew all of that already, but it is an EXTREMELY nice collection of such testing (since I do not have the hardware myself) that I can show to other people, and it's already been added to my SLI guide. Edit: Brian, do you think you could relax my forum signature limit to 3 URLs? I basically cannot change my signature at all without removing one of the existing links, and I wanted to update the specs portion a bit. Or relaxing the whole forum's requirements a bit works too.

-

As far as I know, it can be done driver-side, just like all the other "optimizations" DX12 is bringing... every dev is in a hurry to toss DX12/Vulkan onto a game, but they don't bake a single iota of optimization into it and let the driver do all the optimizing. In this sense, it's basically DirectX 11 with less CPU usage, and that's nothing special whatsoever (properly coded games wouldn't be eating i7s for breakfast like 92% of AAA titles since 2015 do). In fact, that's pretty much the reason nVidia has been doing worse in DX12 and Vulkan than in DX11: their DX11 drivers have had as much CPU overhead removed as possible and are very mature. Since DX12/Vulkan relies on the (immature) drivers for optimizations, and there is little to no further CPU overhead to remove in the driver (though in-game CPU usage is lowered), they perform worse. So basically, we've been waiting on drivers to give us DX11-like performance or better with DX12/Vulkan. On the other hand, it's also why AMD performs much better. Since DX12/Vulkan eliminates the ridiculous driver CPU overhead AMD has in DX11 titles, their cards perform close to what the hardware actually allows. So take DX12/Vulkan performance on AMD cards, add about 5-10% from driver optimizations (just like nVidia had to do), and THAT is what AMD cards should be doing in DX11 today. Hence my intense annoyance at them for focusing on everything that doesn't matter for competing right now. That's what I would do... except I would just get two Titan X Pascals =D. I'm irrational when it comes to this... single GPU will just never be enough for ol' D2. But yes, it's extremely questionable. The problem is that while every dev under the sun is happy coding AFR-unfriendly tech into games, none of them give a flying meowmix about actually optimizing the damn games.
"Unreal engine 4 omg" is so popular in gaming, but then there's games I can barely get 60fps on low in while forcing SLI (Dead by Daylight is a good example) whereas single GPU I get 100+fps maxed in Unreal Tournament 4. If every game ran like UT 4, I'd be perfectly fine. But not every game does, and that's a rather large issue. And it keeps happening with most every title I see, especially ones on Unity 5/Unreal 4. Did you know it takes approximately 85-95% of one of my 780Ms to render Street Fighter 5 at 1080p with the settings turned up? You know, the game that looks like this? Compare that to UT4 here. You understand what I mean. Ah you and I are so alike in our irrationality. We both so don't want to give up our notebooks. 780M was $860 and 770 4GB was $450 though. 970 was $330 and 980M was $720. We've been around or over double price for quite some time. The 1080 is replacing the 980 though, which was $1200 over the $550 desktop model, so... if anything... price markup has actually gone down? =D? =D =D? (Yeah I know it's still garbage). I AM DISAPPOINTED IN YOU MISTER FOX. D=. Let me fix your statement. "Bench with SLI and game where supported, and where it's not supported you force SLI on that bastard with nVidia Profile Inspector like a true enthusiast until it works and laugh at people who don't know how to do this, and still game in SLI." There, I fixed your statement for you.

-

Ah yes. THAT way. I wasn't too sure, but better to ask xD

-

Your usage of the word "paradise" confuses me. I also imagine Prema exorcises in full-on anime style complete with unreasonable power ups and general all-around badassery. He's like Hackerman xD

-

So, what have I missed? Did Prema magic up a holy grail of notebooks with his Phoenix powers yet? Has Mr. Fox led a charge on Clevo and MSI by surfacing from the water on psychic humpback whales with an army of pufferfish-poison-tipped spear-wielding flying fish? Has anybody managed to CLU everything in a P870DM3 and achieve sub-70 degree temperatures with a 4.7GHz CPU and 2GHz GPUs? DID WE ALL SHARE A PIZZA? I've only skimmed the recent developments in... well... everything. Except for that video where the Razer Blade 2016 with a 1060 in an A/C showroom with superloud fans sat at 93c constant at 1080p playing Overwatch locked to 70fps. I had a good, long, hearty laugh at that.

-

Where? I cannot find this anywhere. I KNOW for a fact they offered the GT72 with two generations of upgradeability... but that machine launched with the GTX 880M, which means Pascal *IS* the final generation. I have never seen direct proof that the GT72 900M models, or the GT80 Titan (900M-only), were ever promised two generations of upgrades, and if I have, I have forgotten it and cannot find it anymore. Either way, the GT80 is dead in the water. It has CPU cooling problems, and its CPU heatsink is shared with its GPU heatsinks, meaning it's going to have a tough time with 1070 SLI. 1080 SLI at 130W would be mostly pointless; overclocked 1070s would be better. And finally, the system CANNOT pull over 330W from any power supply, even though the board does not seem to have a physical limit. As far as I can see, they simply pushed for hybrid power because they were too cheap, too lazy, or both, to offer a dual-PSU solution, which was needed for CPU overclocks, or for 980 SLI with any CPU in the first place.

-

Your best upgrade is possibly the GTX 980 130W SLI option from the MSI GT80. But I don't know if those cards can actually fit, and if not, 980M SLI is the end of the line for that machine. The other cards simply won't fit, and as far as I know, you don't have heatsinks for their custom designs.

-

I am 100% certain that if they used a vapor chamber contact point and the P870DM3's "Grid" heatsink, we could shove a Titan X in there. The power draw WILL be less than the 1080 SLI setup, so that isn't the issue; just the heat. And I think it could be done. You could even use both existing 4-pin connectors. But that's only if vapor chamber contact points are a thing, and I don't think they currently are. I think the Titan XP is simply too thermally dense for the current heatsinks.

-

So, I was right? This new design will be Clevo's new "standard", so later models should be upgradeable even though the MXM standard design is dead? Kind of like a turning point? I was hoping this would be the case. Sucks for previous-gen owners, but that'd leave some hope on the table. Hehehe. Headphones, sir. Who cares about a little jet engine ;). But even so, that's a huge discrepancy. I at least hope that was an outlier and it'll get down to 70-75c if the other is at ~64c (hopefully just a bad paste job or whatnot). I hope to see more! I hope you get your review unit soon =D. This sounds good and bad at the same time, but as long as a 4.5GHz or higher chip can handle the load, I'm good. I don't care if max fans are required; as long as I can heavily stress both (gaming at 120fps while doing some solid CPU encoding, either livestreaming or recording) and be fine, I'll be good with it =D.

-

Eurocom sells upgrades for MSI, so they should be a direct (if a bit expensive) source for MSI cards. You could also try HIDevolution, though I don't know for certain if they sell upgrade kits, or are allowed to cross-sell them if you can't show them you own an MSI laptop. The cards only plug into some roomier models, possibly the P7xxZM/P7xxDM. I can tell you for certain that the 1070 will NOT fit in my laptop, in either the primary or slave slot. And I don't expect it'll fit in your P150EM either, though I could be wrong. (Not that we could cool them easily, heh. Or that I have ANY intention of upgrading this laptop whatsoever; a new model is just better in every way now that 120Hz screens are a thing again.)

-

Yes, I have a question, @Prema: how much of an anomaly was that 90c 1080 in the Heaven benchmark? xD The other card running at 64c is something I'm sure could be managed in my country, but the one at 90c? In Canada, possibly in an A/C room? I couldn't even tell whether the fans were maxed or not. Hopefully that's indicative of some other issue beyond the base cooling config; otherwise that machine won't even run at stock for most people (especially as it's still "summer" for much of the world).

-

Now, I thoroughly dislike ZDNet and most of their authors; however, the information here seems fairly good. http://www.zdnet.com/article/french-authorities-serve-notice-to-microsoft-for-windows-10-privacy-failings/ Basically, as we all know, W10 is extremely overbearing with its information collection, and now Microsoft is getting what is essentially a "change or we take legal action" order from the French data protection authorities. I can only hope the rest of the world outside the EU takes notice and updates their consumer protection laws.