Another Tech Inferno Fan

Posts posted by Another Tech Inferno Fan

-

I was talking about the original SG01 - If there are variations of it then I don't know, the seller didn't mention.

Never mind though, it's been taken by someone else.

-

For those wondering why the Silverstone SG01 is not listed in the OP:

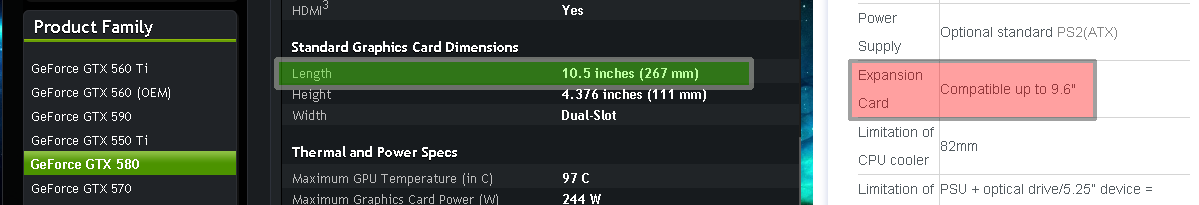

(Left: geforce.com page for the GTX 580. Right: Silverstone's page for the SG01)

Sigh. And I thought I could snap up the SG01 that just went on sale on a local classifieds site for $10 and stop mounting my eGPU in an open plastic basket with zip-ties and power cables hanging everywhere. That said, there's no reason the SG01 wouldn't work if you were using a short card.

EDIT: Can anyone who has an SG01 answer this: What is preventing longer cards from being installed and could you just saw it off to make more space?

-

Mine is red.

Always has been, always will be.

There is also only one LED mounted on the PCB, so either I am missing something and being an idiot, or red light is the only light you'll ever see.

-

On 7/24/2016 at 1:53 AM, Olehkh said:

new v1,42

To my knowledge, after upgrading to BIOS v1.42 it is impossible to roll back to any earlier version, since the v1.42 BIOS was meant to fix the SMM "Incursion" attack vulnerability.

Flash at your own risk.

-

Run any sort of stress test like FurMark or run GPU-Z's internal render test.

The bus interface on the eGPU should automatically switch to PCIe 2.0 when you do that.

Otherwise, you have the problem where it's stuck at PCIe 1.1. There is plenty of information on fixing this - I suggest looking around on this board.

You may have to use eGPU Setup 1.x in order to force gen2 speeds.
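For a rough idea of what being stuck at PCIe 1.1 costs you, here is a minimal sketch of the theoretical per-lane bandwidth. It only uses the published signalling rates and line encodings; packet and protocol overhead are ignored, so real-world throughput will be lower. The function name is made up for illustration.

```python
# Per-lane signalling rate in GT/s and line-encoding efficiency.
# Gen 1.x and 2.0 use 8b/10b encoding; 3.0 uses 128b/130b.
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
}


def lane_bandwidth_mb_s(gen: str, lanes: int = 1) -> float:
    """Theoretical one-direction bandwidth in MB/s, before protocol overhead."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    # GT/s * efficiency = usable Gbit/s; divide by 8 for GB/s; * 1000 for MB/s.
    return rate_gt_s * efficiency / 8 * 1000 * lanes


for gen in PCIE_GENERATIONS:
    print(f"PCIe {gen} x1: {lane_bandwidth_mb_s(gen):.0f} MB/s")
```

An x1 link at gen 1.1 has half the raw bandwidth of the same link at gen 2.0, which is why it's worth checking in GPU-Z.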

-

Open GPU-Z and check if it's running at PCIe 2.0.

-

The EXP GDC product page has a good chart outlining what cards are ideal for PCIe x1 gen 2: http://www.banggood.com/EXP-GDC-Laptop-External-PCI-E-Graphics-Card-p-934367.html

I don't know if there's much truth to it, since I presume different applications might utilise the PCIe bus differently.

It should theoretically be possible to benchmark every card at PCIe x1 speeds to determine at what point additional graphics horsepower becomes meaningless due to PCIe x1 bottlenecks.
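If someone did collect those numbers, finding the cut-off could look something like the sketch below. Everything here is hypothetical: the `Result` fields, the 5% threshold and the function name are my own placeholders, and you would have to supply your own benchmark scores.

```python
from typing import NamedTuple, Optional


class Result(NamedTuple):
    card: str        # card name (whatever label you like)
    full_bus: float  # benchmark score in a desktop x16 slot
    x1: float        # score for the same card over a PCIe x1 link


def saturation_point(results: list[Result], min_gain: float = 0.05) -> Optional[str]:
    """Return the last card that was still worth its upgrade over an x1 link.

    Cards are sorted by full-bus score, weakest first. Walking up the list,
    a step counts as wasted when the x1 score improves by less than
    `min_gain` (a fraction, 5% by default) over the previous card. The card
    just before the first wasted step is returned, or None if every step
    still pays off.
    """
    ordered = sorted(results, key=lambda r: r.full_bus)
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.x1 < prev.x1 * (1 + min_gain):
            return prev.card
    return None
```

The answer will differ per application, since each one loads the bus differently, so this would have to be run once per benchmark rather than once per card.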

-

35% what?

-

https://en.wikipedia.org/wiki/ExpressCard#ExpressCard_2.0

There was never an "ExpressCard 3.0" standard which allowed connection to PCIe 3.0.

I hope I am wrong, however.

-

1

1

-

-

1 hour ago, benjaminlsr said:

One thing though: Double check why only two of the six 12v pins going to the GPU are wired on your picture.

1. There are only three 12V pins on that card. Not six.

2. ATX specification shows that the middle 12V pin is "not connected", though most PSUs and PCIe devices have it wired as 12V. This is negligible however.

-

Read around before posting questions.

-

>he thinks that stating his make of laptop is sufficient to determine compatibility

It's like asking if I can install a magnetic tape reader on my IBM.

-

Stores like Best Buy exist because of and for people like you.

If you don't even know how electricity works, this is not the forum for you. This place is for enthusiasts who know what they're doing.

TLDR: Just buy another PC. It saves everyone the hassle.

-

On 12/3/2014 at 5:31 PM, angerthosenear said:

I've used the Kraken X40 and the Kraken X61 only on my CPU.

I would've used the X40 with the G10 on my GPU, except I changed builds to a miniITX. Sooooo:

X40 is very suitable for a GPU. Or any other similar cooler of this size.

Also, the Corsair H60 will not work. It has the more square styled pump block right? You need one of the round versions. Like that of the X40/1 X60/1 - or for Corsair cooling solutions: H50, H55, H75, H105, H110. Essentially any cooler with that round pump base - Asetek built.

Interesting.

http://www.overclock.net/t/1203528/official-nvidia-gpu-mod-club-aka-the-mod

It seems a fair number of people on overclock.net have been successful using Corsair H60s.

-

1 hour ago, Dschijn said:

In theory, seems good. But I am not feeling well, when thinking about so much power going through that barrel plug.

The worst that could happen is that the DC jack or the connector burns out.

If the 6 (or 5) pins for power on the Dell DA-2 can push 220 W, then I'm sure the amount of surface area on one of those standard DC power jacks can handle the same.

Will the wiring get hot at full 220W load? Probably.

The solution? Use thicker gauge wire. Preferably the kind you use to jump-start combustion engines.
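To put rough numbers on "thicker gauge", here is a back-of-the-envelope sketch of the current involved and the heat dissipated in the wire itself. It assumes a 12 V rail, plain copper wire, and the standard AWG diameter formula; the function names and the example run length are made up for illustration. It does not model contact resistance at the jack, which is the part most likely to burn out.

```python
import math

COPPER_RESISTIVITY = 1.72e-8  # ohm-metres, annealed copper at roughly 20 C


def awg_diameter_m(awg: int) -> float:
    """Conductor diameter in metres from the standard AWG formula."""
    return 0.127e-3 * 92 ** ((36 - awg) / 39)


def resistance_per_metre(awg: int) -> float:
    """Resistance in ohms per metre of a solid copper conductor."""
    area = math.pi * (awg_diameter_m(awg) / 2) ** 2
    return COPPER_RESISTIVITY / area


def load_current(power_w: float, volts: float = 12.0) -> float:
    """Current in amps drawn at the given power (12 V assumed by default)."""
    return power_w / volts


def wire_heat_w(power_w: float, awg: int, run_length_m: float,
                volts: float = 12.0) -> float:
    """Watts dissipated in the wire, counting both the +12V and ground leg."""
    current = load_current(power_w, volts)
    # Current goes out on one conductor and returns on the other,
    # so the total conductor length is twice the run length.
    return current ** 2 * resistance_per_metre(awg) * 2 * run_length_m


if __name__ == "__main__":
    print(f"Current at 220 W: {load_current(220):.1f} A")
    for gauge in (18, 14, 10):
        print(f"AWG {gauge}, 0.5 m run: {wire_heat_w(220, gauge, 0.5):.2f} W lost as heat")
```

Heat in the wire scales with the square of the current, so at roughly 18 A every step down in AWG number makes a noticeable difference.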

-

If you knew anything about computer performance at all, you would know that there are these programs called 'benchmarks'.

I hear they're the bee's knees!

-

Laboratory bench PSUs can be a very nice tool to have if you have the money for one.

However, for most people, a quality ATX PSU is more than sufficient.

-

Use notebook drivers.

-

6 hours ago, yeahman45 said:

wire

Cable.

6 hours ago, yeahman45 said:

is there a way to extend it?

Yes.

6 hours ago, yeahman45 said:

is it a standard hdmi cable?

1. Oh, so now you realise it's a cable?

2. If it were, it wouldn't be mPCIe/EC on the other side.

6 hours ago, yeahman45 said:

possible to use an hdmi extender?

-

No.

Just buy PE4C V3.

-

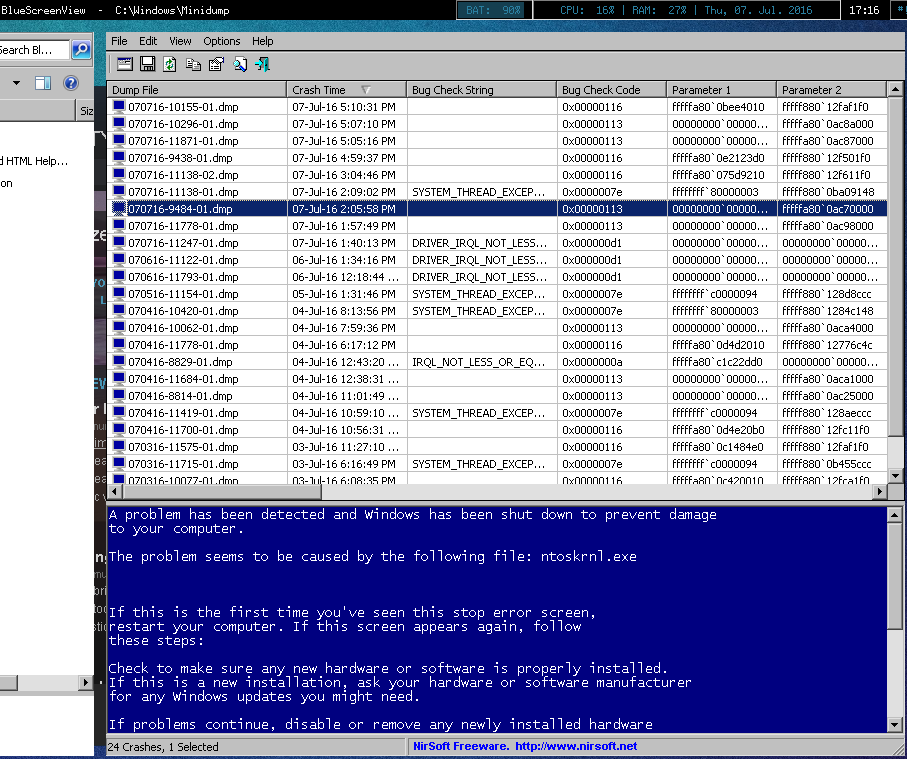

Hate to bump, but just so people realise the extent of this problem:

That is 9 bluescreens in 4.5 hours on July 7th.

I've yet to try the obvious possible solution of wrapping the cable in foil, however.

Perhaps I should try writing "No more bluescreens" on a tanzaku and tie it to a bamboo tree?

--

This also happens right after startup sometimes. So every program that is set to open on startup has a small chance of losing all its data when the system crashes.

-

On 7/3/2016 at 10:03 PM, MD_mania said:

I don't understand why the wire would degrade the signal so much. I thought the signal doesn't degrade much with digital signals.

Except this is a high-frequency differential signal.

If your statement were true, nobody would need to use anything more than CAT5 cabling for ethernet, and CAT6 STP would never exist.

On 7/3/2016 at 10:03 PM, MD_mania said:

and that's why HDMI is so great

Except this has nothing to do with HDMI. The signal is PCIe.

On 7/3/2016 at 10:03 PM, MD_mania said:

Can someone explain to me what is happening?

You've already figured it out. See first line of this post.

-

6 hours ago, Atom said:

No, it is a proprietary pinout.

6 hours ago, Atom said:

Now is there a place to purchase an 8 pin EPS to 8 pin PCIe converter so that I can convert it again into 6 pin?

The only thing that matters is that the 12V and ground lines are connected as per the 8-pin PCIe pinout. Refer to the pinout diagrams at the top of this page.

-

Worth noting that in the above post, the GPU (R7 240) is very underpowered and will almost never be bottlenecked by bus utilisation.

It is also a Radeon card, which implies that it's (probably) running on an external monitor, which then implies that there is no PCIe bus overhead from Optimus.

-

Whoops, double-post.