Posts posted by RokasK

-

-

Soooo... next testing step would have been to keep everything as it is (the breadboard) except pull out the TX, RX and clock pairs and solder them together. As memory serves me, there are no other information channels.

-

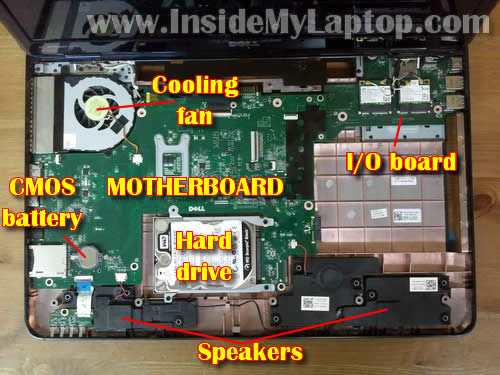

This is not a call for help, this is my story - I tried something new and did not succeed. I did not see anyone trying to solder a mPCIE adapter themselves, so this may very well be the first warning for future hobbyists. Also, this is a verification that Dell N7110 laptop (motherboard version DA0R03MB6E1) accepts an eGPU without any extra tinkering.

So I had the idea that a PE4C for 80$ is too expensive and I can make my own, since it's just a passive adapter - no transcoding required. So I bought these 3 things:

The idea was:

1. cut the extension cable AND the hdmi cables in half

2. solder male extension cable part to female hdmi cable part (just the required PCIe connections and with as little distance between them)

3. Solder female extension cable part to male hdmi cable part

4. put step 2 results in my laptop with the hdmi female leading out the side

5. put step 3 results in a box, plug the cheap adapter into it, a video card into that

Done.

P.S. In Dell N7110 the mpcie is in an awkward spot. Was willing to desolder a VGA connection and use that chassis hole for the eGPU.

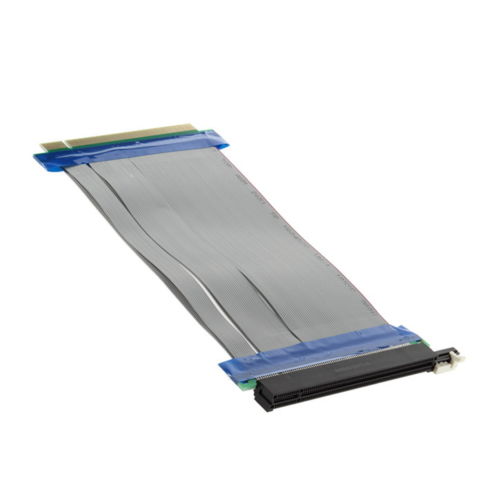

At first I tested if there is any functionality at all - took the extender, plugged it in my laptop, connected the adapter, the video card - success! Windows 10 recognized the video card, installed drivers, and I was able to play Fallout 4 on a laptop that had only Intel HD graphics and could not even launch the game before. Downside - only PCIe V1 speeds. I thought maybe it's due to the adapter being lame - contacted the seller, he repeated that it's only meant to work at V1 speeds. I had planned to use V2 speeds, so I made a new plan. Bought a PCIe extender:

Cut it in half. Now the plan was to modify step 3 by soldering the female part of this to the male part of the hdmi cable thus making IT the adapter.

Now came the time to find out which pins from the mPCIe to use. Did as much research as I could, cross-referencing with a discovered success story of extender to expresscard, reading the hw-tools schematics and anything I could think of. This was my last attempt (also, bought an arduino breadboard):

Yet still, the computer would not recognize the video card. There was some reaction - when the video card is powered, the fans spin at max speed, but when I power on my laptop (with this connected), the fans slow down to minimal speed. At this time after a few months of work my motivation ran dry and I gave up. The last thing I found was a mention that the data transfer cables not only go in pairs, but also there must be a strict value of resistance between them, or through them... (https://boundarydevices.com/wp-content/uploads/2014/12/PM2-C.pdf) That was it, I did what I had energy for and it was time to call it quits. Now I am selling all the remaining parts

Also, I add my notes here in "Open Document" format for those who would wish to succeed me:

-

Hi,

I am doing a DIY eGPU dock, but am only getting v1 support. My laptop supports v2, my graphics card supports v2, but the adapter "does not". Now, the question is, how the hell does an adapter cable influence this? As I researched, the difference between v1 and v2 is data transfer frequency, not anything in the metal. So how does the adapter, which does no recoding, just rewiring, limit possible transfer frequency?

The adapter:

http://www.ebay.com/itm/381392799818

My current connection:

This flexible cable was used:

http://www.ebay.com/itm/151575020362

-

21 minutes ago, Brian said:

Scaling... that's what I missed... But this last one... This one is what I was after - x1 configuration shows barely any inferiority... amazing! I guess I will keep the possibilities in the back of my head about bigger better cards

though... the possibility of my motherboard choking the bandwidth by routing data through the chipset.. gotta check that out...

Thanks, Brian!

Yet... other places show other situations...

http://www.tomshardware.com/reviews/pci-express-scaling-analysis,1572-8.html

Looks like different games will behave differently on the same setup.

-

So this is a dilemma I couldn't find addressed enough... The main guide here did warn us beginners that having only x1 seriously impairs the gaming experience with any eGPU. But what does that mean exactly? If we say, that the x1 reduces effectiveness by 50%, does this mean for all eGPUs, or it was 50% for a specific one, 60% for a newer one and 90% if you buy a GTX 980?

I mean, I know the limiting factor is the bandwidth (I know it depends on the PCIe version), so having the newest GPU would have no purpose if your laptop is still PCIe 2.0, right? Or does a newer card do something magical, where it is able to squeeze more out of the same bandwidth? This guy addresses this very topic:

http://www.sevenforums.com/graphic-cards/279406-external-gpu-egpu.html

Quote:

now since thats out of the way, my opinion is if you have a PCI 2.0 capable laptop or x2 link possible, my suggestion is to get either a Nvidia GTX 4xx or 5xx series GPU. i chose a PNY GTX 560Ti OC edition. i prefer nvidia over ATI because of 2 reasons. 1) i found and others found that they are more compatible then ATI and 2) nvidia allows for something called "optimus" to be engaged only if you have a 4xx, 5xx series, an iGPU, and x1 link which will speed up things a lot for DX9 and DX10 games. the main thing to do on your part is research. research everything about your laptop, figure out if you have a mPCI slot (note that some WWAN chips will NOT work because there not fully connected, however WLAN WILL work) or EC (express card slot). then figure out if your notebook can be configured for x1 or x2 link. also do not get an insane GPU, because no matter what GPU u get, it will not be able to use 100% of it due to the bandwidth limitation. for my laptop, it would not make anymore sense to get anything higher then a ATI 6770 or GTX 560

I mean, if this is true, then there is really no reason to buy high-end cards...
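To put rough numbers on the bandwidth question, here is a minimal Python sketch that works out the theoretical one-direction throughput of a PCIe link from its signalling rate and line encoding. The rates and encodings are the published per-generation figures; the function name `lane_bandwidth_mb_s` is just made up for this example, and real-world throughput will be lower because protocol overhead is ignored.

```python
# Theoretical PCIe throughput per direction, from signalling rate and encoding.
# Protocol/packet overhead is ignored, so real numbers will be lower.
PCIE_GENERATIONS = {
    "1.x": (2.5e9, 8, 10),     # 2.5 GT/s, 8b/10b encoding
    "2.0": (5.0e9, 8, 10),     # 5.0 GT/s, 8b/10b encoding
    "3.0": (8.0e9, 128, 130),  # 8.0 GT/s, 128b/130b encoding
}


def lane_bandwidth_mb_s(generation: str, lanes: int = 1) -> float:
    """Return usable bandwidth in MB/s (1 MB = 10**6 bytes), one direction."""
    transfers_per_s, payload_bits, line_bits = PCIE_GENERATIONS[generation]
    # Only payload_bits out of every line_bits on the wire carry data.
    data_bits_per_s = transfers_per_s * payload_bits / line_bits
    return data_bits_per_s / 8 / 1e6 * lanes


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"PCIe {gen} x1:  {lane_bandwidth_mb_s(gen):.0f} MB/s")
    print(f"PCIe 2.0 x16: {lane_bandwidth_mb_s('2.0', lanes=16):.0f} MB/s")
```

So an x1 v2 link has one sixteenth of the raw bandwidth of a desktop x16 v2 slot, and an x1 v1 link half of that again. How much of that a given game actually needs is a separate question, which is why the benchmarks differ from title to title.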

-

So I am starting my own eGPU project, but one thing still bothers me - the power consumption of the card. I bought this used device:

http://www.scan.co.uk/products/2gb-gigabyte-gt-640-hd-series-28nm-1800mhz-gddr3-gpu-1050mhz-shader-clock-1050mhz-384-cores-2x-dl-dv

I have all the components for my eGPU system ordered and I am now missing a power supply. The specifications say that it draws up to 65W, yet on the same page it says „System power supply requirement: 350W“. Now, I am thinking that by "system" they mean motherboard, cpu, ram, hdd and that kind of stuff. My system will be only one component... And this component doesn't even have any external power plugs - it feeds through the PCIe plug...

But since on many pages the required power for the card is bluntly stated to be the 350W, I would like to hear some more opinions, cause there is a difference in price whether one is buying a 100W or a 400W PSU. What do you think?
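For what it's worth, the arithmetic behind this question can be sketched in a few lines of Python. The 75W figure is the maximum an x16 graphics slot is specified to deliver, and the 65W is the card's stated draw from above. The 50% headroom, the function name `psu_recommendation` and the worst-case assumption that everything comes off the 12V rail are my own assumptions, not anything from the card's spec sheet.

```python
# Rough PSU sizing for a slot-powered card in an eGPU box.
PCIE_X16_SLOT_LIMIT_W = 75.0  # max power an x16 graphics slot may supply


def psu_recommendation(card_max_w: float, headroom: float = 0.5) -> dict:
    """Estimate a PSU target for a single card (headroom is an assumed margin)."""
    # A card without external power plugs must fit inside the slot limit.
    slot_powered = card_max_w <= PCIE_X16_SLOT_LIMIT_W
    target_watts = card_max_w * (1.0 + headroom)
    # Worst case: assume the whole load sits on the 12 V rail.
    amps_12v = target_watts / 12.0
    return {
        "slot_powered": slot_powered,
        "target_watts": target_watts,
        "amps_12v": amps_12v,
    }


if __name__ == "__main__":
    result = psu_recommendation(65.0)
    print(f"Card fits in slot power budget: {result['slot_powered']}")
    print(f"PSU target: about {result['target_watts']:.0f} W")
    print(f"12 V rail should handle at least {result['amps_12v']:.1f} A")
```

With these assumptions the card alone needs something in the 100W class, not 350W. The sketch ignores whatever the adapter board itself draws, so check the 12V rail rating on the PSU label and not just the total wattage.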

DIY "mPCIE to PCIe adapter" failure


The mpcie pins are very tiny, so you need a very small soldering iron and steady hands. You also need to check with a multimeter which pcie pin on the beast transfers to which pin of the hdmi cable - it will be several pins to one cable, so don't stop at the first. That's sort of it
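If you want to keep track of the multimeter readings, a small Python sketch like the one below can group them per wire, so the "several pins to one cable" cases stand out. The pin numbers and wire names in the example are invented placeholders, not a real pinout, and the function names are made up for illustration.

```python
from collections import defaultdict


def group_by_wire(readings):
    """Group continuity readings (connector_pin, wire_label) by wire."""
    wires = defaultdict(list)
    for pin, wire in readings:
        wires[wire].append(pin)
    return {wire: sorted(pins) for wire, pins in wires.items()}


def shared_wires(wire_map):
    """Return only the wires that more than one connector pin leads to."""
    return {wire: pins for wire, pins in wire_map.items() if len(pins) > 1}


if __name__ == "__main__":
    # Hypothetical readings: every (pin, wire) pair where the meter beeped.
    readings = [
        (4, "shield"), (9, "shield"), (15, "shield"),
        (11, "white"), (13, "green"),
        (23, "blue"), (25, "red"),
    ]
    wire_map = group_by_wire(readings)
    for wire, pins in wire_map.items():
        print(f"{wire}: pins {pins}")
    print("Shared:", shared_wires(wire_map))
```

Probing every pin against every wire, and not stopping at the first beep, is what fills in the shared entries.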