Tech Inferno Fan

Everything posted by Tech Inferno Fan

-

Actually, can you run GPU-Z while the GTX1060 is under load? Please observe the link speed it reports. It might be x4 2.0 (16Gbps) or x4 3.0 (32Gbps). Your HWiNFO output shows it running at x4 2.0 (16Gbps), but that may be because the link is downgraded when idle to save power. Putting the card under load will show the full bandwidth available.
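The 16Gbps and 32Gbps labels follow from the per-lane signalling rate and line encoding of each PCIe generation. A minimal sketch, assuming only the standard Gen1/Gen2/Gen3 rates (the `link_gbps` helper is hypothetical, for illustration). Note that x4 3.0 works out to about 31.5Gbps of usable bandwidth, which is conventionally rounded to 32Gbps.

```python
# Per-lane PCIe signalling rate (GT/s) and line-encoding efficiency.
# Gen1/Gen2 use 8b/10b encoding; Gen3 uses 128b/130b.
PCIE_GEN = {
    "1.1": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
}


def link_gbps(lanes: int, gen: str) -> tuple[float, float]:
    """Return (raw GT/s, effective Gbps after encoding) for an xN link (hypothetical helper)."""
    rate, efficiency = PCIE_GEN[gen]
    raw = lanes * rate
    return raw, raw * efficiency


for lanes, gen in [(4, "2.0"), (4, "3.0")]:
    raw, eff = link_gbps(lanes, gen)
    print(f"x{lanes} {gen}: {raw:.1f} GT/s raw, {eff:.1f} Gbps effective")
```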

-

Yes, coil whine on the video card is normal. Nothing to worry about. Yours is the second 32Gbps-NGFF.M2 eGPU implementation that eclipses TB3 performance at a significantly cheaper eGPU adapter price point. Would you mind doing an implementation write-up so other users can build on your pioneering findings? Something along the lines of https://www.techinferno.com/index.php?/forums/topic/10579-17-clevo-p870dm-g-gtx108032gbps-ngffm2-pe4c-v41-win10-bloodhawk/

-

Yes, raise the underside to allow airflow. Consider also drilling holes in the bottom cover directly below the fan so it can draw in cool air. I'll add that 50-60 degrees is a cool running temperature. You should only be concerned once you reach 90 degrees, as that's close to the 95-100 degrees at which the system shuts itself off for self-preservation. There's no undervolting capability. The closest option is an ME FW update to allow BCLK overclocking (4-5%). That would let you run at the equivalent of up to a +1.8 higher multiplier at the same voltage. Review the 2570P owner's thread for info on how to do that if interested.
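The "+1.8 multiplier" figure follows from core clock = BCLK × multiplier: raising BCLK by a fraction p raises the clock by the same fraction as adding multiplier × p. A minimal sketch, assuming the Ivy Bridge stock BCLK of 100 MHz and a hypothetical maximum multiplier of 36 (chosen for illustration because 36 × 5% = 1.8; check your own CPU's value):

```python
BCLK_MHZ = 100.0     # stock BCLK on Ivy Bridge
MAX_MULTIPLIER = 36  # hypothetical max turbo multiplier, for illustration only


def equivalent_multiplier_gain(multiplier: float, bclk_overclock: float) -> float:
    """Extra multiplier steps that a fractional BCLK overclock is worth at a fixed multiplier."""
    return multiplier * bclk_overclock


for pct in (0.04, 0.05):
    gain = equivalent_multiplier_gain(MAX_MULTIPLIER, pct)
    clock = BCLK_MHZ * (1 + pct) * MAX_MULTIPLIER
    print(f"+{pct:.0%} BCLK -> {clock:.0f} MHz, like +{gain:.2f} multiplier")
```

Since only BCLK changes, the multiplier (and hence the voltage the CPU requests for it) stays the same.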

-

US$300 AKiTiO Node TB3 eGFX box (32Gbps-TB3)

Tech Inferno Fan replied to rene_canlas's topic in Enclosures and Adapters

The author of the article explains why the Blade+Core eGPU benchmark performance is better than XPS+Core:
- Blade gets a faster CPU with hyperthreading support
- XPS15 gets a slower CPU with no hyperthreading support

-

Wow, you have an x4 3.0 (32Gbps) link. Once it's stable, it will run faster than 32Gbps-TB3, since there's no TB controller latency, as found at https://www.techinferno.com/index.php?/forums/topic/10579-17-clevo-p870dm-g-gtx108032gbps-ngffm2-pe4c-v41-win10-bloodhawk/ Check your PSU, even testing another one if you can. Also try downclocking your video card. There's also the possibility that the PCIe 3.0 (8Gbps per lane) lanes are having reliability issues. We certainly saw some combinations of video cards and systems having problems maintaining even a lower-spec PCIe 2.0 (5Gbps per lane) signal, requiring a downgrade to Gen1 for stability using Setup 1.30.

-

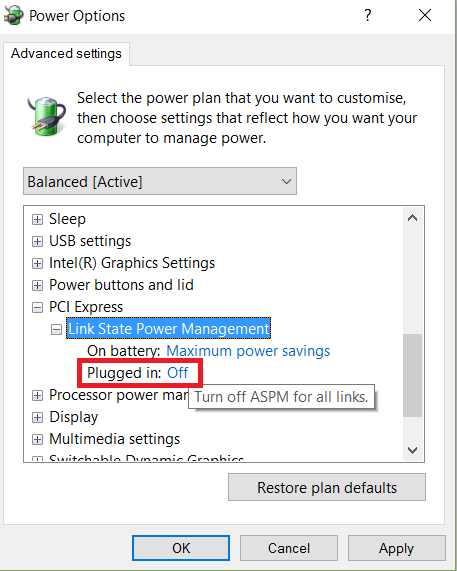

Great investigation. As expected, if you don't have such a BIOS setting, the problem persists with newer NVidia drivers. Can you disable ASPM in your power profile as shown in the pic above (PCI Express -> Link State Power Management -> Off), re-enable ASPM in the BIOS, and see if this power profile setting gives an equivalent fix?
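The same power profile change can also be made from the command line with Windows' `powercfg`. A minimal sketch, assuming the built-in `powercfg` aliases `SUB_PCIEXPRESS` (the PCI Express subgroup) and `ASPM` (Link State Power Management, where 0 = Off), applied to the current scheme for both AC and battery power. The script only prints the commands; run them yourself in a Command Prompt.

```python
# powercfg aliases: SUB_PCIEXPRESS = "PCI Express" subgroup,
# ASPM = "Link State Power Management" (0 = Off, 1 = Moderate, 2 = Maximum power savings).
ASPM_OFF = 0


def aspm_off_commands(scheme: str = "SCHEME_CURRENT") -> list[list[str]]:
    """Build powercfg commands that turn Link State Power Management off."""
    return [
        ["powercfg", "/setacvalueindex", scheme, "SUB_PCIEXPRESS", "ASPM", str(ASPM_OFF)],
        ["powercfg", "/setdcvalueindex", scheme, "SUB_PCIEXPRESS", "ASPM", str(ASPM_OFF)],
        # Re-apply the scheme so the new values take effect.
        ["powercfg", "/setactive", scheme],
    ]


if __name__ == "__main__":
    for cmd in aspm_off_commands():
        print(" ".join(cmd))
```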

-

I don't have an E6440 schematic, so refer to the E6430 one, whose docking connector is the same. See pg 38 of http://kythuatphancung.com/uploads/download/df17d_Compal_LA-7782P.pdf


-

US$379 according to http://www.powercolor.com/global/News.asp?id=1258 :

-

NeNo, as you are using Setup 1.20, it's important that you create an MBR partitioning scheme on your HDD and boot MBR Windows installation media. AFAIK, this can be accomplished by specifying in Bootcamp that you wish to install Win7. Alternatively, Bootcamp 4.0 would do MBR installations.
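To confirm which partitioning scheme a disk actually uses, you can inspect its first sector: a GPT disk carries a "protective MBR" whose partition entry has type 0xEE. A minimal sketch, assuming a standard 512-byte sector 0 (the `partition_style` helper is hypothetical, for illustration). A hybrid MBR/GPT layout would also be reported as GPT here.

```python
MBR_SIGNATURE = b"\x55\xaa"   # boot signature at bytes 510-511
PARTITION_TABLE_OFFSET = 446  # four 16-byte partition entries start here
GPT_PROTECTIVE_TYPE = 0xEE    # partition type used by a GPT protective MBR


def partition_style(sector0: bytes) -> str:
    """Classify a disk from its first 512-byte sector: 'GPT', 'MBR' or 'unknown'."""
    if len(sector0) < 512 or sector0[510:512] != MBR_SIGNATURE:
        return "unknown"
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + 16 * i
        entry = sector0[start : start + 16]
        if entry[4] == GPT_PROTECTIVE_TYPE:  # byte 4 of an entry is the partition type
            return "GPT"
    return "MBR"


if __name__ == "__main__":
    # Synthetic sectors for illustration only (not a real disk image).
    mbr = bytearray(512)
    mbr[510:512] = MBR_SIGNATURE
    mbr[PARTITION_TABLE_OFFSET + 4] = 0x07  # an NTFS partition entry
    gpt = bytearray(mbr)
    gpt[PARTITION_TABLE_OFFSET + 4] = GPT_PROTECTIVE_TYPE
    print(partition_style(bytes(mbr)), partition_style(bytes(gpt)))  # MBR GPT
```

On Linux, for example, `partition_style(open("/dev/sda", "rb").read(512))` (run as root; the device path is just an example) reads the real first sector.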

-

Your startup.bat is already set to replay the pci.bat from the last PCI compaction. No changes are needed.

-

Once you've followed the Setup 1.20 instructions, it overcomes error 12 against the eGPU. The eGPU then becomes configured and can be used. Power starvation from an insufficient PSU, a video card clocked beyond its ASIC's capabilities, or use of a PCIe riser rather than seating the card directly in the enclosure are more likely causes of your BSOD. Try using another PSU and/or downclocking the video card using MSI Afterburner.

-

The Wolfe Thunderbolt GPU enclosure

Tech Inferno Fan replied to Dschijn's topic in Enclosures and Adapters

The Wolfe eGPU Kickstarter campaign has been cancelled:

-

Anybody with problematic R9 xxx or RX xxx cards in Windows, please try disabling power management to see if it makes a difference. It was noted (I believe by @goalque) that R9 xxx and newer cards show large power fluctuations that weren't there on earlier cards, causing instability in TB chassis.

* ULPS power management: http://www.sevenforums.com/tutorials/316913-ulps-ultra-low-power-state-disable-amd-crossfirex.html
* PCIe power management: http://www.sevenforums.com/tutorials/292971-pcie-link-state-power-management-turn-off-windows.html
* Prevent downclocking of the card using ClockBlocker: http://forums.guru3d.com/showthread.php?t=404465

-

Please check your email INBOX and SPAM folders.

-

Nice that it's all PnP. I wonder if Intel made it that way. GPU-Z is indeed correctly reporting an x4 2.0 PCIe link to the AKiTiO Thunder2 TB1 bridge. That bridge connects to the NUC TB1 bridge at 10Gbps downstream. Hence the performance gap compared to your TB2 ZBook, which also uses an x4 2.0 PCIe link but has a 16Gbps-TB2 downstream link.
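The reasoning is that a chain of links runs no faster than its slowest hop. A simplified sketch of that comparison, assuming nominal link rates only and ignoring protocol overhead and TB controller latency (`bottleneck_gbps` is a hypothetical helper):

```python
PCIE_X4_GEN2 = 16.0  # x4 2.0 link from the Thunder2 bridge to the video card


def bottleneck_gbps(*hops_gbps: float) -> float:
    """A chain of links is limited by its slowest hop."""
    return min(hops_gbps)


nuc = bottleneck_gbps(PCIE_X4_GEN2, 10.0)    # NUC: 10Gbps TB1 downstream link
zbook = bottleneck_gbps(PCIE_X4_GEN2, 16.0)  # ZBook: 16Gbps-TB2 downstream link
print(f"NUC: {nuc:.0f} Gbps, ZBook: {zbook:.0f} Gbps")  # NUC: 10 Gbps, ZBook: 16 Gbps
```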

-

mPCIe/EC eGPU troubleshooting steps

Tech Inferno Fan replied to Tech Inferno Fan's topic in DIY e-GPU Projects

This can happen due to incorrect initialization of the video card, making it present with the wrong PCI ID. I've seen it mostly with AMD cards that use hotplugging or eGPU delay switches. Have you tried instead sleeping the machine, jumpering your power supply to be permanently ON as described in the opening post, disabling all the delay switches on the eGPU adapter, then resuming the machine and doing a Device Manager rescan? Alternatively, try reflashing the VBIOS.

-

eGPU experiences [version 2.0]

Tech Inferno Fan replied to Tech Inferno Fan's topic in DIY e-GPU Projects

Use CUDA-Z to measure the Host-to-Device bandwidth and compare it against the following reference. x1 1.1 would be ~190 MiB/s, x1 2.0 ~380 MiB/s.

Reference CUDA-Z Host-to-Device bandwidth:
* NGFF.M2-32Gbps (x4 3.0): 2842 MiB/s
* TB2-16Gbps (x4 2.0): 1258 MiB/s
* TB1-10Gbps: 781 MiB/s
* TB1-8Gbps (x2 2.0): 697 MiB/s
* EC2-4Gbps (x1 2.0): 373 MiB/s

-
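A measured CUDA-Z figure can be matched against the reference values quoted above to guess which link you are actually getting. A minimal sketch using those numbers (`closest_link` is a hypothetical helper); note that the TB1-8Gbps and TB1-10Gbps figures are close, so a nearest match between them is only a rough indication.

```python
# Reference CUDA-Z Host-to-Device figures (MiB/s) from the list above.
REFERENCE_MIBS = {
    "NGFF.M2-32Gbps (x4 3.0)": 2842,
    "TB2-16Gbps (x4 2.0)": 1258,
    "TB1-10Gbps": 781,
    "TB1-8Gbps (x2 2.0)": 697,
    "EC2-4Gbps (x1 2.0)": 373,
    "x1 1.1": 190,
}


def closest_link(measured_mibs: float) -> str:
    """Return the reference link whose H2D bandwidth is nearest the measurement."""
    return min(REFERENCE_MIBS, key=lambda name: abs(REFERENCE_MIBS[name] - measured_mibs))


print(closest_link(1200))  # TB2-16Gbps (x4 2.0)
print(closest_link(200))   # x1 1.1
```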

I have seen it reported that, just like Windows users without NVidia Optimus, you can start your *windowed* app on an externally attached LCD and drag it across to the internal LCD. On Windows it's then possible to use a DVI or VGA dummy to simulate an external LCD and use window managers like Actual/Ultramon to drag the app from the pseudo-external LCD for display on the internal LCD.

-

Good stuff to get it all going. PCIe power management will switch the eGPU link to 1.1 when idle. Place it under load, say by using the NVidia spinning logo in its control panel, and observe the eGPU state using GPU-Z. It will switch to 2.0 under load.

-

Given 'Legacy' works, may I suggest trying a USB and/or disk image installation of Setup 1.30 next? Set chainloader->mode=MBR, then do Chainloader->test run. If you can chainload to Windows, then you can play around with Gen2 port settings, hotswapping wifi, etc.

-

What music are you listening to right now?

Tech Inferno Fan replied to StamatisX's topic in Off Topic

For the beautiful US scenery -

Usually a GPT installation of Windows is in UEFI mode. You can do a quick test by changing your BIOS setting to 'CSM or legacy' instead of UEFI. Can you boot Windows? If not, you would need to convert from UEFI to MBR for Setup 1.30 to be able to chainload it. Please PM or email me what you need Setup 1.30 for so I can evaluate how best to accomplish that.